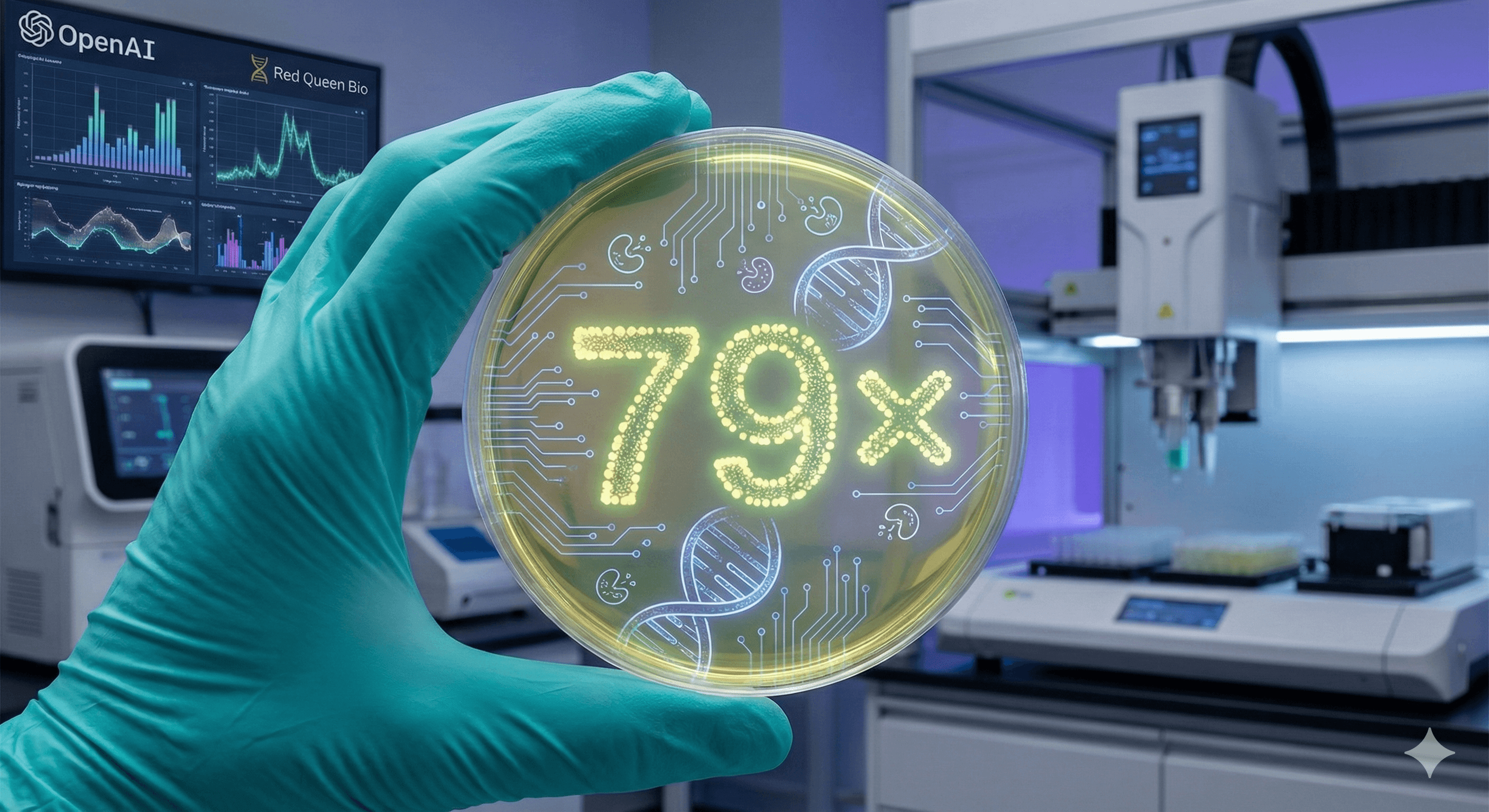

AI Boosts Wet-Lab Cloning Efficiency 79-Fold (OpenAI GPT-5)

OpenAI

Dec 11, 2025

OpenAI reports that GPT-5 improved the efficiency of a standard molecular cloning protocol 79-fold in a controlled wet-lab study with Red Queen Bio. The model proposed novel modifications (including an enzyme-assisted assembly approach) and a separate transformation tweak; humans ran the experiments and validated the results across replicates. Early, but significant.

What happened?

OpenAI worked with Red Queen Bio to test whether an advanced model could meaningfully improve a real experiment. GPT-5 proposed protocol changes; scientists ran the experiments and fed back the results; the system iterated. The result: 79× more sequence-verified clones from the same input DNA than the baseline method, which is how the study defines "efficiency".

Why it matters: cloning is a foundational step in protein engineering, genetic screens, and strain engineering, so higher output per unit of input can shorten cycles and cut costs across much of everyday biology.

What actually changed?

New assembly mechanism: GPT-5 suggested an enzyme-assisted variation that adds two helper proteins (RecA and gp32) to improve how DNA ends find each other and pair, a step that limits many homology-based assemblies. This change alone improved efficiency in the study.

A separate transformation tweak: the model also proposed a handling change during transformation that increased the number of colonies obtained. Together, the assembly change and the transformation change produced the 79× improvement in the study's validation runs.

Note: the team stresses that this was done in a benign system, under strict safety controls, and that the results are preliminary and system-specific: promising, but with no guarantee that they generalize.

How "79× efficiency" was measured

Efficiency here means sequence-verified clones recovered per fixed amount of input DNA, relative to the baseline cloning protocol. OpenAI reports validation across independent replicates (n=3) for the top candidates.
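As a rough illustration of that definition, the sketch below computes a fold change from replicate counts. The function names, the 10 ng input, and all clone counts are assumptions made up for this example (picked only so the arithmetic lands on 79); they are not data from the study, and the study's exact averaging method is not described here.

```python
from statistics import mean


def clones_per_ng(verified_clones: int, input_dna_ng: float) -> float:
    """Sequence-verified clones recovered per nanogram of input DNA."""
    if input_dna_ng <= 0:
        raise ValueError("input_dna_ng must be positive")
    return verified_clones / input_dna_ng


def fold_improvement(candidate_counts, baseline_counts, input_dna_ng: float) -> float:
    """Mean candidate efficiency divided by mean baseline efficiency.

    Assumes both protocols received the same fixed DNA input per replicate.
    """
    candidate = mean(clones_per_ng(c, input_dna_ng) for c in candidate_counts)
    baseline = mean(clones_per_ng(b, input_dna_ng) for b in baseline_counts)
    if baseline == 0:
        raise ValueError("baseline efficiency is zero; fold change is undefined")
    return candidate / baseline


# Illustrative counts for three replicates each (hypothetical, not study data).
baseline_counts = [4, 5, 6]
candidate_counts = [380, 395, 410]

fold = fold_improvement(candidate_counts, baseline_counts, input_dna_ng=10.0)
print(f"{fold:.1f}x")
```

Because both arms use the same fixed input, the input amount cancels out of the ratio; it is kept as a parameter only to mirror the "per fixed amount of input DNA" wording.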

What this does not mean

It does not mean AI is running unsupervised in an open lab. Humans ran the experiments; the model proposed and iterated.

It does not mean the improvement applies to every organism, vector, insert, or workflow. The team notes that the gains were specific to their setup and that broader generalization needs more work.

It does not remove safety concerns. The work followed a preparedness framework and used a constrained, benign system to manage biosecurity risk.

What is genuinely new

Novel, mechanism-based idea: the RecA/gp32 approach formalizes a "helper-assisted pairing" step within a Gibson-style workflow, which is notable because Gibson assembly has been a one-tube, one-temperature method since 2009.

Evidence for the AI-lab loop: fixed prompts and no human steering at the proposal stage, yet the loop still produced a new mechanism and a practical transformation improvement.

Early robotics signal: the team also tested a general-purpose lab robot that executed AI-generated protocols; relative performance tracked the human-run experiments, although absolute yields were lower (there is still room for calibration).
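The propose, run, report, iterate loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the study's software: `run_loop`, `Round`, `LoopLog`, the stub functions, and the result numbers are all hypothetical. The one property it tries to capture is that the prompt stays fixed and the only new information the proposer sees each round is the history of earlier results.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Round:
    proposal: str         # protocol change suggested by the model
    verified_clones: int  # result reported back by the scientists


@dataclass
class LoopLog:
    fixed_prompt: str
    rounds: List[Round] = field(default_factory=list)

    def best(self) -> Round:
        """Round with the highest reported clone count."""
        return max(self.rounds, key=lambda r: r.verified_clones)


def run_loop(propose: Callable[[str, List[Round]], str],
             execute: Callable[[str], int],
             fixed_prompt: str,
             n_rounds: int) -> LoopLog:
    """Iterate: model proposes, humans execute, results feed the next round."""
    log = LoopLog(fixed_prompt)
    for _ in range(n_rounds):
        # The prompt never changes; only the result history grows.
        proposal = propose(fixed_prompt, list(log.rounds))
        result = execute(proposal)  # stands in for the human-run experiment
        log.rounds.append(Round(proposal, result))
    return log


# Hypothetical stand-ins for the model and the bench work.
def stub_propose(prompt: str, history: List[Round]) -> str:
    return f"variant-{len(history) + 1}"


MADE_UP_RESULTS = {"variant-1": 12, "variant-2": 30, "variant-3": 21}


def stub_execute(proposal: str) -> int:
    return MADE_UP_RESULTS[proposal]


log = run_loop(stub_propose, stub_execute, "Improve cloning efficiency.", n_rounds=3)
print(log.best().proposal)
```

Keeping `execute` as a plain callable reflects the division of labour reported in the article: the model never touches the bench, and the same loop could in principle be pointed at a robot instead of a person.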

Practical implications for R&D leaders

Expect faster design-build-test cycles in which benign "model systems" are used for method development and then adapted by domain experts.

Plan for governance: treat AI as a proposal engine inside a safety-first framework (risk review, change control, audit trails).

Investment thesis: if even a fraction of these gains generalizes, cost and time per cloning step could fall substantially, with compounding effects across library construction and screening programs. Independent coverage echoes that potential but cautions against hype.
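One way to picture the governance point above (AI as a proposal engine behind risk review, change control, and an audit trail) is a small state machine in which a proposal cannot be executed until it has been reviewed and approved. The status names, the `Proposal` class, and the actors are assumptions for illustration only; real change-control systems will differ.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple

# Allowed status transitions (hypothetical workflow).
ALLOWED = {
    "proposed": {"risk_reviewed", "rejected"},
    "risk_reviewed": {"approved", "rejected"},
    "approved": {"executed"},
    "rejected": set(),
    "executed": set(),
}


@dataclass
class Proposal:
    summary: str
    status: str = "proposed"
    # Append-only trail of (timestamp, actor, old status, new status).
    audit_trail: List[Tuple[str, str, str, str]] = field(default_factory=list)

    def transition(self, new_status: str, actor: str) -> None:
        """Move to new_status if the workflow allows it, and record who did it."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"cannot move from {self.status} to {new_status}")
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_trail.append((stamp, actor, self.status, new_status))
        self.status = new_status


proposal = Proposal("Hypothetical protocol change suggested by a model")

try:
    proposal.transition("executed", actor="bench scientist")
    blocked = False
except ValueError:
    blocked = True  # execution is refused until review and approval happen

proposal.transition("risk_reviewed", actor="biosafety officer")
proposal.transition("approved", actor="lab head")
proposal.transition("executed", actor="bench scientist")
print(blocked, proposal.status, len(proposal.audit_trail))
```

The refused first call leaves no trail entry, so the audit trail records only transitions that actually happened.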

FAQs

How did GPT-5 reach 79×?

By combining a new assembly mechanism (with helper proteins to improve pairing) and a transformation change, validated against a standard baseline; the metric was verified clones per fixed DNA input. (OpenAI)

Was the AI running the lab?

No. GPT-5 proposed and iterated; trained scientists ran the experiments and uploaded the results. The study deliberately used fixed prompts to measure the model's own contributions. (OpenAI)

Is it safe?

The experiments were carried out in a benign system under strict controls and framed within OpenAI's preparedness approach. The authors explicitly highlight biosecurity considerations. (OpenAI)

Will the same gains show up in my lab?

Not guaranteed. The team stresses that the results are system-specific and early-stage; replication and broader benchmarking are needed. Independent reporters also note the field's history of overstated claims, so healthy skepticism applies. (OpenAI)

What was the baseline?

A Gibson-style assembly workflow, widely used to join DNA fragments. The study positions its changes relative to that baseline. (OpenAI)

Génération

Numérique

UK Office

33 Queen Street,

London

EC4R 1AP

United Kingdom

Canada Office

1 University Ave,

Toronto,

ON M5J 1T1,

Canada

NAMER Office

77 Sands St,

Brooklyn,

NY 11201,

United States

EMEA Office

Charlemont Street, Saint Kevin's, Dublin,

D02 VN88,

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
