AI Cybersecurity Resilience: Protective Measures for 2026

AI Security

26 Nov 2025

As AI models become more capable in cybersecurity, OpenAI and the wider ecosystem are hardening them with layered safeguards, rigorous testing, and collaboration with global security experts. Heading into 2026, the priority is practical resilience: faster detection, safer deployments, and measurable risk reduction.

Why this matters now

Attackers already use automation and AI to probe systems quickly. Defenders need AI that can spot weak signals, correlate alerts, and help teams act faster without introducing new risks. The opportunity is to pair powerful models with disciplined controls and human oversight, improving outcomes without enlarging your attack surface.

Key points

Improved AI capabilities in cybersecurity. Modern models analyse very large event streams, learn normal patterns, and flag anomalies earlier, which shortens mean time to detect (MTTD).

Layered safeguards. Defence in depth now includes model-level controls, policy guardrails, and continuous evaluation, not just perimeter tools.

Partnerships with global security experts. External red teams, incident simulations, and collaboration on standards help close gaps and strengthen defences.

What's new and how it works

AI models are evolving to address real threats across the whole lifecycle:

From data to detection. Models enrich telemetry from endpoints, identity, network, and cloud, and surface correlated insights for analysts.

From prediction to prevention. Pattern recognition highlights suspicious behaviour before it escalates, enabling preventive actions (for example, forced MFA or conditional access).

Rapid response. Secured automation can draft response playbooks, open tickets, and orchestrate routine containment steps, always with approvals and audit trails. For incident workflows and team coordination, see Asana.

Feedback loops. Post-incident lessons, synthetic tests, and red-team findings continuously improve model quality and reduce false positives.
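
To make "learn normal patterns and flag anomalies" concrete, here is a minimal sketch using a z-score against a historical baseline. It is an illustration only: the function name, the threshold of 3 standard deviations, and the sample failed-login counts are all assumptions, and production detection models are considerably more sophisticated.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observed values far from the baseline.

    `baseline` is a list of historical counts considered normal;
    `threshold` is the number of standard deviations that counts as anomalous
    (an assumed default, tune it for your own data).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: treat any different value as anomalous.
        return [(i, v, float("inf")) for i, v in enumerate(observed) if v != mu]

    flagged = []
    for i, value in enumerate(observed):
        z = (value - mu) / sigma
        if abs(z) >= threshold:
            flagged.append((i, value, round(z, 2)))
    return flagged


# Hypothetical hourly failed-login counts for one account.
baseline_counts = [4, 6, 5, 7, 5, 6, 4, 5]
new_counts = [5, 6, 30, 4]

alerts = flag_anomalies(baseline_counts, new_counts)
for index, value, z in alerts:
    print(f"hour {index}: {value} failed logins (z={z})")
```

Only the spike of 30 is flagged here; the other values sit within normal variation. A flagged value would typically feed a preventive action such as forced MFA, subject to the approvals described above.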

Practical steps

OpenAI and industry partners continue to invest in research and development, focusing on robust security frameworks that build on AI's predictive power. Here is how your organisation can apply the same principles:

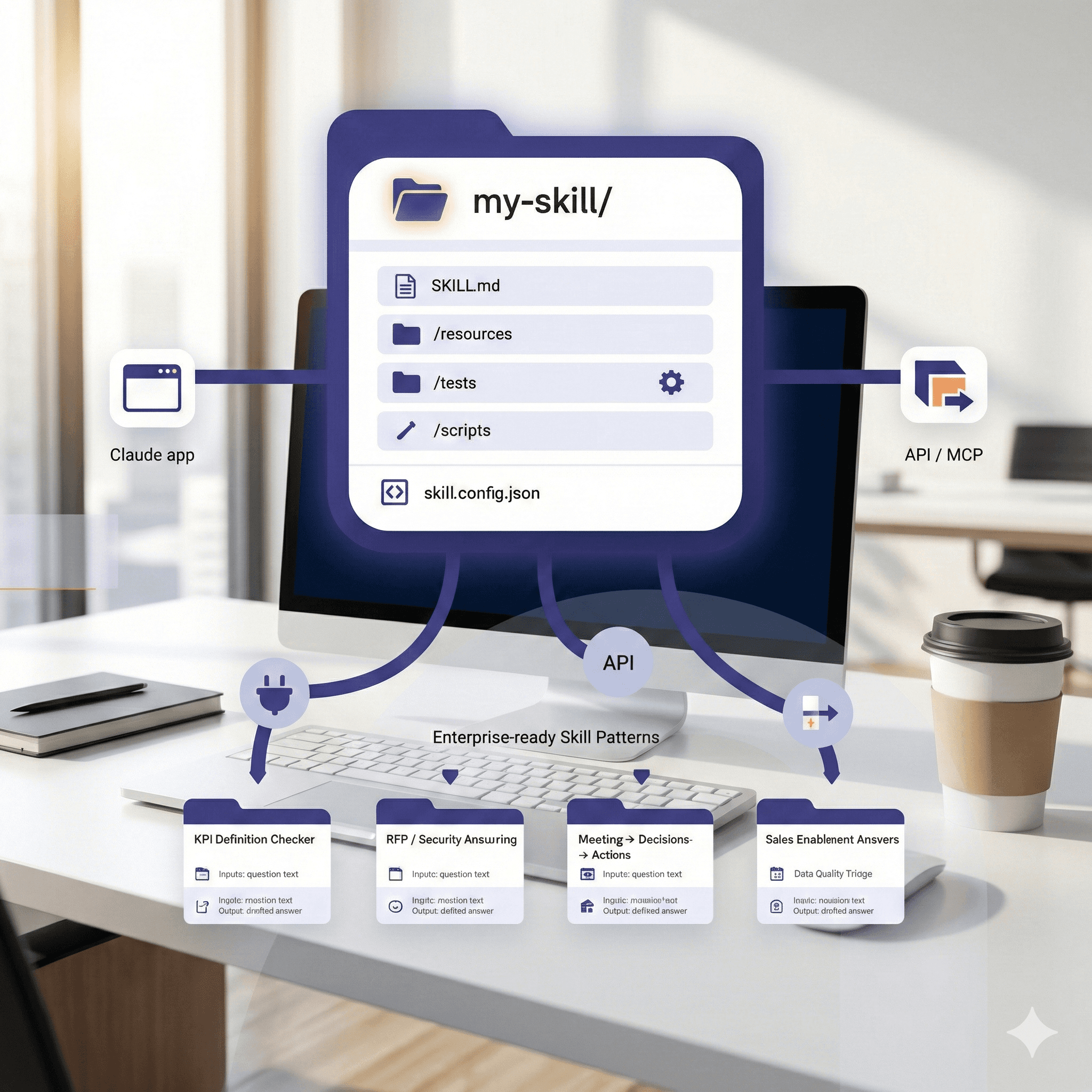

1) Establish layered safeguards for AI use

Build a control stack that covers:

Model safeguards: policy prompts, allow/deny lists, rate limits, sensitive-data filters.

Operational controls: identity-aware access, key management, network isolation, secret rotation.

Evaluation & testing: pre-deployment tests, adversarial prompts, scenario-based drills, continuous drift checks.

Governance: clear ownership, risk registers, DPIAs where applicable, and fast rollback paths. Documenting guardrails and runbooks in Notion keeps teams aligned and audit-ready.
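
The model-safeguard layer above can be sketched as a small gate that runs before any request reaches a model. This is an illustrative sketch, not a reference implementation: the action names, the `guard_request` function, and the two redaction patterns are hypothetical, and a real deployment would rely on a vetted secrets and PII detection library.

```python
import re
import time
from collections import deque

# Hypothetical allow-list of actions the AI assistant may request.
ALLOWED_ACTIONS = {"enrich_alert", "summarize_case", "open_ticket"}

# Illustrative sensitive-data patterns only; not an exhaustive filter.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),       # string shaped like an AWS access key ID
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # string shaped like a US SSN
]


class RateLimiter:
    """Sliding-window limiter: at most `max_calls` per `window_seconds`."""

    def __init__(self, max_calls, window_seconds):
        self.max_calls = max_calls
        self.window = window_seconds
        self.calls = deque()

    def allow(self, now=None):
        now = time.monotonic() if now is None else now
        # Drop timestamps that have aged out of the window.
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            return False
        self.calls.append(now)
        return True


def redact(text):
    """Replace anything matching a sensitive pattern with a placeholder."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def guard_request(action, prompt, limiter, now=None):
    """Apply the layers in order; return (allowed, reason, sanitized_prompt)."""
    if action not in ALLOWED_ACTIONS:
        return False, "action not on allow-list", None
    if not limiter.allow(now):
        return False, "rate limit exceeded", None
    return True, "ok", redact(prompt)
```

The allow-list check runs first so that rejected actions do not consume rate-limit budget, and redaction runs last so that only requests that will actually be sent are rewritten.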

2) Integrate AI with existing security tools

SOC alignment. Feed model outputs into your SIEM/XDR so analysts get enriched, explainable context, not just more alerts.

Automated but approved response. Start with low-risk automations (for example, tagging, case creation, enrichment) before moving to partial containment with human approval, coordinated via Asana.

Quality telemetry. High-quality, well-labelled data improves detection. Use enterprise search such as Glean to surface signals and prior cases quickly.
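
The "automated but approved" progression can be expressed as a simple risk-tier gate. The sketch below is a hypothetical illustration: the action names and the `decide` function are assumptions, and in practice the approval would come from your ticketing or workflow tool rather than a field on a dataclass.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tiers: low-risk actions run automatically,
# containment actions wait for a named human approver.
LOW_RISK = {"tag_alert", "create_case", "enrich_alert"}
NEEDS_APPROVAL = {"isolate_host", "disable_account"}


@dataclass
class ProposedAction:
    name: str
    target: str
    approved_by: Optional[str] = None  # analyst who signed off, if any


def decide(action: ProposedAction) -> str:
    """Return 'execute', 'await_approval', or 'reject' for a proposed action."""
    if action.name in LOW_RISK:
        return "execute"
    if action.name in NEEDS_APPROVAL:
        return "execute" if action.approved_by else "await_approval"
    # Anything not explicitly tiered is refused by default.
    return "reject"


print(decide(ProposedAction("enrich_alert", "alert-123")))
print(decide(ProposedAction("isolate_host", "laptop-42")))
print(decide(ProposedAction("isolate_host", "laptop-42", approved_by="analyst_a")))
```

Rejecting unknown actions by default keeps the automation surface explicit: a new action type has to be placed in a tier deliberately before it can run.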

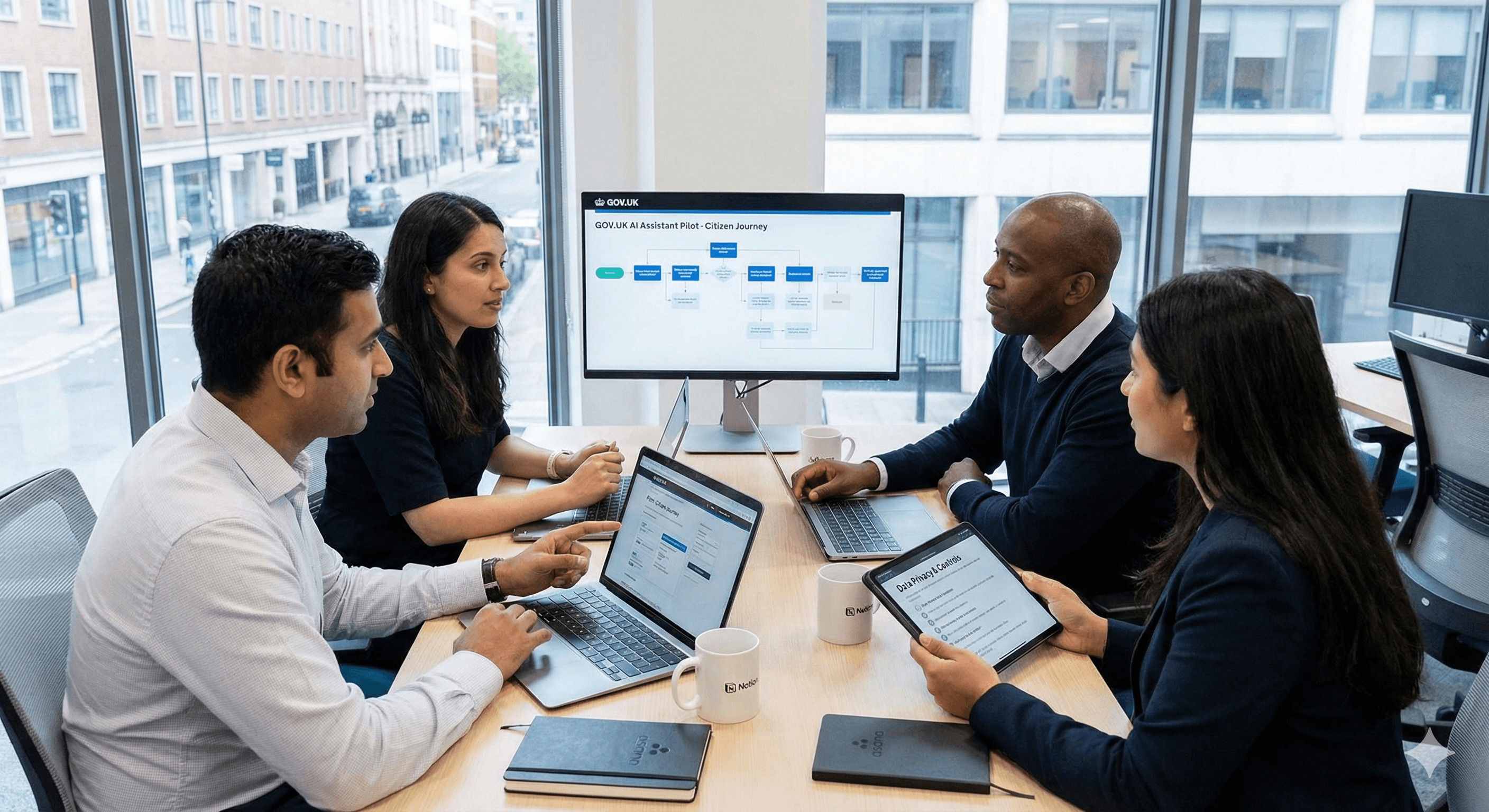

3) Partner with global security experts

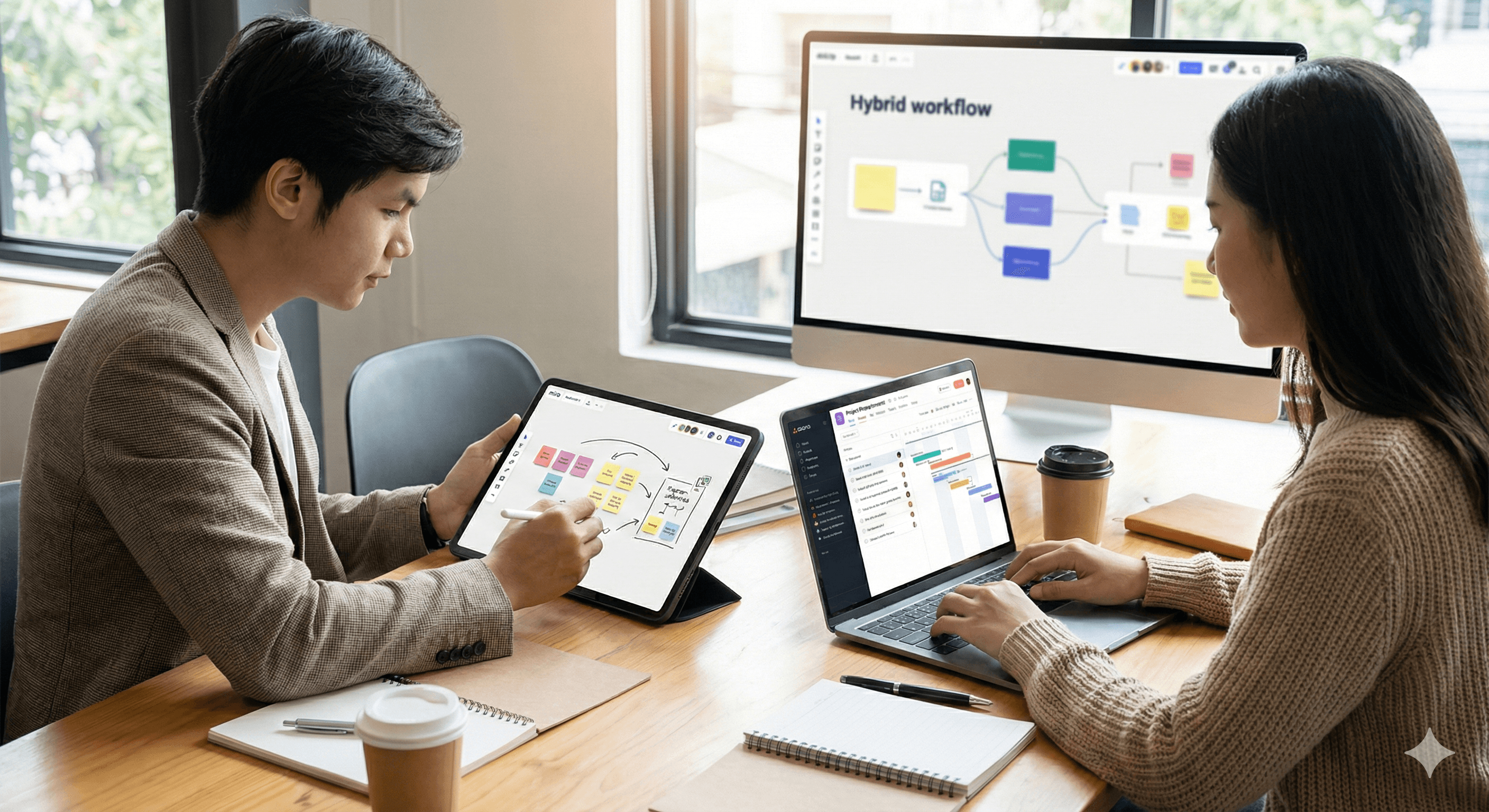

External red team. Commission tests that target both traditional infrastructure and the AI-assisted workflows around it. Visualise findings and remediation plans on collaborative canvases in Miro.

Benchmarking and assurance. Compare model performance against known attack scenarios; track MTTD/MTTR and false-positive rates.

Community collaboration. Engage with standards bodies and trusted researchers to share lessons learned and improve security methods.

4) Build responsible AI security into everyday work

Human in the loop. Require analyst review for material actions until confidence thresholds are met.

Auditability. Log prompts, responses, decisions, and overrides to support forensics and compliance; store and share policies in Notion.

Least privilege and data minimisation. Limit models to the minimum data needed to achieve the security outcome.

Training & culture. Upskill your SOC in AI-assisted triage, playbook creation, and prompt hygiene; use Miro for workshops and tabletop exercises.
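
As one possible way to log prompts, responses, decisions, and overrides so they hold up in forensics, the sketch below chains each audit record to the previous one with a SHA-256 hash. Hash chaining is an assumption of this example, not something the practices above prescribe, and the field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # assumed starting value for the first record


def audit_entry(prev_hash, prompt, response, decision, override_by=None, timestamp=None):
    """Build one audit record whose hash covers its content and the previous hash."""
    record = {
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "response": response,
        "decision": decision,
        "override_by": override_by,
        "prev_hash": prev_hash,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    return record


def verify_chain(entries):
    """Return True if every record is unmodified and correctly linked."""
    prev = GENESIS_HASH
    for entry in entries:
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if entry["prev_hash"] != prev:
            return False
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


first = audit_entry(GENESIS_HASH, "Summarise alert 123", "Likely credential stuffing", "approved")
second = audit_entry(first["hash"], "Propose containment", "Disable account", "overridden",
                     override_by="analyst_a")
print(verify_chain([first, second]))
```

Editing any earlier record changes its hash and breaks the link to the next one, so tampering is detectable as long as the latest hash is stored somewhere the writer cannot alter.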

5) Measure what matters

Define KPIs that executives understand:

Risk reduction: incidents avoided, reduced severity, dwell-time trends.

Efficiency: analyst time saved, cases handled per shift, automation adoption rate.

Quality: detection precision/recall, false positive/negative ratios, post-incident improvement actions completed. Track remediation tasks in Asana and knowledge artefacts in Notion.
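
The quality and speed KPIs above reduce to a few lines of arithmetic. The sketch below uses invented sample data and assumes MTTD is measured from occurrence to detection and MTTR from detection to resolution; organisations define these intervals differently, so adjust the pairs to match your own definitions.

```python
from datetime import datetime


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def mean_minutes(pairs):
    """Mean gap in minutes between (start, end) datetime pairs."""
    gaps = [(end - start).total_seconds() / 60 for start, end in pairs]
    return sum(gaps) / len(gaps)


# Hypothetical incident records.
incidents = [
    {"occurred": datetime(2026, 1, 5, 9, 0),
     "detected": datetime(2026, 1, 5, 9, 30),
     "resolved": datetime(2026, 1, 5, 11, 30)},
    {"occurred": datetime(2026, 1, 9, 14, 0),
     "detected": datetime(2026, 1, 9, 14, 10),
     "resolved": datetime(2026, 1, 9, 15, 10)},
]

mttd = mean_minutes([(i["occurred"], i["detected"]) for i in incidents])
mttr = mean_minutes([(i["detected"], i["resolved"]) for i in incidents])

# Hypothetical detection outcomes over one month.
precision, recall = precision_recall(tp=40, fp=10, fn=20)

print(f"MTTD: {mttd:.0f} min, MTTR: {mttr:.0f} min")
print(f"precision: {precision:.2f}, recall: {recall:.2f}")
```

With this sample data, detection gaps of 30 and 10 minutes average to an MTTD of 20 minutes, and resolution gaps of 120 and 60 minutes average to an MTTR of 90 minutes.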

Realistic use cases

Identity anomalies: AI flags unusual access paths or privilege-escalation patterns and opens an approved containment workflow in Asana.

Phishing triage: Models cluster reported emails, extract IOCs, and enrich SIEM cases, saving minutes on each investigation; analysts refer to prior cases via Glean.

Cloud posture: Continuous analysis suggests least-privilege adjustments and highlights misconfigurations before they are exploited; teams storyboard remediation in Miro.

Third-party risk: Text models summarise vendor security artefacts and map them to policy requirements; documentation is centralised in Notion.
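
The phishing triage case can be illustrated with a small sketch that extracts indicators of compromise (IOCs) from reported emails and groups the emails that share a URL. The regular expressions are deliberately simple assumptions (for example, the IPv4 pattern does not validate octet ranges), and the sample messages are invented; a model-based pipeline would sit on top of extraction like this.

```python
import re
from collections import defaultdict

# Simplified patterns for illustration; real extractors handle defanged
# indicators, trailing punctuation, IPv6, and more hash types.
URL_RE = re.compile(r"https?://[^\s<>\"']+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
SHA256_RE = re.compile(r"\b[a-fA-F0-9]{64}\b")


def extract_iocs(text):
    """Return de-duplicated, sorted URLs, IPv4 addresses, and SHA-256 hashes."""
    return {
        "urls": sorted(set(URL_RE.findall(text))),
        "ips": sorted(set(IPV4_RE.findall(text))),
        "sha256": sorted({h.lower() for h in SHA256_RE.findall(text)}),
    }


def group_by_url(emails):
    """Map each URL to the IDs of the reported emails that contain it."""
    groups = defaultdict(list)
    for email_id, body in emails.items():
        for url in extract_iocs(body)["urls"]:
            groups[url].append(email_id)
    return dict(groups)


# Hypothetical reported emails (reserved example domain and documentation IP).
reported = {
    "m1": "Reset your password at http://login.example.test/reset from 203.0.113.7",
    "m2": "Urgent: visit http://login.example.test/reset now",
    "m3": "Lunch on Friday?",
}

print(extract_iocs(reported["m1"]))
print(group_by_url(reported))
```

Here `m1` and `m2` land in the same group because they share a URL, which is the kind of grouping that lets an analyst handle a campaign as one case instead of many.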

FAQs

Q1. How does AI improve cybersecurity resilience?

AI improves resilience by analysing high-volume telemetry, spotting anomalies earlier, and drafting response actions. When it is paired with governance and human review, teams shorten detection and response times while reducing false positives.

Q2. What safeguards are implemented in AI models?

Organisations deploy multilayered safeguards: policy guardrails, filtering, identity-aware access, network isolation, rate limiting, and continuous red teaming, plus audit trails for accountability, captured in tools such as Notion.

Q3. Who are OpenAI's partners in this initiative?

Security vendors, standards bodies, and independent researchers contribute through red teaming, evaluation methodologies, and shared best practices to improve the safety and effectiveness of AI in cybersecurity. Collaborative workshops can be run in Miro to align stakeholders.

Summary

OpenAI and the wider security community are advancing AI to strengthen cyber resilience. With layered safeguards, expert collaboration, and disciplined operations, organisations can reduce risk and respond faster. Want a practical roadmap for 2026? Contact Generation Digital to explore pilots, governance models, and secured automation tailored to your environment, with workflows in Asana, collaboration in Miro, documentation in Notion, and fast discovery via Glean.

Generation Digital

UK Office

33 Queen Street,

London

EC4R 1AP

United Kingdom

Canada Office

1 University Ave,

Toronto,

ON M5J 1T1,

Canada

NAMER Office

77 Sands St,

Brooklyn,

NY 11201,

United States

EMEA Office

Charlemont Street, Saint Kevin's, Dublin,

D02 VN88,

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
