Open-Weight AI for Global Enterprises - Avoiding the ‘Off-Switch’ Problem

Artificial Intelligence

Jan 30, 2026

Open-weight AI lets enterprises run intelligence like electricity: portable, resilient and under your control. By adopting models with downloadable weights, you reduce vendor lock-in, meet regional data-residency needs, and keep critical services available—even if a provider changes terms, throttles access or switches off an API.

Why this matters now

The centre of gravity for enterprise AI is shifting from “renting intelligence” via a single API to controlling intelligence across your own infrastructure. Executive teams want:

Portability across clouds and on-prem.

Assurance that no single vendor can degrade or revoke access.

Compliance with regional data laws and sector-specific regulations.

Open-weight models—where you can download and run the weights—give technology leaders new leverage. In practice: run the same model in your preferred region today, move it to another jurisdiction tomorrow, or replicate it across sites for resilience.

What we mean by “open-weight” (and what we don’t)

Open-weight: You can obtain the model weights and run them anywhere (VPC, on-prem, edge). Licences vary; the key is operational control and portability.

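Open source: Weights and source code are released under a licence that permits use, modification and redistribution; stricter definitions also expect disclosure of training code and data. Some open-weight models meet this bar, but many ship under custom licences with usage restrictions.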

Closed API: Access only via vendor endpoint; no self-hosting.

Outcome: treat AI like critical infrastructure—not a single point of failure.

Global residency, sovereignty and compliance

Data residency: Major clouds provide regional residency controls so personal data and telemetry can remain within designated jurisdictions when required.

Sovereign & restricted-operator options: Growing offerings across providers limit operator access and support stricter localisation and customer-managed keys.

Regulatory alignment: Privacy and AI governance obligations are converging worldwide (e.g., GDPR in the EU, CCPA/CPRA in California, LGPD in Brazil, PDPA in Singapore). Open-weight helps with operational controls (location, access, auditability); you still need governance and risk management.

Open-weight vs closed AI: quick comparison

| Criteria | Open-weight model | Closed API model |
|---|---|---|
| Control & availability | Self-host anywhere; no vendor off-switch | Dependent on provider SLAs, policy & pricing |
| Data locality | Full control; run in required regions | Constrained by vendor regions & controls |
| Compliance evidence | Easier to evidence location, access boundaries, change control | Relies on vendor attestations and contracts |
| Cost model | Infra + inference; stable unit economics at scale | Opex per-token; exposure to price changes |
| Performance tuning | Fine-tune, distil, specialise | Limited to provider features and guardrails |
| Vendor leverage | High – interchangeable | Low – switching costs high |

Where open-weight shines in the enterprise

Knowledge search & RAG: Keep embeddings, context windows and execution inside your tenancy; minimise egress and simplify legal review.

Process automation & agents: Self-hosting reduces latency and enables offline/air-gapped modes for sensitive workflows.

Multimodal: Run speech, image reasoning and code generation close to your data and applications, without moving data across borders.

Cost governance: Predictable unit costs for high-volume inference (contact centres, document processing, developer copilots).

A pragmatic adoption roadmap (RAG-first, tune later)

Phase 1 – Prove value with RAG

Stand up retrieval-augmented generation on open-weight text models.

Use regional vector stores that meet your residency needs; log prompts/responses for audit.

Add guardrails (redaction, content filters) + human-in-the-loop review.
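To make the Phase 1 guardrail and audit steps concrete, here is a minimal Python sketch that redacts obvious identifiers before a prompt/response pair is written to an audit log. The regular expressions, the `redact` and `audit_record` helpers, and the `region` field are illustrative assumptions, not a complete PII detector; a production system would likely use a dedicated detection library and a reviewed pattern set.

```python
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only: they catch common email and phone shapes, not all PII.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def audit_record(prompt: str, response: str, region: str) -> str:
    """Build one JSON audit line; only redacted text is stored."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "region": region,  # hypothetical label for where inference ran
        "prompt": redact(prompt),
        "response": redact(response),
    })


print(redact("Contact jane.doe@example.com or +44 20 7946 0958 today"))
```

Redacting before logging, rather than after, keeps raw identifiers out of the audit store entirely, which simplifies retention and access reviews.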

Phase 2 – Specialise

Fine-tune on domain data (tickets, policies, SOPs).

Introduce small, specialised models (reasoning, coding, speech) where they beat a single large model.

Phase 3 – Industrialise

Standardise evals (helpfulness, safety, bias) and release cadence.

Build N+1 plans: multi-model, multi-region, offline kits.

Align governance to applicable regulations and internal policies.

Model options to evaluate (from cloud to edge)

General-purpose LLMs (open-weight): reasoning, multilingual; great for RAG and agents.

Coding models (open-weight): tuned for repos and CI; ideal for internal dev copilots.

Speech models (open-weight): high-accuracy STT for contact centres and meetings.

Compact/edge models: run on constrained hardware for field/kiosk scenarios.

Tip: treat models as components. Benchmark three per use-case and keep two alternates warm for portability.
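One way to act on the tip above is a small harness that scores every candidate on the same cases and ranks them. The sketch below is an assumption-laden illustration: the models are stub functions standing in for real inference clients, and the substring-match metric is a placeholder for whatever task-specific eval you adopt.

```python
from typing import Callable, Dict, List, Tuple

EvalCase = Tuple[str, str]  # (prompt, substring expected in the answer)


def score(model: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Fraction of cases whose answer contains the expected substring."""
    hits = sum(1 for prompt, expected in cases
               if expected.lower() in model(prompt).lower())
    return hits / len(cases)


def rank(models: Dict[str, Callable[[str], str]],
         cases: List[EvalCase]) -> List[Tuple[str, float]]:
    """Score every candidate on the same cases, best first."""
    results = [(name, score(fn, cases)) for name, fn in models.items()]
    return sorted(results, key=lambda r: r[1], reverse=True)


# Stub candidates (hypothetical); swap in calls to your hosted models.
ANSWERS = {"Capital of France?": "Paris", "2+2?": "4"}
candidates = {
    "model_a": lambda p: "Paris",
    "model_b": lambda p: ANSWERS.get(p, ""),
    "model_c": lambda p: "unknown",
}
cases = list(ANSWERS.items())

ranking = rank(candidates, cases)
primary, alternates = ranking[0], ranking[1:3]
print(primary, alternates)
```

Keeping the eval cases fixed across candidates is what makes the alternates genuinely interchangeable: each has been measured against the same bar as the primary.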

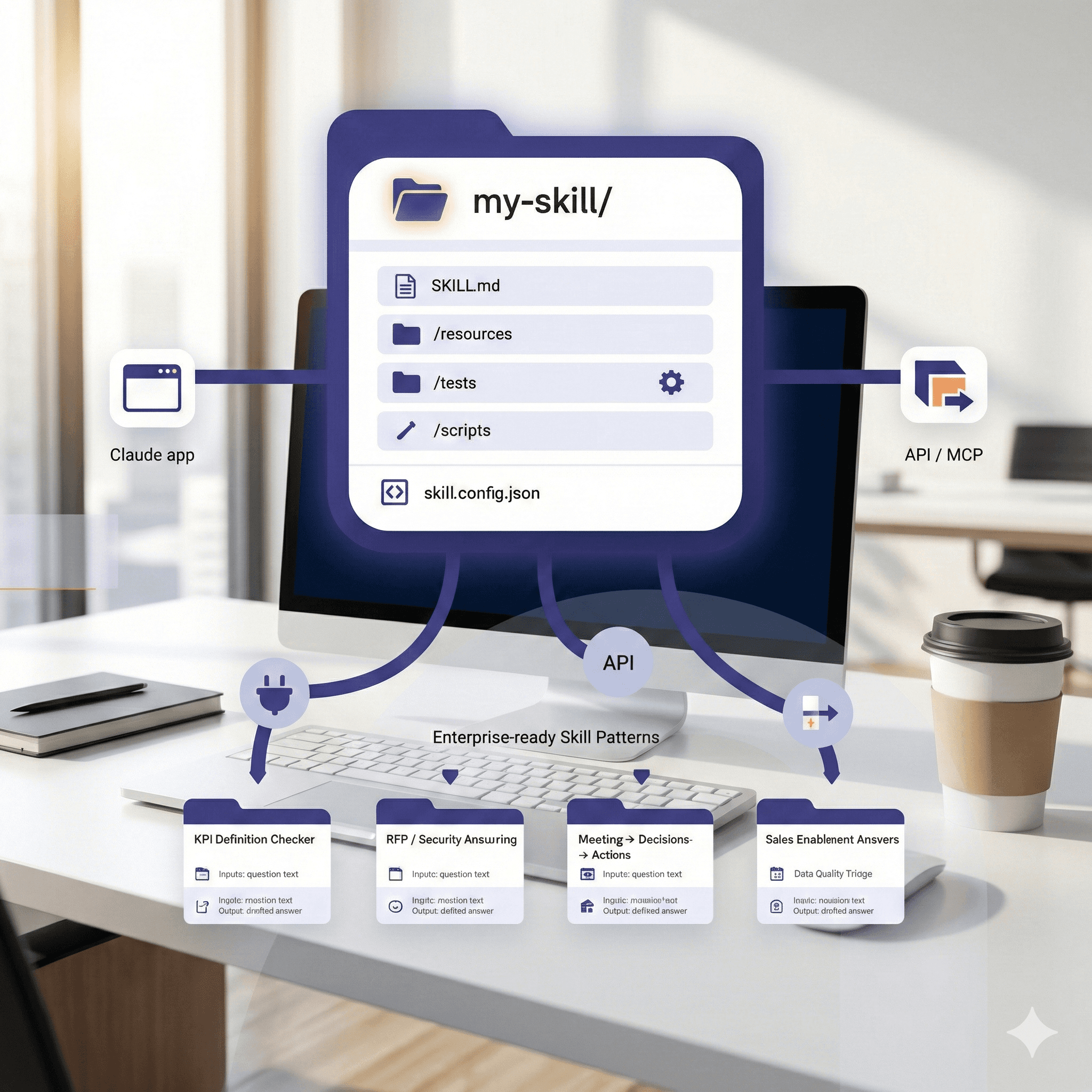

Architecture patterns we recommend

Residency-by-design: Deploy in required regions; enforce KMS-backed encryption, private networking, and customer-managed keys.

Isolation & least privilege: Separate inference clusters per data domain; replace standing access with just-in-time (JIT) elevation backed by audit trails.

Eval & monitoring: Automate pre-release and in-prod evals; track drift and regressions.

Failover: Keep a warm standby model (different vendor/family) behind a lightweight broker; run periodic chaos tests.
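The failover pattern can be sketched as a broker that tries backends in priority order. This is a minimal illustration under stated assumptions: `Backend`, `route` and the two stub functions are hypothetical names, and a real broker would add timeouts, health checks and narrower error handling.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Backend:
    """One model endpoint; `generate` wraps whatever inference client you use."""
    name: str
    generate: Callable[[str], str]


class AllBackendsFailed(RuntimeError):
    """Raised when no backend in the list could answer."""


def route(prompt: str, backends: Sequence[Backend]) -> Tuple[str, str]:
    """Try backends in order; return (backend_name, completion) from the first success."""
    errors: List[str] = []
    for backend in backends:
        try:
            return backend.name, backend.generate(prompt)
        except Exception as exc:  # sketch only: catch specific errors in practice
            errors.append(f"{backend.name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


def primary_stub(prompt: str) -> str:
    # Simulated outage, as a chaos test might inject.
    raise TimeoutError("primary unavailable")


def standby_stub(prompt: str) -> str:
    return f"standby answer to: {prompt}"


served_by, answer = route(
    "ping",
    [Backend("primary", primary_stub), Backend("standby", standby_stub)],
)
print(served_by, answer)
```

Because callers only see `route`, swapping the standby for a model from a different family does not touch application code, which is the point of keeping the broker lightweight.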

Risks & mitigations

Licence ambiguity → Vet for commercial use/redistribution; add licence review to intake.

Model drift → Lock versions; snapshot weights; document data lineage and fine-tune sets.

Prompt/data leakage → Redact PII; isolate logs; rotate keys; maintain clear data-handling SOPs.

Shadow AI → Provide sanctioned, high-quality options so teams don’t reach for risky tools.
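For the "lock versions; snapshot weights" mitigation, one simple approach is a checksum manifest stored alongside each snapshot. The sketch below assumes weights live as files in a directory; `build_manifest`, `verify` and the manifest layout are illustrative choices, not a standard format.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large weight files are not loaded into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(model_dir: Path, version: str) -> dict:
    """Record a SHA-256 digest for every file under the snapshot directory."""
    files = {
        p.relative_to(model_dir).as_posix(): sha256_file(p)
        for p in sorted(model_dir.rglob("*")) if p.is_file()
    }
    return {"version": version, "files": files}


def verify(model_dir: Path, manifest: dict) -> list[str]:
    """Return relative paths that are missing or whose digest no longer matches."""
    bad = []
    for rel, expected in manifest["files"].items():
        path = model_dir / rel
        if not path.is_file() or sha256_file(path) != expected:
            bad.append(rel)
    return bad


# Demo on a throwaway directory standing in for a model snapshot.
with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp)
    (model_dir / "weights.bin").write_bytes(b"\x00" * 1024)
    manifest = build_manifest(model_dir, version="demo-1")
    clean = verify(model_dir, manifest)
    (model_dir / "weights.bin").write_bytes(b"\x01" * 1024)  # simulate a silent change
    tampered = verify(model_dir, manifest)

print(json.dumps({"clean": clean, "tampered": tampered}))
```

Running `verify` at deploy time gives change control a concrete check: a model version is only promoted if its files match the manifest that was reviewed.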

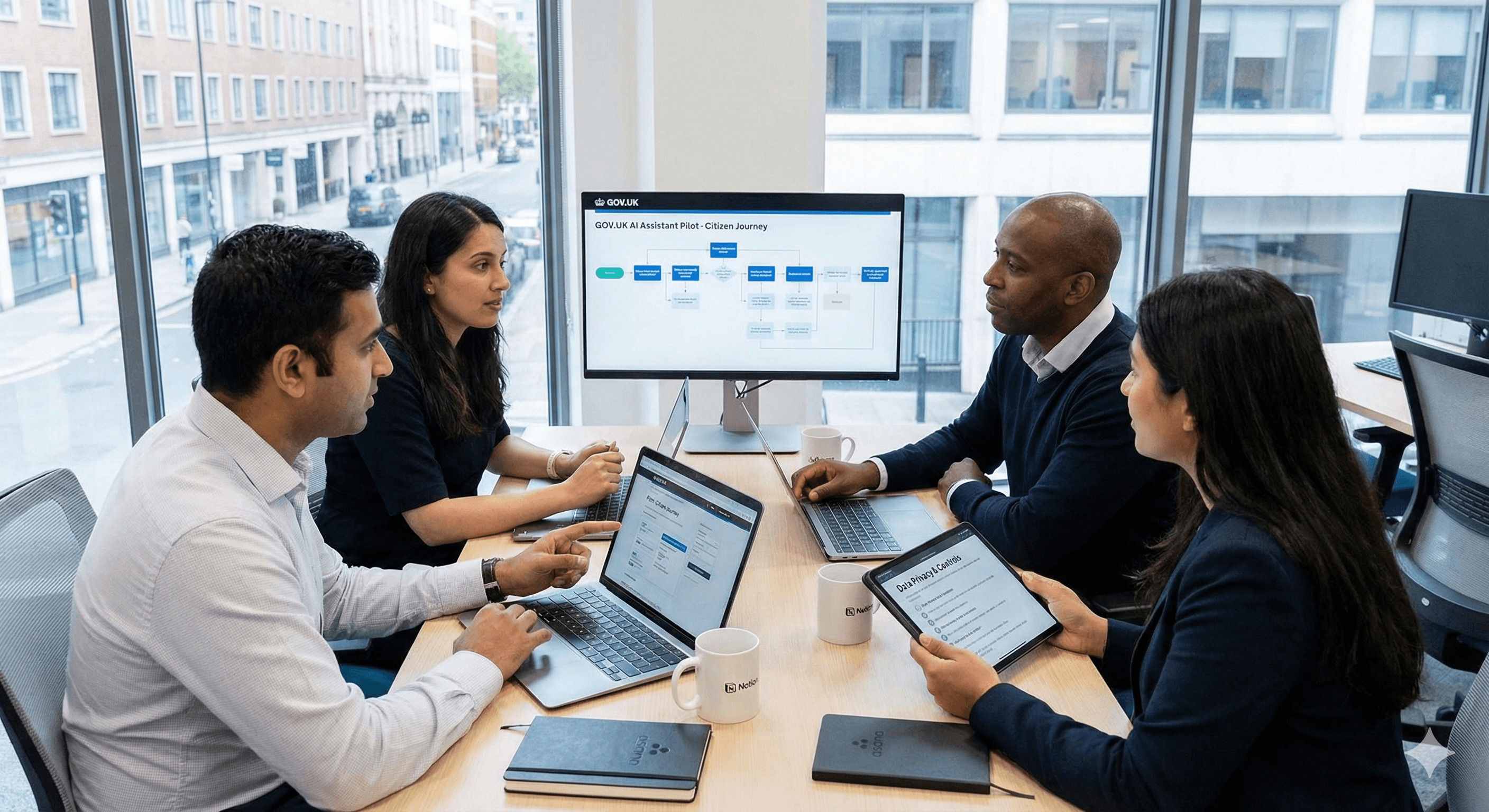

What Generation Digital delivers

Assessment & strategy: Business case, risk posture, and a 90-day roadmap for open-weight adoption.

Regional deployment: Landing zones across major clouds, networking, IAM, KMS, monitoring.

RAG accelerators: Search-quality tuning, eval harnesses, citation UX.

Ops & governance: Model registry, change control, PIA templates, and policy alignment.

Let’s talk — we’ll benchmark models against your data and ship a production RAG pilot in 4–6 weeks.

FAQs

Is open-weight “safer” than closed APIs?

Safer isn’t automatic—but control is. With open-weight you decide where the model runs, who can access it, and how it’s updated—making compliance and resilience easier to evidence.

Will running models ourselves increase cost?

At small volumes, APIs are fine. At scale, self-hosting often wins on unit cost and reduces exposure to price changes or rate-limits. Many enterprises blend both.
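A rough break-even calculation shows where "at scale" begins. The sketch assumes a simple model: self-hosting has a fixed monthly cost plus a small marginal cost per million tokens, while the API charges a flat price per million tokens. The figures in the example are placeholders, not real prices.

```python
def breakeven_mtok_per_month(fixed_monthly_cost: float,
                             self_host_cost_per_mtok: float,
                             api_price_per_mtok: float) -> float:
    """Monthly volume, in millions of tokens, above which self-hosting is cheaper.

    Returns infinity when the API is no more expensive per token than
    self-hosting, because the fixed cost is then never recovered.
    """
    saving_per_mtok = api_price_per_mtok - self_host_cost_per_mtok
    if saving_per_mtok <= 0:
        return float("inf")
    return fixed_monthly_cost / saving_per_mtok


# Placeholder inputs: 12,000 fixed per month, 0.50 vs 4.50 per million tokens.
volume = breakeven_mtok_per_month(12_000, 0.5, 4.5)
print(f"Break-even at {volume:,.0f} million tokens per month")
```

Below that volume the API is cheaper under these assumptions, which is one reason blended deployments are common.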

How does this align with global regulations?

Open-weight helps with operational controls (location, access, audit). You still need risk management, data governance, and transparency aligned to your jurisdictions and use-cases.

Can we avoid lock-in completely?

Largely, though some dependence on hardware and cloud providers remains. Design for interchangeability: abstract your prompt/eval layer, keep multiple models viable, and enforce portability in contracts.
