Pentagon Reviews Anthropic Deal: AI Safeguards Clash

Anthropic

17 Feb 2026

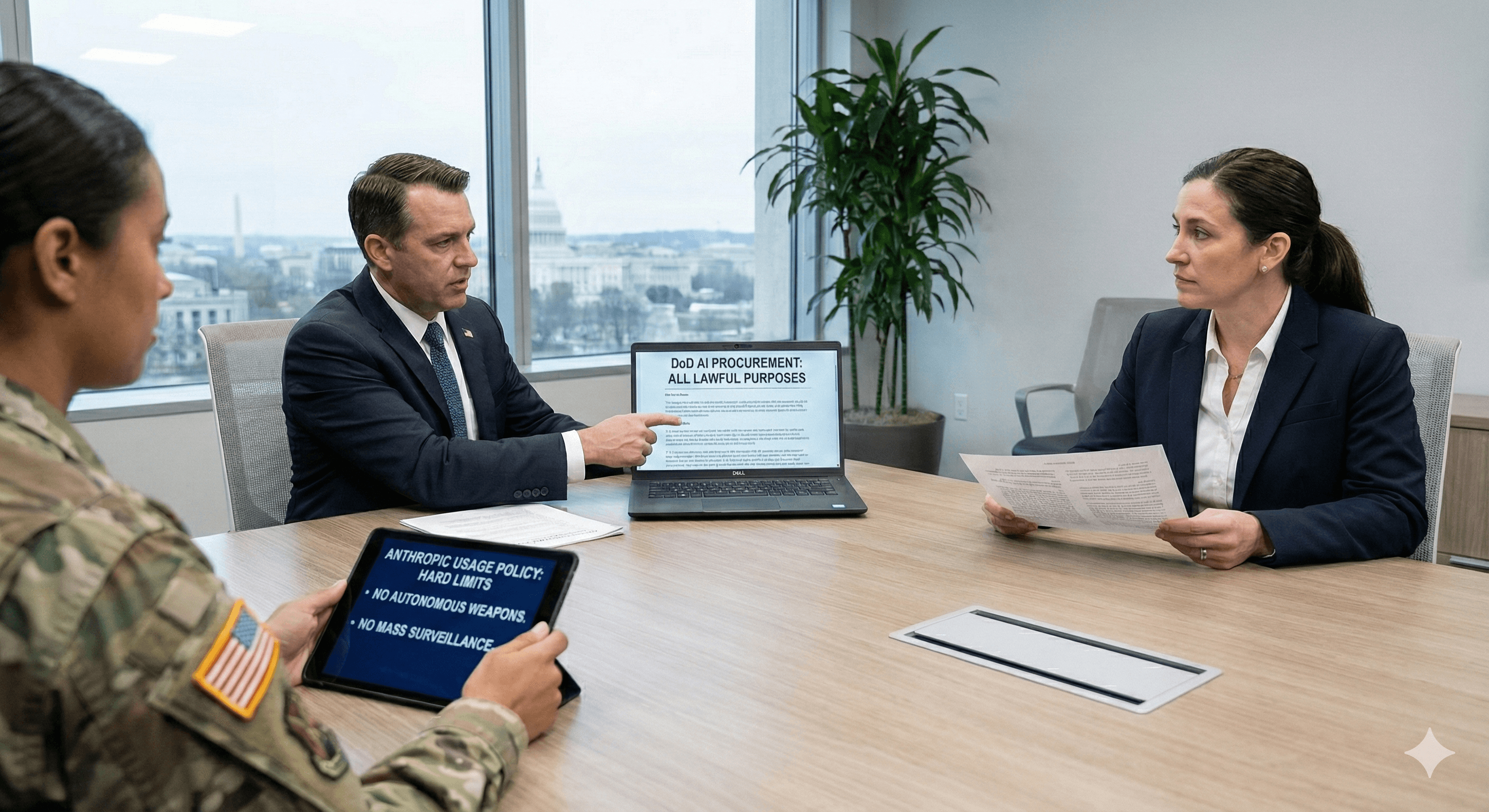

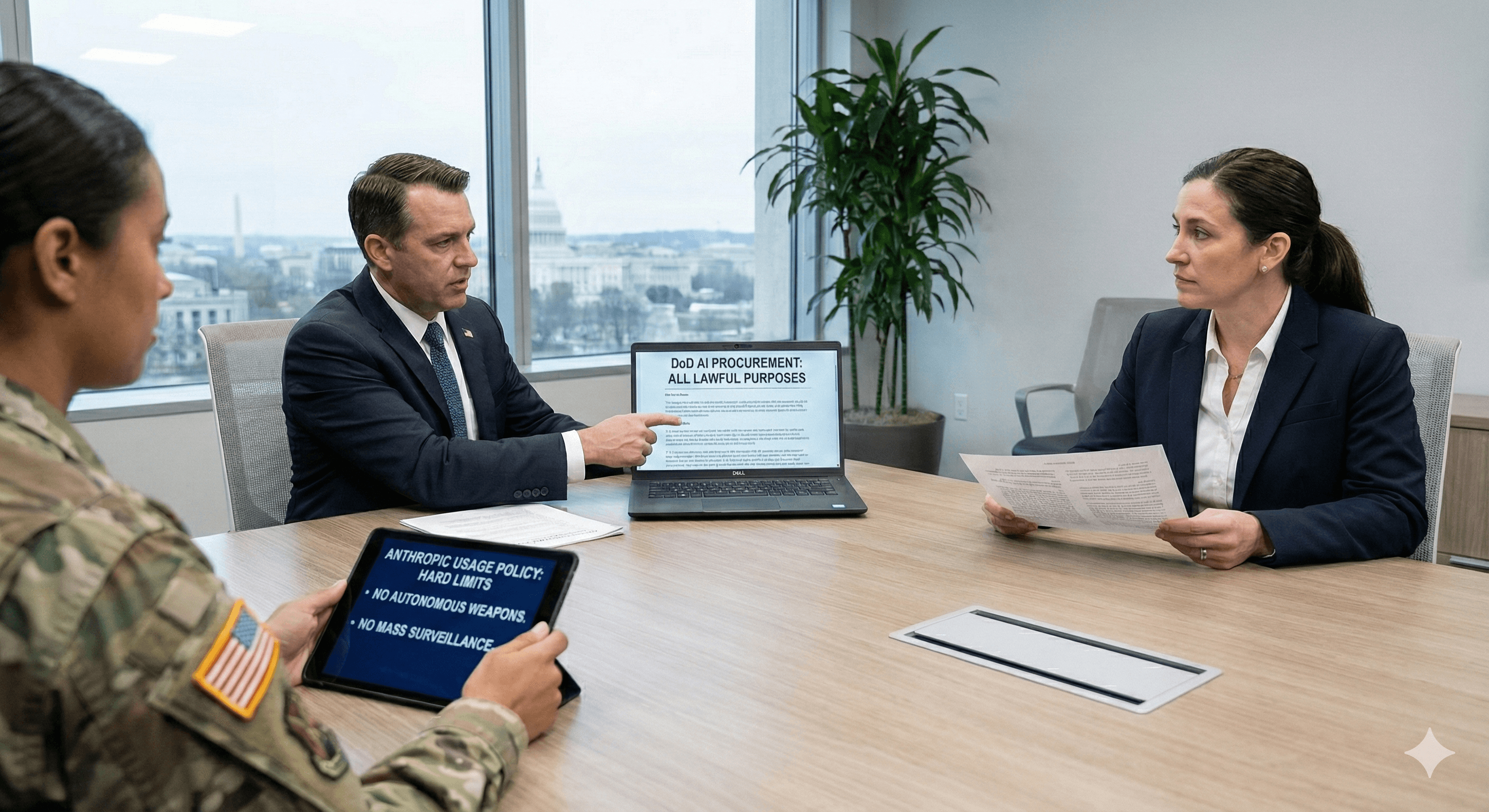

The Pentagon is reportedly reviewing its relationship with AI company Anthropic after disagreements over how Claude can be used in military contexts. Officials want broader “all lawful purposes” usage, while Anthropic has stated hard limits around fully autonomous weapons and mass domestic surveillance. The outcome could shape how AI safeguards are treated in government procurement.

A reported dispute between the US Department of Defense and Anthropic is becoming a test case for a question many organisations now face: who controls how a powerful AI system may be used once it’s embedded in critical workflows?

Multiple outlets report that the Pentagon is reviewing its relationship with Anthropic, the maker of Claude, following tensions over usage terms and safeguards. The Pentagon is said to be pushing AI providers to allow use of their models for “all lawful purposes”, while Anthropic has been described as resisting that approach and maintaining hard limits, including around fully autonomous weapons and mass domestic surveillance.

What’s happening

According to reporting based on Axios, the Pentagon has been in negotiations with Anthropic over how Claude can be used within defence environments. The sticking point is the scope of permitted use: the Pentagon wants broad latitude, while Anthropic’s policies emphasise restrictions and safeguards for high‑risk applications.

Some reports say the Pentagon has threatened to end a contract worth around $200 million if the dispute can’t be resolved.

Why this matters beyond defence

Even if you don’t work anywhere near government, this story matters because it’s a live example of a wider shift:

Procurement is becoming governance. Contracts increasingly include usage clauses, safety obligations, and audit expectations.

Policy meets product reality. AI providers may have safety policies that don’t neatly align with every customer’s operational demands.

Regulated sectors are watching. Healthcare, finance, education and critical infrastructure buyers often need the same clarity: “What’s allowed, what’s prohibited, and who is accountable?”

The core tension: “all lawful purposes” vs hard limits

In TechCrunch’s summary of the Axios reporting, the Pentagon is pushing AI companies to allow use of their technology for “all lawful purposes”, while Anthropic is pushing back. Anthropic’s position (as reported) focuses on a specific set of usage policy questions, including hard limits around fully autonomous weapons and mass domestic surveillance.

From a governance perspective, this is the main takeaway: lawfulness is not the same as safety. Many organisations now want terms that define not just legality, but acceptable risk.

What could happen next

Reporting suggests several potential outcomes:

A negotiated compromise that clarifies permitted use, adds oversight, or introduces carve‑outs for certain high‑risk applications.

A commercial separation, where the Pentagon reduces or ends its use of Anthropic tools.

A precedent for the industry, signalling to other AI vendors what government buyers may require—and what vendors may refuse.

Some coverage also references the possibility of the Pentagon labelling Anthropic a “supply chain risk”, which—if pursued—could have wider knock‑on effects for contractors and partners. (Because this is typically a severe designation, it’s best treated as a “watch item” until confirmed in official documentation.)

What organisations can learn from this

If you’re adopting AI in high‑stakes environments, three practical steps help reduce surprises:

Translate usage policy into operational rules. Don’t assume “we bought the tool” equals “we can use it any way we like”. Map vendor terms to real workflows.

Create a high‑risk use register. Define which use cases require extra approvals (e.g., surveillance, profiling, autonomous decisioning, weapons-adjacent work).

Build governance into procurement. Add contract language for auditability, incident response, model updates, and policy changes, so compliance doesn’t depend on memory or goodwill.
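For teams that want something more concrete than a spreadsheet, the high‑risk use register described above could be sketched in a few lines of Python. This is a minimal illustration, not a recommended implementation: the category names echo the examples in this article, while the `Decision` values, the `risk-committee` approver role and the `check_use_case` helper are all hypothetical names chosen for the sketch.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOWED = "allowed"
    NEEDS_APPROVAL = "needs_approval"
    PROHIBITED = "prohibited"


@dataclass(frozen=True)
class RegisterEntry:
    category: str
    decision: Decision
    approver: Optional[str] = None  # hypothetical role name, not from the article


# Illustrative entries only. Categories follow the article's examples;
# the decisions and the approver role are assumptions for this sketch.
REGISTER = {
    "document-summarisation": RegisterEntry(
        "document-summarisation", Decision.ALLOWED
    ),
    "profiling": RegisterEntry(
        "profiling", Decision.NEEDS_APPROVAL, "risk-committee"
    ),
    "autonomous-decisioning": RegisterEntry(
        "autonomous-decisioning", Decision.NEEDS_APPROVAL, "risk-committee"
    ),
    "weapons-adjacent": RegisterEntry(
        "weapons-adjacent", Decision.NEEDS_APPROVAL, "risk-committee"
    ),
    # Assumed to mirror a vendor hard limit of the kind reported in the article.
    "mass-domestic-surveillance": RegisterEntry(
        "mass-domestic-surveillance", Decision.PROHIBITED
    ),
}


def check_use_case(category: str) -> RegisterEntry:
    """Look up a use case; unknown categories default to needing approval."""
    return REGISTER.get(
        category,
        RegisterEntry(category, Decision.NEEDS_APPROVAL, "risk-committee"),
    )


if __name__ == "__main__":
    for name in ("document-summarisation", "profiling", "new-unlisted-use"):
        entry = check_use_case(name)
        print(name, entry.decision.value, entry.approver)
```

The one design choice worth noting is the default: a use case that nobody has registered is routed to approval rather than silently allowed, so gaps in the register surface as review requests instead of unreviewed deployments.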

Summary

The reported Pentagon review of its relationship with Anthropic is less about one vendor and one contract, and more about how AI will be governed once it sits inside sensitive systems. Whether the dispute ends in compromise or separation, it’s a signal that usage terms and safety constraints are now central to enterprise—and government—AI adoption.

Next steps (Generation Digital): If your team needs help turning AI policies into practical guardrails (and procurement language) that still allow innovation, we can help design a governance framework that fits real workflows.

FAQs

Q1: Why is the Pentagon reviewing its relationship with Anthropic?

Reporting indicates disagreements over how Anthropic’s Claude model may be used in defence contexts, including the scope of permitted usage and safeguards.

Q2: What is the key issue in the reported dispute?

Coverage describes tension between broader “all lawful purposes” usage requested by the Pentagon and Anthropic’s stated hard limits around fully autonomous weapons and mass domestic surveillance.

Q3: What does “supply chain risk” mean in this context?

In general terms, it refers to a designation that could restrict how contractors and partners work with a supplier. (Treat specific outcomes as unconfirmed unless officially published.)

Q4: What should organisations take from this story?

It highlights that AI adoption increasingly depends on clear usage terms, governance, and accountability—not just model performance.

Generation Digital

UK office

Generation Digital Ltd

33 Queen Street,

London

EC4R 1AP

United Kingdom

Canada office

Generation Digital Americas Inc

181 Bay Street, Suite 1800

Toronto, ON, M5J 2T9

Canada

US office

Generation Digital Americas Inc

77 Sands St,

Brooklyn, NY 11201,

United States

EU office

Generation Digital Software

Elgee Building

Dundalk

A91 X2R3

Ireland

Middle East office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company number: 256 9431 77 | Copyright 2026 | Terms and conditions | Privacy policy
