OpenAI + OpenClaw: Why Agents Are Replacing Chatbots

OpenAI

18 Feb 2026

OpenAI’s move to bring OpenClaw’s creator into the company signals a shift from chatbots to AI agents that can take actions across tools and workflows. For enterprises, the opportunity is speed and automation — but only with strong governance: secure tool access, workload boundaries, and a reliable knowledge foundation that agents can trust.

The “chatbot era” isn’t ending because conversation is no longer useful. It’s ending because conversation alone isn’t enough.

VentureBeat reports that OpenClaw, a fast-growing open-source agent project, has effectively become OpenAI’s latest strategic bet on agents: systems that don’t just answer questions, but browse, click, run code, and complete tasks across real tools. Its creator, Peter Steinberger, is joining OpenAI, while OpenClaw itself is expected to move to an independent foundation (with OpenAI sponsorship).

For IT and transformation leaders, the message is clear: the competitive edge is moving from “who has the best chat UI” to “who can safely automate work”.

Why this matters for enterprise AI strategy

Agents change the unit of value.

A chatbot improves knowledge access and drafting. An agent changes throughput: it can complete multi-step workflows — updating records, generating content, triaging requests, producing reports — without a human stitching the steps together.

But it also changes the risk profile. Recent reporting highlights security concerns serious enough that some companies have restricted or banned OpenClaw internally because of governance gaps.

So the real enterprise question becomes: how do you get the productivity upside of agents without inheriting the chaos?

What OpenClaw got right (and why it went viral)

VentureBeat describes OpenClaw’s breakout as a combination of capabilities that previously existed in fragments — tool access, code execution, “memory”, and easy integrations with messaging platforms.

Whether you buy the “end of ChatGPT era” headline or not, the underlying trend is hard to ignore: users increasingly want AI that does, not just AI that says.

The enterprise reality check: agents need guardrails (and good knowledge)

Open-source agents can move fast because they accept trade-offs enterprises can’t.

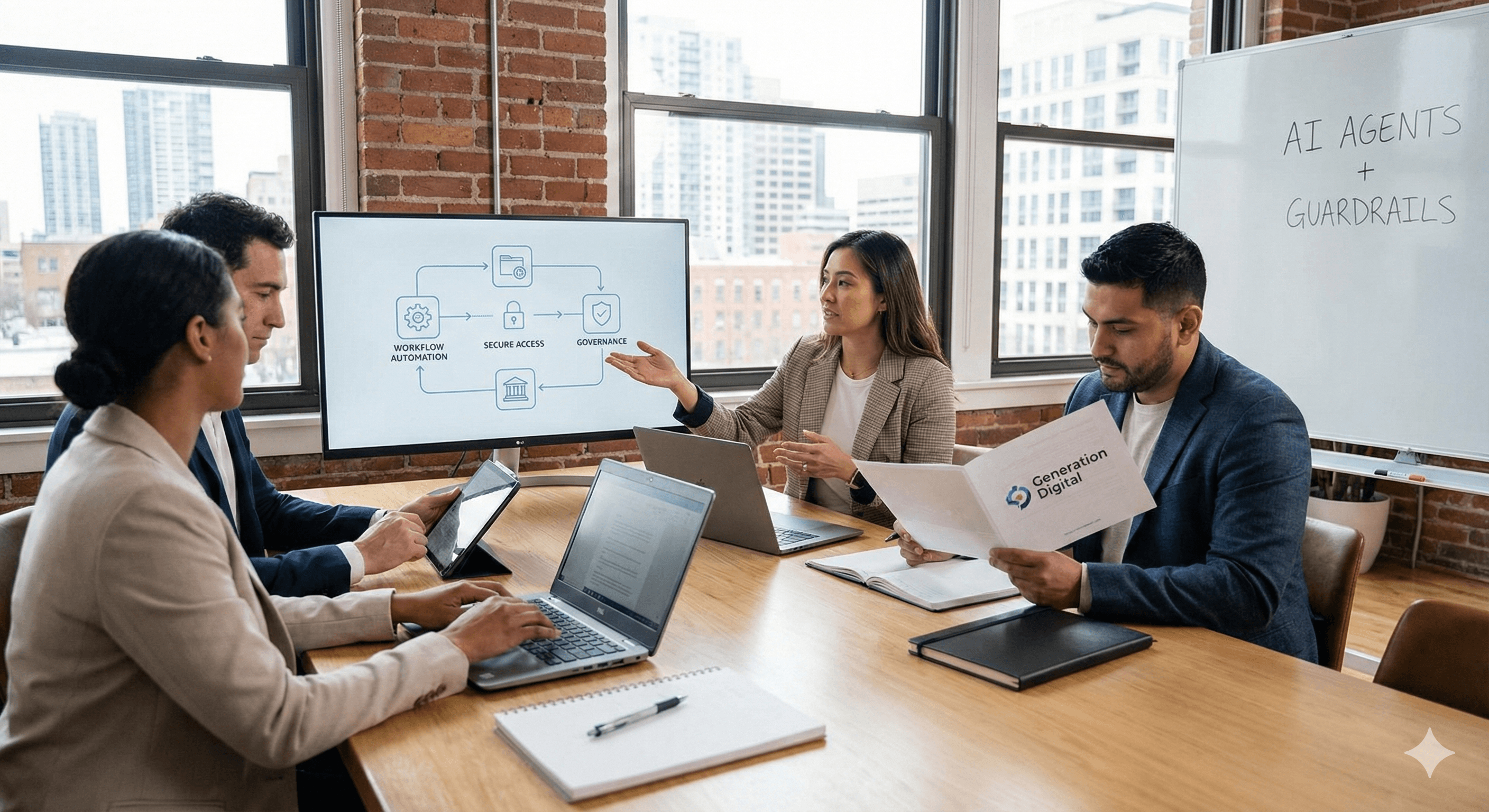

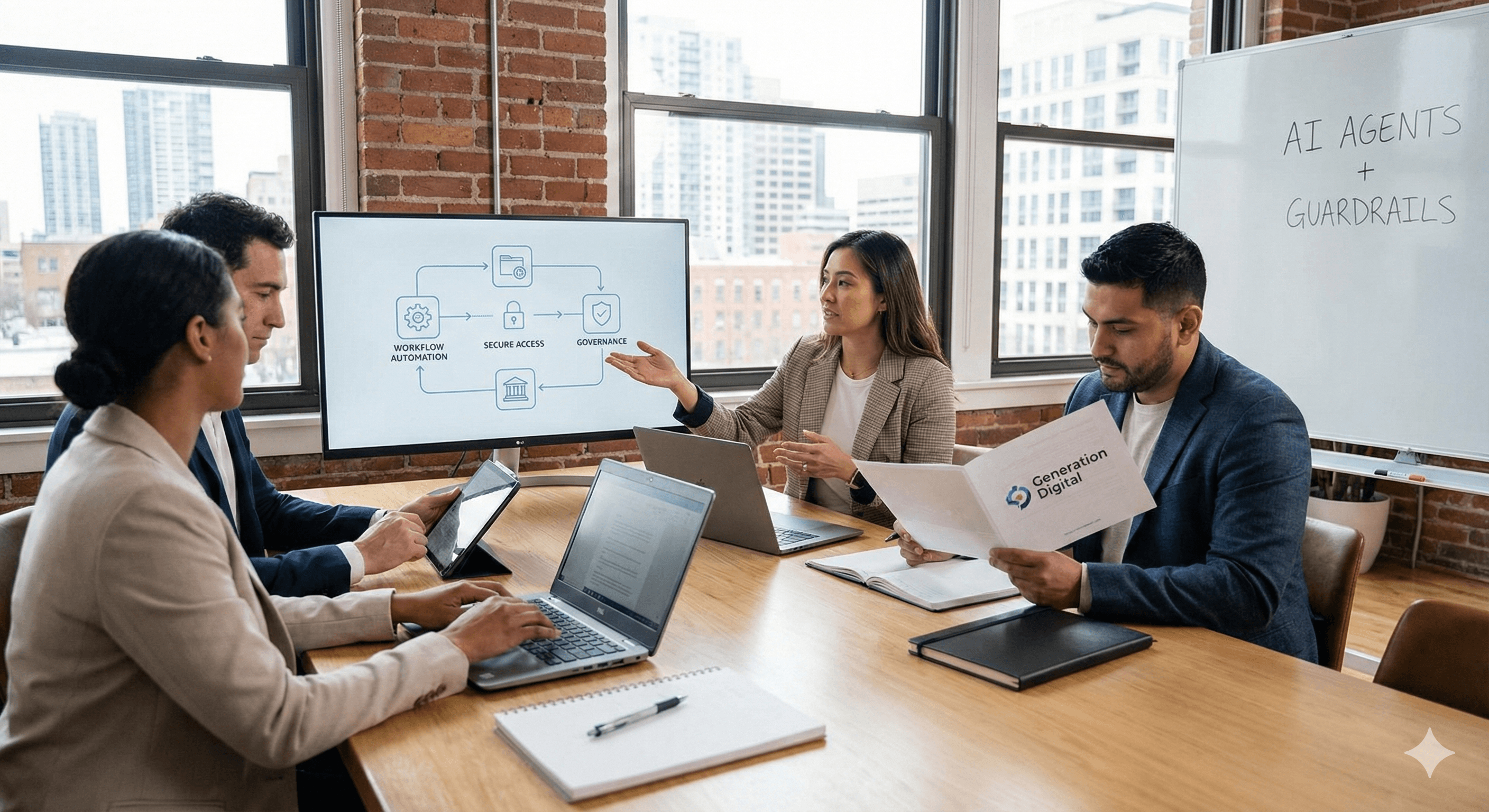

To deploy agents safely, organisations typically need four foundations:

1) Identity, permissions and audit

Agents must operate with least-privilege access, with clear audit trails. If an agent can “click anything”, it can also click the wrong thing.
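As a rough illustration of least privilege with an audit trail, the sketch below grants an agent an explicit set of scopes and records every authorisation decision, allowed or not. The names used here (`AgentIdentity`, `AuditLog`, `authorize`, and scope strings such as `tickets:label`) are hypothetical and are not taken from OpenClaw or any particular product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentIdentity:
    """Hypothetical agent identity holding only the scopes it was granted."""
    name: str
    scopes: frozenset[str]


@dataclass
class AuditLog:
    """In-memory audit trail; a real deployment would use append-only storage."""
    entries: list[dict] = field(default_factory=list)

    def record(self, agent: str, scope: str, allowed: bool) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "scope": scope,
            "allowed": allowed,
        })


def authorize(agent: AgentIdentity, scope: str, log: AuditLog) -> bool:
    """Allow the action only if the scope was explicitly granted; log either way."""
    allowed = scope in agent.scopes
    log.record(agent.name, scope, allowed)
    return allowed


log = AuditLog()
triage_bot = AgentIdentity("triage-bot", frozenset({"tickets:read", "tickets:label"}))
print(authorize(triage_bot, "tickets:label", log))   # granted scope
print(authorize(triage_bot, "tickets:delete", log))  # never granted, so denied
```

Denied attempts are logged as well as successful ones, since a pattern of refused requests is often the first sign that an agent is being steered somewhere it should not go.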

2) Tooling boundaries

Enterprises need controlled tool access: which systems can be touched, what actions are allowed, and how exceptions are handled.
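One way to picture a tooling boundary is a default-deny policy table: each system lists the actions an agent may take, and sensitive actions are routed to a human for approval. This is a minimal sketch under assumed names; `TOOL_POLICY`, `Decision`, `check_tool_call`, and the example systems are illustrative only.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


# Hypothetical policy: system -> action -> decision. Anything unlisted is denied.
TOOL_POLICY = {
    "helpdesk": {
        "read_ticket": Decision.ALLOW,
        "close_ticket": Decision.NEEDS_APPROVAL,
    },
    "crm": {"read_contact": Decision.ALLOW},
}


def check_tool_call(system: str, action: str) -> Decision:
    """Default-deny lookup: only explicitly listed system/action pairs pass."""
    return TOOL_POLICY.get(system, {}).get(action, Decision.DENY)


calls = [
    ("helpdesk", "read_ticket"),
    ("helpdesk", "close_ticket"),
    ("crm", "delete_contact"),
]
for system, action in calls:
    print(system, action, "->", check_tool_call(system, action).value)
```

The `NEEDS_APPROVAL` outcome is where exception handling lives: the agent pauses and a named owner decides, rather than the policy being quietly widened.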

3) Workload isolation

Agent workloads should be separated so failures don’t cascade — e.g., experimental agents should never share the same runtime/permissions as production-critical workflows.
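The separation rule can be checked mechanically. The sketch below assumes each agent workload is described by a tier, a runtime, and a service account (all hypothetical fields), and flags any experimental and production pair that shares either one.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of where an agent runs and which account it uses."""
    name: str
    tier: str             # "experimental" or "production"
    runtime: str          # e.g. a cluster, namespace, or VM pool
    service_account: str


def isolation_violations(workloads: list[Workload]) -> list[str]:
    """Report every cross-tier pair that shares a runtime or a service account."""
    problems = []
    for a, b in combinations(workloads, 2):
        if a.tier == b.tier:
            continue  # only cross-tier sharing is treated as a violation here
        if a.runtime == b.runtime:
            problems.append(f"{a.name} and {b.name} share runtime {a.runtime}")
        if a.service_account == b.service_account:
            problems.append(f"{a.name} and {b.name} share account {a.service_account}")
    return problems


fleet = [
    Workload("invoice-agent", "production", "prod-pool", "svc-invoice"),
    Workload("research-agent", "experimental", "sandbox-pool", "svc-sandbox"),
    Workload("prototype-agent", "experimental", "prod-pool", "svc-sandbox"),
]
for problem in isolation_violations(fleet):
    print(problem)
```

A check like this could run whenever a new agent is registered, so that a prototype never lands in a production runtime by accident.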

4) Knowledge foundations that don’t collapse under scale

Agents are only as reliable as the knowledge they retrieve. If policies, SOPs, pricing, product details or processes are fragmented across tools, agents will either:

retrieve inconsistent information, or

act on outdated information.
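Both failure modes can be surfaced before an agent ever retrieves anything. The sketch below is a simplified audit that assumes knowledge has been exported as records with a topic, a value, a source, and a last-reviewed date; the `Fact` structure, the 180-day threshold, and the sample data are all invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Fact:
    """Hypothetical record: one value for one topic, as stated by one source."""
    topic: str
    value: str
    source: str
    last_reviewed: date


def audit_knowledge(facts: list[Fact], today: date, max_age_days: int = 180):
    """Return (topics with conflicting values, sources overdue for review)."""
    values_by_topic = defaultdict(set)
    for fact in facts:
        values_by_topic[fact.topic].add(fact.value)
    conflicting = sorted(t for t, vals in values_by_topic.items() if len(vals) > 1)

    cutoff = today - timedelta(days=max_age_days)
    stale = sorted(f.source for f in facts if f.last_reviewed < cutoff)
    return conflicting, stale


facts = [
    Fact("refund_window", "30 days", "wiki/policies", date(2026, 1, 10)),
    Fact("refund_window", "14 days", "shared-drive/faq.docx", date(2024, 6, 1)),
    Fact("support_hours", "9-17 GMT", "wiki/support", date(2025, 12, 1)),
]
conflicting, stale = audit_knowledge(facts, today=date(2026, 2, 18))
print("conflicting topics:", conflicting)
print("stale sources:", stale)
```

In this sample, the refund policy appears with two different values, and one of the sources has not been reviewed for well over a year: exactly the kind of fragmentation an agent would act on without noticing.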

This is exactly where Generation Digital comes in.

How Generation Digital helps teams prepare for the agent shift

Agents aren’t a plug-in; they’re a new way of working.

We help organisations do the hard, high-leverage work that determines whether “agents” becomes a competitive advantage or a security incident:

align stakeholders on the right use cases (and what not to automate yet)

build governance, ownership, and operational controls

prepare knowledge for reliable retrieval (the bit most teams skip)

design adoption so teams actually change habits — not just tools

And when knowledge management (KM) is the blocker, we often recommend Notion as a strong foundation: flexible enough to capture real work, structured enough to become a usable source of truth, and practical for scaling templates, playbooks and SOPs across teams.

Practical next steps (what to do this quarter)

If your leadership is asking about agents, start here:

Pick one workflow with clear ROI (support triage, onboarding, internal reporting, sales enablement).

Fix the knowledge source first (one canonical place, owners, templates, review cadence).

Define safe tool access (least privilege, auditability, approvals where needed).

Pilot in a sandbox (separate environments and accounts).

Measure outcomes (cycle time, error rate, adoption) — not “number of prompts”.
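For the measurement step, the three outcome metrics named above can be computed from a simple task log. The sketch below assumes a hypothetical `Task` record per completed unit of work and a known team size; the field names and sample values are illustrative, not a prescribed schema.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Task:
    """Hypothetical pilot record for one completed unit of work."""
    user: str
    minutes_to_complete: float
    had_error: bool
    used_agent: bool


def pilot_metrics(tasks: list[Task], team_size: int) -> dict[str, float]:
    """Summarise cycle time, error rate and adoption for agent-assisted tasks."""
    agent_tasks = [t for t in tasks if t.used_agent]
    if not agent_tasks:
        return {"median_cycle_minutes": 0.0, "error_rate": 0.0, "adoption": 0.0}
    return {
        "median_cycle_minutes": median(t.minutes_to_complete for t in agent_tasks),
        "error_rate": sum(t.had_error for t in agent_tasks) / len(agent_tasks),
        # Adoption here means the share of the team that used the agent at least once.
        "adoption": len({t.user for t in agent_tasks}) / team_size,
    }


tasks = [
    Task("ana", 12.0, False, True),
    Task("ana", 8.0, True, True),
    Task("ben", 10.0, False, True),
    Task("cy", 45.0, False, False),
]
print(pilot_metrics(tasks, team_size=4))
```

Comparing these figures against the same workflow's pre-pilot baseline is what turns them into evidence; on their own they only describe the pilot.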

Summary

OpenAI’s OpenClaw move is another signal that the industry is shifting towards agents. The opportunity is real — but so are the security and governance implications. Enterprises that win will be the ones that build the foundations: clean knowledge, controlled tool access, and a change programme that gets teams aligned on how work will run in an agent-enabled world.

FAQs

What is OpenClaw?

OpenClaw is an open-source AI agent project designed to take actions across tools and environments, not just answer questions. It gained rapid developer adoption in late 2025 and early 2026.

Why does OpenAI hiring its creator matter?

It suggests OpenAI is investing heavily in the agent direction — moving beyond chat interfaces towards systems that can execute tasks end-to-end.

Why are enterprises cautious about agent tools?

Because agents often require broad access to systems and data. Recent reporting highlights security concerns serious enough that some companies have restricted or banned OpenClaw.

How do we prepare for agents without increasing risk?

Start with governance (permissions/audit), knowledge foundations (one source of truth), and a controlled pilot. Don’t scale agents before you can control them.

Generation

Digital

UK Office

Generation Digital Ltd

33 Queen Street,

London

EC4R 1AP

United Kingdom

Canada Office

Generation Digital Americas Inc

181 Bay Street, Suite 1800

Toronto, ON, M5J 2T9

Canada

US Office

Generation Digital Americas Inc

77 Sands St,

Brooklyn, NY 11201,

United States

EU Office

Generation Digital Software

Elgee Building

Dundalk

A91 X2R3

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
