Scaling AI in Manufacturing: A COO's Guide to Performance (2026)

AI

15 Dec 2025

The COO thesis: value comes from the enablers, not the demo

The COO100 Survey is unequivocal: manufacturing leaders are funding AI at scale, but many underinvest in the foundations needed for lasting impact, which is precisely why pilots stall and savings evaporate after the first year. Treat AI like a production process: capacity, control, cadence.

What changes in 2026

Two realities are converging. First, boards expect plant-level productivity and quality gains to show up in the P&L, not just in slide decks. Second, the companies that report returns stand out for their operating model and their enablers: data pipelines integrated at the line, hardened MLOps, adoption rituals on the shop floor, and governance funded by value stream rather than by tool.

To scale AI in manufacturing, COOs must insist on the enablers: data/OT connectivity, MLOps, cross-functional operating models, and frontline adoption. The McKinsey COO100 survey finds high AI budgets but underinvestment in these foundations, which explains why pilots only rarely turn into plant-wide performance. Make the enablers the program.

From pilots to performance: five COO choices

1) Fund by value stream, not by use case.

Stop scattering budgets across isolated "wins". Fund a target value stream (for example, packaging OEE, overall equipment effectiveness, or FPY, first-pass yield) and tie every model, data work item, and change activity to those KPIs. Leaders who scale AI organize around strategy, talent, operating model, technology, data, and adoption, and they measure at that level.
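To make the value-stream KPIs concrete, here is a minimal sketch of how OEE and FPY might be computed from one shift of line data. It uses the widely used definition OEE = availability × performance × quality. The `ShiftRecord` fields and the sample numbers are illustrative assumptions, not figures from the survey, and this sketch counts only first-pass good units toward quality.

```python
from dataclasses import dataclass


@dataclass
class ShiftRecord:
    """One shift of line data. All field names are hypothetical."""
    planned_minutes: float      # scheduled production time
    downtime_minutes: float     # unplanned stops inside the planned time
    ideal_cycle_seconds: float  # ideal time to produce one unit
    total_units: int            # units produced, good or not
    good_first_pass: int        # units that passed without rework


def oee(r: ShiftRecord) -> float:
    """OEE = availability x performance x quality, as a fraction of 1."""
    run_minutes = r.planned_minutes - r.downtime_minutes
    if r.planned_minutes <= 0 or run_minutes <= 0 or r.total_units <= 0:
        return 0.0
    availability = run_minutes / r.planned_minutes
    performance = (r.ideal_cycle_seconds * r.total_units) / (run_minutes * 60)
    quality = r.good_first_pass / r.total_units
    return availability * performance * quality


def fpy(r: ShiftRecord) -> float:
    """First-pass yield: share of units that were good with no rework."""
    return r.good_first_pass / r.total_units if r.total_units else 0.0


# Illustrative shift: 500 planned minutes, 100 minutes of downtime,
# a 2-second ideal cycle, 9000 units produced, 8550 good first time.
shift = ShiftRecord(500, 100, 2.0, 9000, 8550)
print(f"OEE={oee(shift):.2f}  FPY={fpy(shift):.2f}")
```

Tying every model to a number computed this way is one way to keep the funding conversation at the value-stream level rather than at the level of individual use cases.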

2) Industrialize the data layer at the line.

Make the boring work non-negotiable: sensor quality, historian access, semantic models for equipment, and governed feature stores. If the data is not production-grade, the models are not either. (This is the most frequent underinvestment flagged by the survey.)
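As one illustration of what "production-grade" sensor data could mean in practice, the sketch below runs three basic checks on a time series: out-of-range values, gaps between samples, and flatlined (stuck) readings. The function names, thresholds, and sample readings are assumptions chosen for the example, not a standard or a vendor API.

```python
from typing import Dict, List, Tuple

Reading = Tuple[float, float]  # (timestamp in seconds, sensor value)


def check_sensor_series(
    readings: List[Reading],
    lo: float,
    hi: float,
    max_gap_s: float,
    flatline_n: int,
) -> Dict[str, int]:
    """Count basic quality issues in a time-ordered series.

    flatline_n (assumed >= 2) is the number of consecutive identical
    values that is treated as a stuck sensor.
    """
    out_of_range = sum(1 for _, v in readings if not (lo <= v <= hi))
    pairs = list(zip(readings, readings[1:]))
    gaps = sum(1 for (t0, _), (t1, _) in pairs if t1 - t0 > max_gap_s)

    flatlines = 0
    run = 1  # length of the current run of identical values
    for (_, prev), (_, cur) in pairs:
        if cur == prev:
            run += 1
            if run == flatline_n:  # count each stuck run once
                flatlines += 1
        else:
            run = 1
    return {"out_of_range": out_of_range, "gaps": gaps, "flatlines": flatlines}


def is_production_grade(report: Dict[str, int]) -> bool:
    """In this sketch, any issue at all fails the gate."""
    return all(count == 0 for count in report.values())


# Illustrative temperature trace with one spike, one gap, one stuck run.
trace = [(0, 20.0), (1, 20.5), (2, 20.5), (3, 20.5), (10, 150.0), (11, 21.0)]
report = check_sensor_series(trace, lo=0, hi=100, max_gap_s=5, flatline_n=3)
print(report, is_production_grade(report))
```

A real gate would likely tolerate a small issue rate per tag; the zero-tolerance rule here just keeps the example short.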

3) Treat models as assets: MLOps for OT.

Standardize edge and cloud deployment, and implement drift monitoring, rollback, and change control that your plant managers trust. Tie model releases to maintenance windows like any other asset change. High performers do this systematically.
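Drift monitoring with a rollback trigger can be sketched in a few lines. The example below uses the Population Stability Index (PSI) to compare a live feature distribution against the training reference. PSI is one common choice and is swapped in here for illustration; the article does not prescribe a method. The 0.1 and 0.25 thresholds are a commonly cited rule of thumb and should be treated as assumptions to tune per line.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index of `live` against `reference`.

    Bins are equal-width over the reference range; live values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def shares(xs: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for x in xs:
            i = int((x - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1
        eps = 1e-6  # floor so empty bins do not produce log(0)
        return [max(c / len(xs), eps) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def next_action(psi_value: float, warn: float = 0.1, rollback: float = 0.25) -> str:
    """Map a PSI value to an operational decision (thresholds are assumptions)."""
    if psi_value >= rollback:
        return "rollback"
    if psi_value >= warn:
        return "investigate"
    return "keep"


# Illustrative data: the live feature has shifted upward by 50 units.
reference = [float(x) for x in range(100)]
live = [x + 50.0 for x in reference]
print(next_action(psi(reference, live)))
```

In a plant setting, a "rollback" result would more plausibly open a change request scheduled into the next maintenance window than switch models automatically.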

4) Put adoption on the Gemba.

Rework improvement rituals (huddles, tiered meetings) so they use AI insights by default, such as quality alerts, predicted downtime, and energy anomalies, so that operators pull the tools rather than have the tools pushed on them. Adoption is a management system, not a communications plan.

5) Govern for scale, not permission.

Create an AI control tower that owns the backlog, eliminates duplication, and retires models that do not prove their return. Fund experiments, but graduate only those with verified impact on throughput, yield, cost-to-serve, and safety.
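The control-tower rules above can be expressed as a small backlog review. This is a sketch under stated assumptions: the `ModelEntry` fields, the six-month trial window, and the scale / experiment / retire labels are hypothetical and would need to match your own finance and governance definitions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ModelEntry:
    """One backlog item. All field names are hypothetical."""
    name: str
    value_stream: str
    use_case: str
    annual_benefit: float   # verified or estimated benefit, same currency as cost
    annual_run_cost: float
    impact_verified: bool   # impact on throughput, yield, cost or safety confirmed
    months_live: int


def decide(m: ModelEntry, trial_months: int = 6) -> str:
    """Graduate only verified, net-positive models; retire stale ones."""
    if m.impact_verified and m.annual_benefit > m.annual_run_cost:
        return "scale"
    if not m.impact_verified and m.months_live < trial_months:
        return "experiment"
    return "retire"


def duplicates(backlog: List[ModelEntry]) -> Dict[Tuple[str, str], List[str]]:
    """Group models solving the same use case on the same value stream."""
    groups: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for m in backlog:
        groups[(m.value_stream, m.use_case)].append(m.name)
    return {key: names for key, names in groups.items() if len(names) > 1}


# Illustrative backlog with one duplicate pair and one stale pilot.
backlog = [
    ModelEntry("vision-qa-a", "packaging", "visual QA", 400_000, 90_000, True, 14),
    ModelEntry("vision-qa-b", "packaging", "visual QA", 0, 60_000, False, 9),
    ModelEntry("energy-opt", "utilities", "energy optimization", 0, 30_000, False, 3),
]
for entry in backlog:
    print(entry.name, decide(entry))
print(duplicates(backlog))
```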

What success looks like on the shop floor

OEE, FPY, and MTBF move together, not in isolation, because models are embedded in maintenance, quality, and planning workflows, not just in dashboards.

A learning cycle every two weeks: new data, retrain, redeploy, verify. Models are treated like equipment: maintained, audited, and replaced when obsolete.
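One way to picture the retrain, redeploy, verify loop is a champion/challenger check: retrain on new data, compare both models on a holdout set, and redeploy only if the challenger is better. The sketch below is deliberately tiny. `fit_slope` is a hypothetical stand-in for a real training job, and the mean-absolute-error gate is an assumption, not a method taken from the survey.

```python
from typing import Callable, List, Tuple

Model = Callable[[float], float]
Data = List[Tuple[float, float]]  # (input, observed output) pairs


def fit_slope(data: Data) -> Model:
    """Hypothetical retraining step: least-squares line through the origin."""
    slope = sum(x * y for x, y in data) / sum(x * x for x, _ in data)
    return lambda x: slope * x


def mean_abs_error(model: Model, holdout: Data) -> float:
    return sum(abs(model(x) - y) for x, y in holdout) / len(holdout)


def run_cycle(
    champion: Model,
    retrain: Callable[[Data], Model],
    new_data: Data,
    holdout: Data,
    min_gain: float = 0.0,
) -> Tuple[Model, str]:
    """Retrain, verify on holdout, and redeploy only on a real improvement."""
    challenger = retrain(new_data)
    gain = mean_abs_error(champion, holdout) - mean_abs_error(challenger, holdout)
    if gain > min_gain:
        return challenger, "redeployed"
    return champion, "kept"


# Illustrative cycle: the process has drifted from y = 2x to y = 3x.
old_model: Model = lambda x: 2.0 * x
model, status = run_cycle(
    old_model, fit_slope,
    new_data=[(1.0, 3.0), (2.0, 6.0)],
    holdout=[(3.0, 9.0)],
)
print(status)
```

Keeping the "kept" branch explicit matters: a cycle that changes nothing is still an audited event, much like an equipment inspection that finds no fault.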

Enterprise-wide reuse: a single playbook for visual QA or energy optimization, replicated across similar lines and plants with 80% common components.

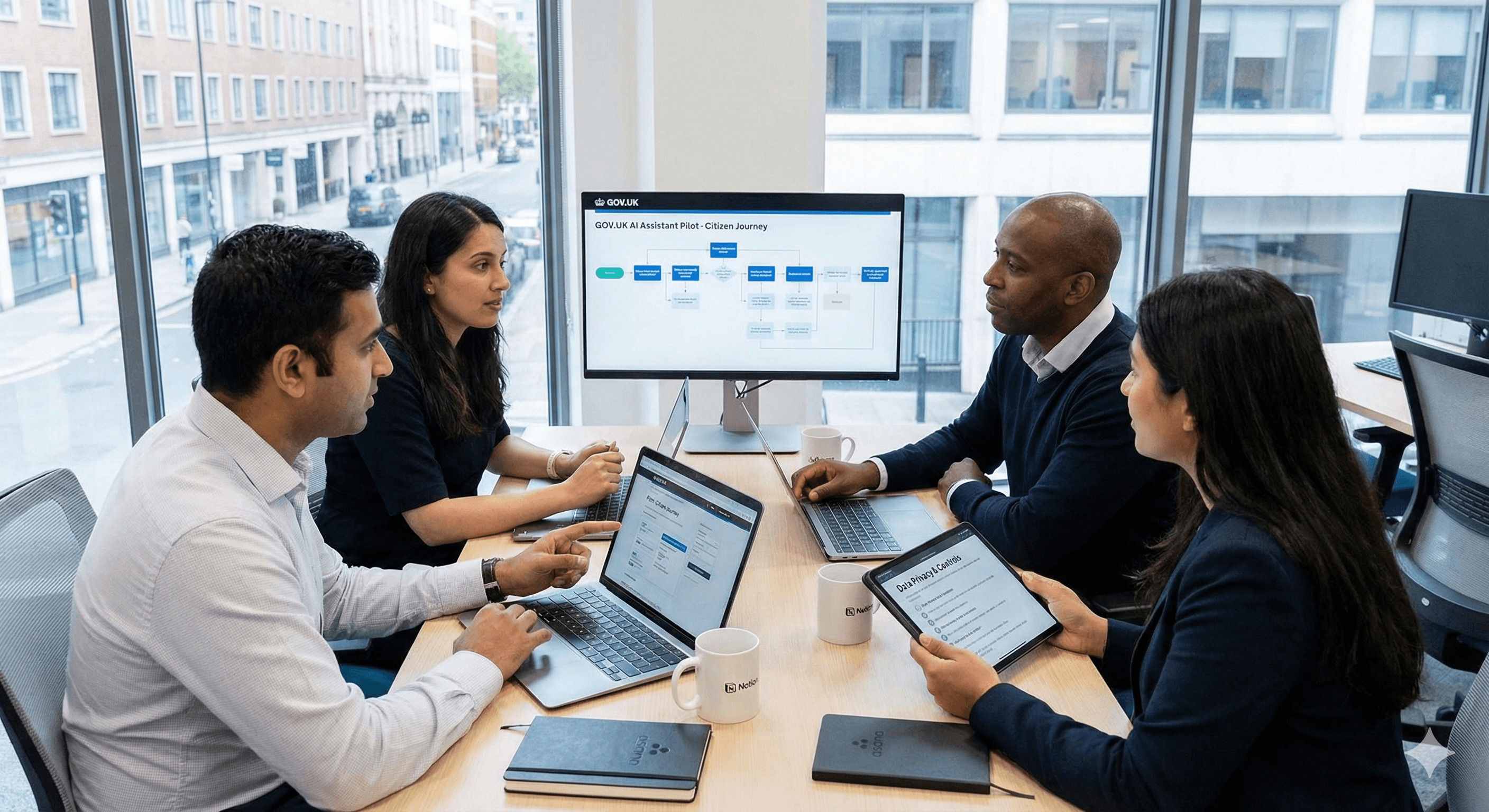

(UK note: adoption is accelerating. The UK now leads Europe in AI penetration in smart manufacturing, a sign that the ecosystem is ready if the enablers are in place.)

FAQs

Q1: What is the main benefit of AI in manufacturing?

When AI is deployed through the enablers, it raises yield, throughput, and energy efficiency at the same time, showing up in OEE, FPY, and cost per unit, not just in pilot anecdotes. (McKinsey & Company)

Q2: Why do companies underinvest in enablers?

Because use cases are visible and fundable, while data infrastructure, MLOps, and change management look like overhead. The COO100 warns that this is precisely what kills durability. (McKinsey & Company)

Q3: How can COOs ensure successful scaling?

Run AI like a production program: value-stream funding, hardened data/OT, disciplined MLOps, and adoption rituals on the shop floor. Govern with a single backlog and retire what does not pay back. (McKinsey & Company)

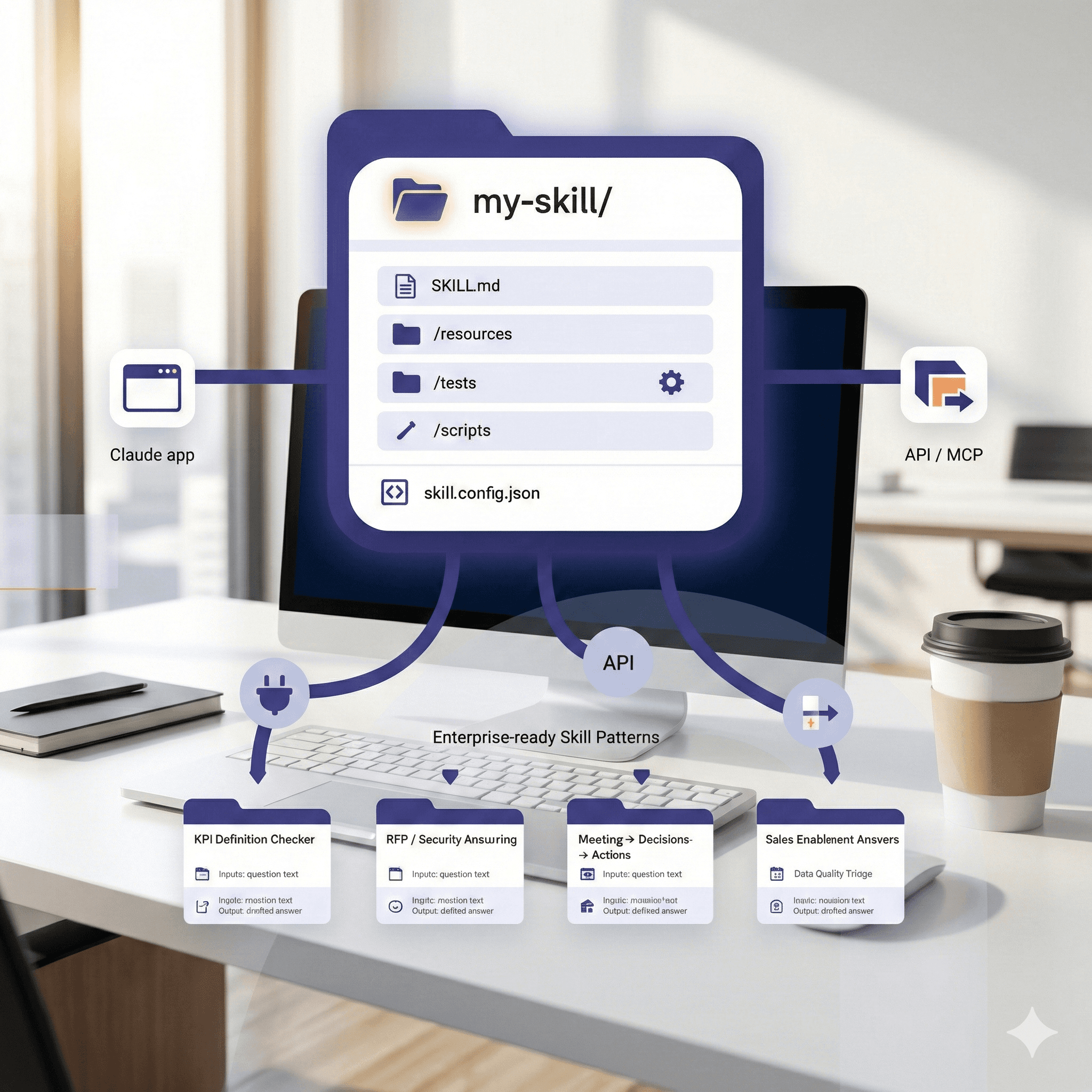

Software options

Asana for value-stream OKRs and cross-plant release trains.

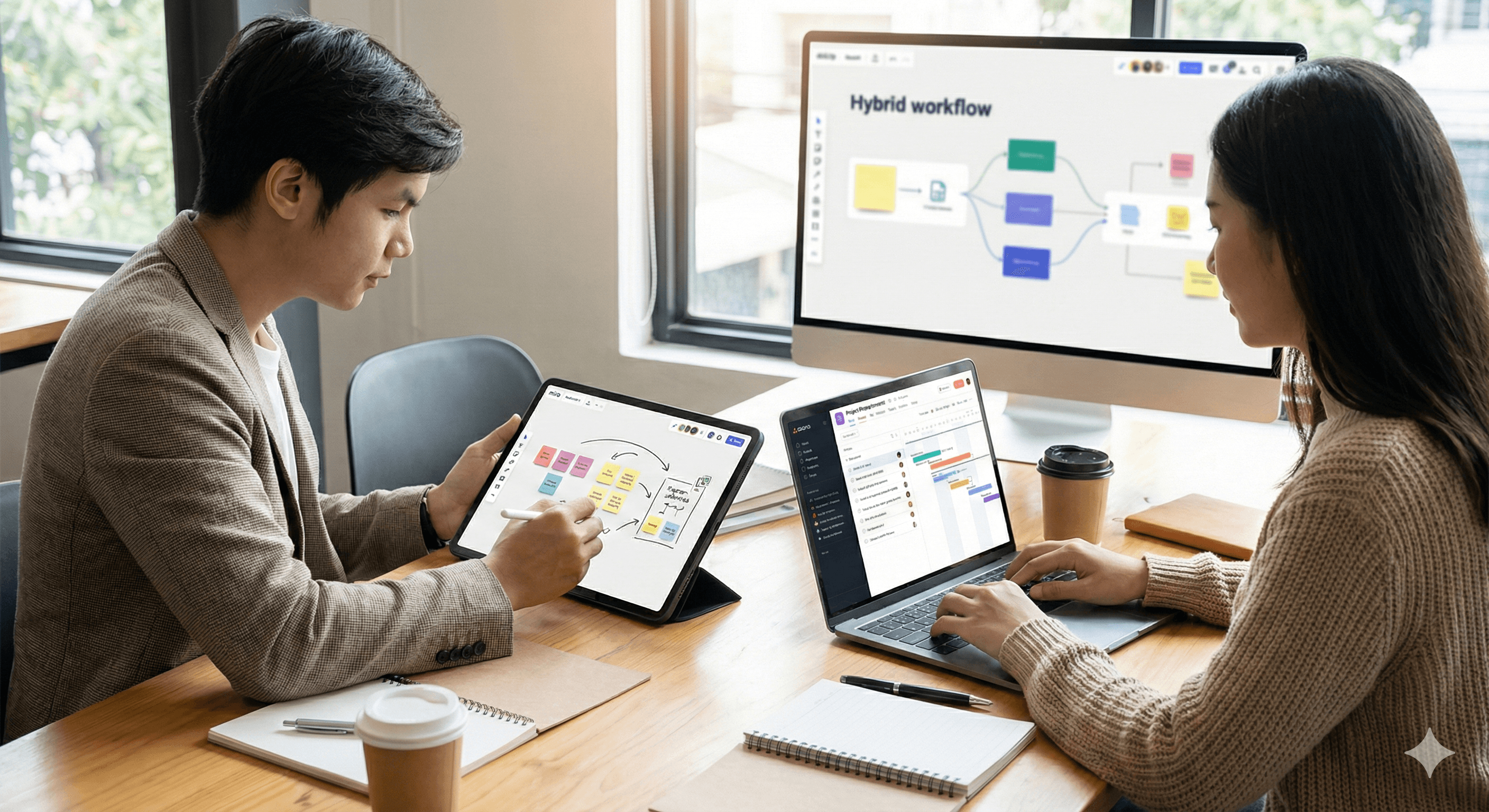

Miro for line-level data mapping and failure modes.

Notion for standard work, playbooks, and model runbooks.

Glean for permissioned access to engineering knowledge.

Next steps?

Ready to turn pilots into performance? Generation Digital helps COOs put the AI enablers in place, from data to Gemba, and build the operating model that scales across every plant.

Generation

Digital

UK office

33 Queen Street,

London

EC4R 1AP

United Kingdom

Canada office

1 University Ave,

Toronto,

ON M5J 1T1,

Canada

NAMER office

77 Sands St,

Brooklyn,

NY 11201,

United States

EMEA office

Charlemont Street, Saint Kevin's, Dublin,

D02 VN88,

Ireland

Middle East office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company number: 256 9431 77 | Copyright 2026 | Terms and conditions | Privacy policy
