Anthropic × UK Government. What It Really Means for Public‑Sector AI

Anthropic

Jan 30, 2026

Not sure what to do next with AI?

Assess readiness, risk, and priorities in under an hour.

➔ Schedule a Consultation

The UK is piloting a GOV.UK AI assistant with Anthropic. Headlines call it “ironic,” given Anthropic's own warnings about AI‑driven job losses. The real story is a chance to modernise citizen services, provided government keeps control of data, deploys open integrations, and measures outcomes (faster resolutions, higher completion rates) rather than hype.

Why this matters now

The UK is moving from AI talk to AI delivery: a citizen assistant to help people navigate services and get tailored guidance, starting with employment support.

Media framed it as ironic: Anthropic warning about automation risks while helping build a job‑seeker tool. Both can be true; the point is safety + usefulness in the same programme.

For public bodies, the question isn’t “AI: yes/no?” but “Under what controls—and does it actually help citizens?”

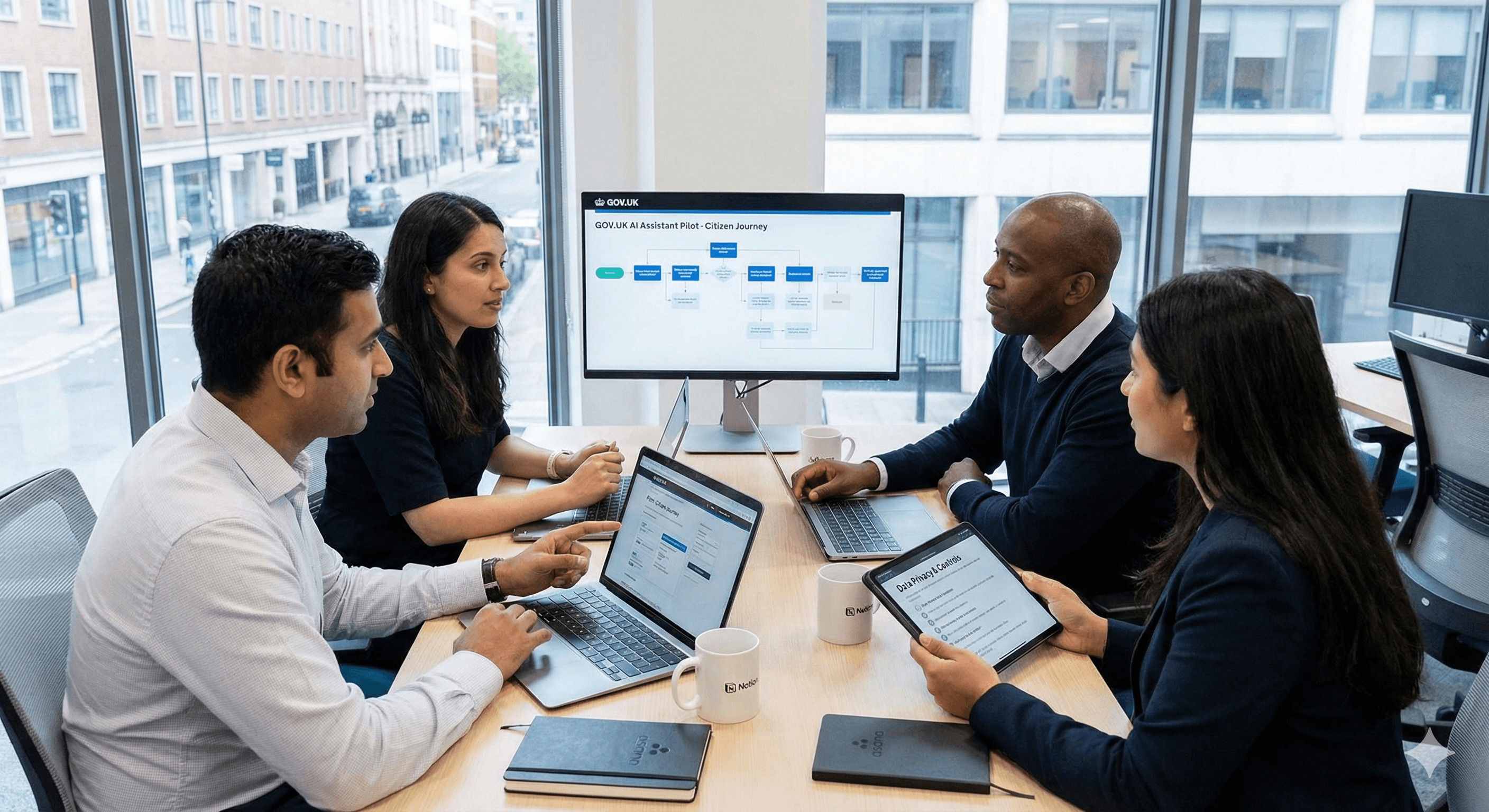

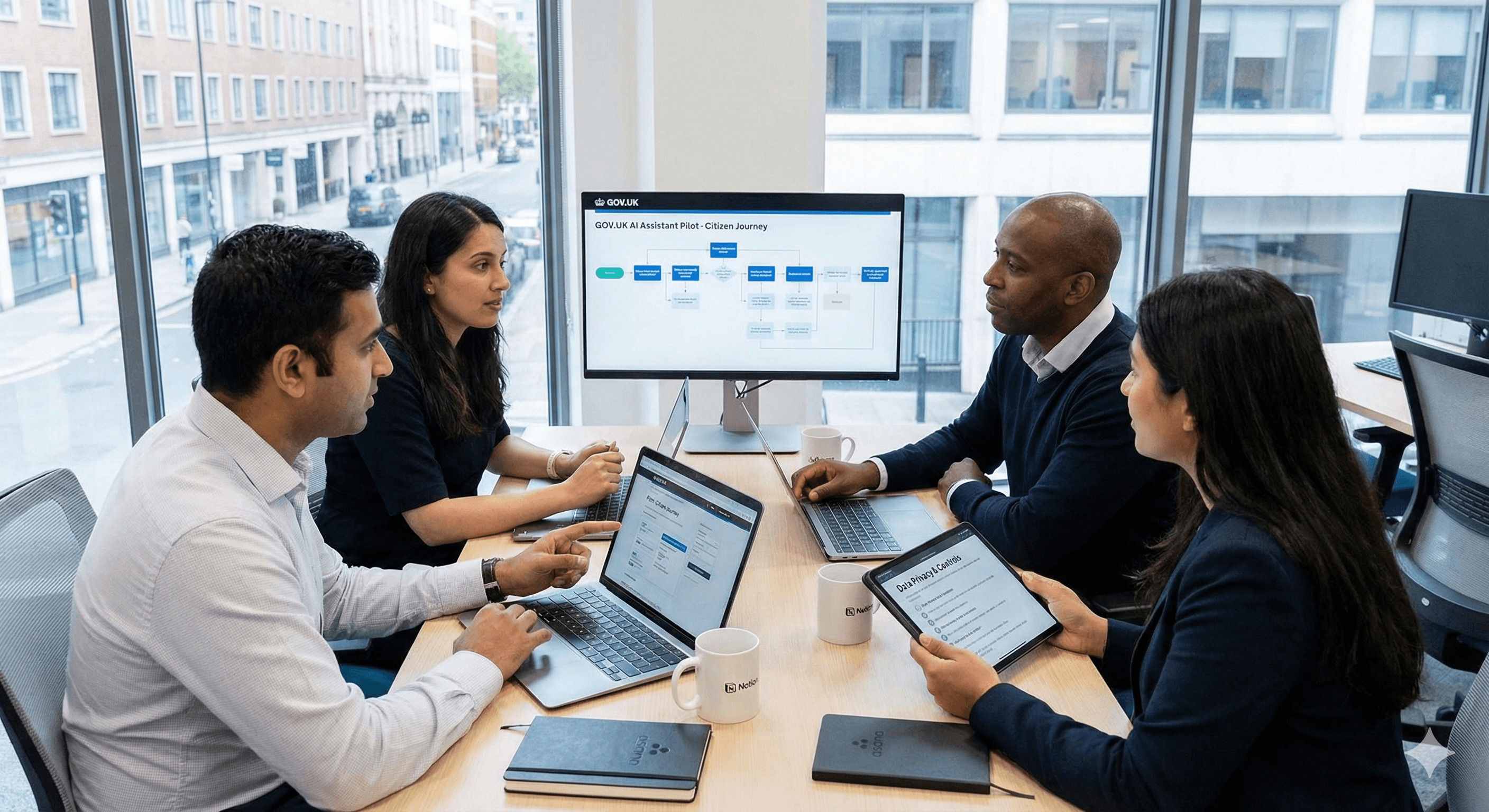

What’s being piloted

A GOV.UK conversational assistant that can guide users through steps and forms, not just answer FAQs.

Initial scope: employment and training support (job seekers, skills, benefits navigation).

Target capability: link to authoritative pages, walk through eligibility criteria, summarise options, and surface the next action.

Outcomes to test: completion rate uplift (people actually finishing processes), time‑to‑answer, and case‑worker load.

Guardrails that make this work

Public‑sector controls first

Data residency in UK regions; customer‑managed keys; no training on citizen prompts by default.

Access logging and independent audit.

Open integration layer

Use open standards/APIs so components can be swapped (models, vector stores, orchestration).

Avoid single‑vendor dependencies for identity, search, and content retrieval.

Human in the loop

Clear escalation to human agents; hand‑off transcripts captured.

High‑risk topics (benefits sanctions, immigration) require confirmable sources and double‑checks.
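To make the hand‑off idea concrete, here is a minimal Python sketch of routing a message for human review when it touches a high‑risk topic, while carrying the transcript along. The keyword list, the `Routing` type, and `route_message` are all hypothetical names for illustration; a real service would likely use a reviewed topic classifier rather than keyword matching.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical keyword-to-topic map; a real deployment would own and review this list.
HIGH_RISK_KEYWORDS = {
    "sanction": "benefits sanctions",
    "immigration": "immigration",
    "visa": "immigration",
}


@dataclass
class Routing:
    needs_human_review: bool
    topic: Optional[str]
    transcript: List[str]


def route_message(message: str, transcript: List[str]) -> Routing:
    """Flag high-risk topics for human review and keep the transcript for hand-off."""
    updated = transcript + [message]  # copy, so the caller's list is not mutated
    lowered = message.lower()
    for keyword, topic in HIGH_RISK_KEYWORDS.items():
        if keyword in lowered:
            return Routing(needs_human_review=True, topic=topic, transcript=updated)
    return Routing(needs_human_review=False, topic=None, transcript=updated)
```

The captured transcript is what lets a human agent pick up the case with full context instead of asking the citizen to start again.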

Safety + quality evaluation

Pre‑launch evals on helpfulness, factuality, bias; red‑teaming on sensitive use‑cases.

In‑production monitoring: drift, unsafe outputs, and resolution outcomes.

Transparent UX

Labelling that this is an AI assistant; show sources; record consent where data is reused.

What to measure

Task completion: % of users who finish a journey (e.g., job‑search sign‑up) via the assistant vs. web pages alone.

Average handling time: reduction for agent‑assisted cases.

Deflection quality: when the assistant resolves an issue, is it correct? (spot‑checks).

Equity: performance across demographics and accessibility needs.

Cost per successful outcome: operational cost divided by completed, verified cases.
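Two of these measures reduce to simple arithmetic, sketched below in Python. The `Journey` record and its field names are assumptions made for illustration, not a schema from the pilot.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Journey:
    channel: str     # "assistant" or "web" (hypothetical labels)
    completed: bool  # the user finished the journey
    verified: bool   # a spot-check confirmed the outcome was correct


def completion_rate(journeys: List[Journey], channel: str) -> float:
    """Share of journeys on one channel that were completed."""
    subset = [j for j in journeys if j.channel == channel]
    if not subset:
        return 0.0
    return sum(j.completed for j in subset) / len(subset)


def cost_per_successful_outcome(operational_cost: float,
                                journeys: List[Journey]) -> Optional[float]:
    """Operational cost divided by completed, verified cases (None if there are none)."""
    successes = sum(1 for j in journeys if j.completed and j.verified)
    if successes == 0:
        return None
    return operational_cost / successes


# Made-up sample data, purely to show the calculation.
sample = [
    Journey("assistant", True, True),
    Journey("assistant", True, True),
    Journey("assistant", True, False),
    Journey("assistant", False, False),
    Journey("web", True, True),
    Journey("web", False, False),
]
uplift = completion_rate(sample, "assistant") - completion_rate(sample, "web")
```

Counting only verified completions in the denominator keeps the cost figure honest: a journey that finished with a wrong answer is not a success.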

Practical architecture

Frontend: GOV.UK design system components for trust and accessibility.

Brain: orchestration service calling a model (swappable), retrieval from a curated knowledge base, eligibility calculators.

Data: UK‑hosted vector store; content pipeline ingesting only authoritative pages; nightly rebuilds.

Safety: prompt filters, PII redaction, policy checks pre‑response; human escalation paths.

Analytics: consented telemetry + outcome tagging; dashboards for managers.
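One way to picture the "swappable" part of this design is a small orchestrator that depends only on interfaces, sketched here in Python. `Model`, `Retriever`, `Orchestrator`, `EchoModel`, and `StaticRetriever` are hypothetical names, and the two redaction patterns (a National Insurance number shape and a basic email shape) are illustrative only; real PII detection needs much broader coverage.

```python
import re
from typing import List, Protocol


class Model(Protocol):
    def generate(self, prompt: str) -> str: ...


class Retriever(Protocol):
    def search(self, query: str) -> List[str]: ...


# Illustrative patterns only, not a complete PII detector.
NINO_PATTERN = re.compile(r"\b[A-Z]{2}\d{6}[A-D]\b")
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def redact_pii(text: str) -> str:
    """Mask obvious identifiers before the text reaches retrieval or the model."""
    text = NINO_PATTERN.sub("[REDACTED_NINO]", text)
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


class Orchestrator:
    """Depends only on the two interfaces, so either component can be swapped."""

    def __init__(self, model: Model, retriever: Retriever) -> None:
        self.model = model
        self.retriever = retriever

    def answer(self, user_message: str) -> str:
        safe_message = redact_pii(user_message)
        passages = self.retriever.search(safe_message)
        prompt = "Context:\n" + "\n".join(passages) + "\n\nQuestion: " + safe_message
        return self.model.generate(prompt)


class EchoModel:
    """Stand-in model that returns its prompt, useful for testing the wiring."""

    def generate(self, prompt: str) -> str:
        return prompt


class StaticRetriever:
    """Stand-in retriever that always returns one fixed passage."""

    def search(self, query: str) -> List[str]:
        return ["Example passage from an authoritative page."]
```

Swapping the model or the vector store then means writing a new class with the same method, not rewriting the service, which is the portability the open integration layer is meant to buy.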

Risks & mitigations

Hallucinations on policy → Retrieval‑first design; require citations; block answers without sources.

Over‑automation → Default to suggestive mode; require explicit user confirmation before any irreversible step.

Vendor lock‑in → Contract for portability (export data, swap models), and maintain a second model as standby.

Equity gaps → Accessibility testing; language variants; bias evals on representative datasets.

Privacy creep → Data minimisation; retention limits; DPIAs published.
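The "block answers without sources" mitigation can be sketched as a gate between the model and the user. The Python below is a minimal illustration, assuming a hypothetical `DraftAnswer` type and treating any URL under `https://www.gov.uk/` as authoritative; the real allow‑list and fallback wording would be design decisions for the service team.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical fallback message shown when no trusted source backs the draft.
FALLBACK = "I can't answer that from official guidance. Would you like to speak to an adviser?"


@dataclass
class DraftAnswer:
    text: str
    sources: List[str] = field(default_factory=list)


def gate_answer(draft: DraftAnswer, allowed_prefix: str = "https://www.gov.uk/") -> str:
    """Release a draft only if it cites at least one source under the allowed prefix."""
    trusted = [s for s in draft.sources if s.startswith(allowed_prefix)]
    if not trusted:
        return FALLBACK
    citations = "\n".join(f"- {s}" for s in trusted)
    return f"{draft.text}\n\nSources:\n{citations}"
```

Failing closed to a human hand‑off offer means an uncited answer costs the user a short delay rather than a wrong decision about their benefits.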

How this fits a modern public‑sector stack

Notion/Glean as the knowledge base and retrieval source of truth.

Open‑weight models as alternates where sovereignty or cost require self‑hosting.

FAQs

Will the assistant decide benefits or sanctions?

No. It informs and guides; decisions remain with authorised officers and systems.

Is my data used to train the model?

By default, prompts and personal data are not used to train public models. Data is stored only to improve the service under UK‑government controls.

Can I speak to a person?

Yes. The assistant offers hand‑off to trained agents with full context.

Call to action

Public‑sector lead?

Book a 60‑minute scoping session. We’ll design the guardrails, wire retrieval to your authoritative content, and run a pilot that measures outcomes citizens actually feel.

Generation

Digital

Business Number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
