AI Cybersecurity Resilience: 2026 Protections

AI Safety

Nov 26, 2025

As AI models grow more capable at cybersecurity tasks, OpenAI and the wider ecosystem are fortifying them with layered safeguards, extensive testing, and collaboration with international security experts. With 2026 approaching, the focus is on practical resilience: faster detection, safer rollouts, and measurable risk reduction.

Why this matters now

Attackers are already using automation and AI to probe systems rapidly. Defenders need AI that can identify subtle signals, correlate alerts, and help teams act swiftly, without introducing new risks. The opportunity lies in pairing powerful models with strict controls and human oversight, improving outcomes without expanding your attack surface.

Key points

Enhanced AI capabilities in cybersecurity. Modern models analyze vast event streams, learn typical patterns, and flag anomalies sooner, reducing mean time to detect (MTTD).

Implementation of layered safeguards. Defence-in-depth now includes model-level controls, policy guidelines, and continuous evaluation—not just perimeter tools.

Partnerships with global security experts. External red teaming, incident simulation, and standards collaboration help close gaps and strengthen defences.
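As a rough illustration of "learn typical patterns and flag anomalies," the Python sketch below compares recent hourly event counts against a historical baseline using a z-score. It is a stand-in statistical technique, not how any particular AI model works; the input data, the `flag_anomalies` function, and the default threshold of 3 are all hypothetical.

```python
from statistics import mean, pstdev


def flag_anomalies(history: list[int], recent: list[int], threshold: float = 3.0) -> list[int]:
    """Return the indexes of values in `recent` that sit far above the `history` baseline.

    Uses a plain z-score; `threshold` is an illustrative default, not a recommendation.
    """
    baseline = mean(history)
    spread = pstdev(history) or 1.0  # fall back to 1.0 if the baseline is perfectly flat
    return [i for i, value in enumerate(recent) if (value - baseline) / spread > threshold]


# Hypothetical hourly sign-in counts for one service account.
history = [10, 12, 11, 9, 10, 13, 11, 12]
recent = [11, 10, 48, 12]
print(flag_anomalies(history, recent))  # [2]
```

A learned model replaces the fixed mean and standard deviation with richer behavioural baselines, but the principle of scoring deviation from "normal" is the same.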

What’s new and how it works

AI models are evolving to tackle real-world threats at every stage, from data collection to response:

Data to detection. Models enrich telemetry from endpoints, identity systems, networks, and cloud platforms, giving analysts correlated insights.

Prediction to prevention. Pattern recognition highlights suspicious behaviours before they escalate, enabling preventive measures (e.g., enforced MFA, conditional access).

Response at speed. Safe automation can draft response playbooks, open tickets, and orchestrate routine containment steps—always with approvals and audit trails. For incident workflows and team coordination, see Asana.

Feedback loops. Post-incident learnings, synthetic tests, and red-team findings continuously improve model quality and reduce false positives.
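The "data to detection" step often starts with correlation: grouping alerts from different telemetry sources that concern the same user or host within a short time window. Here is a minimal Python sketch under that assumption; the `Alert` fields, the `correlate` function, and the 30-minute window are illustrative choices, not a prescribed design.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    source: str    # e.g. "endpoint", "identity", "network", "cloud"
    entity: str    # the user or host the alert refers to
    time: datetime
    title: str


def correlate(alerts: list[Alert], window: timedelta) -> list[list[Alert]]:
    """Group alerts about the same entity that arrive within `window` of the group's latest alert."""
    groups: list[list[Alert]] = []
    open_group: dict[str, list[Alert]] = {}  # most recent group per entity
    for alert in sorted(alerts, key=lambda a: a.time):
        group = open_group.get(alert.entity)
        if group is not None and alert.time - group[-1].time <= window:
            group.append(alert)
        else:
            group = [alert]
            groups.append(group)
            open_group[alert.entity] = group
    return groups


t0 = datetime(2025, 11, 26, 9, 0)
alerts = [
    Alert("identity", "alice", t0, "Impossible-travel sign-in"),
    Alert("endpoint", "alice", t0 + timedelta(minutes=4), "New persistence mechanism"),
    Alert("network", "bob", t0 + timedelta(minutes=5), "Port scan"),
    Alert("cloud", "alice", t0 + timedelta(hours=3), "Storage bucket made public"),
]
for group in correlate(alerts, timedelta(minutes=30)):
    print(group[0].entity, [a.source for a in group])
```

Because each alert is compared with the latest alert in its entity's group, the burst of identity and endpoint activity for alice stays together, while the cloud alert three hours later starts a fresh group for analysts to assess.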

Practical steps

OpenAI and industry partners continue to invest in research and development, focusing on robust security frameworks that harness AI’s predictive capabilities. Here’s how your organization can apply similar principles:

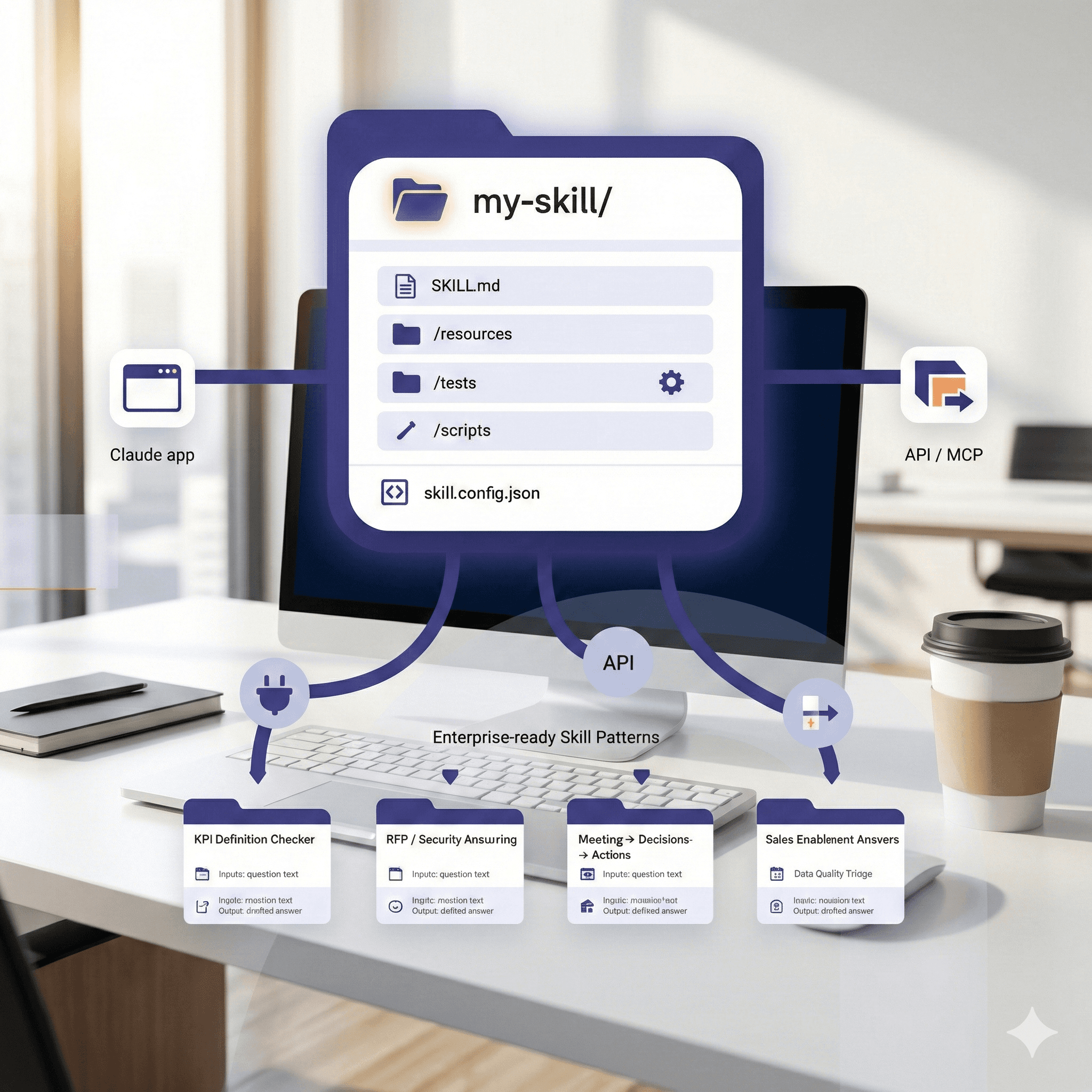

1) Establish layered safeguards for AI use

Create a control stack that spans:

Model safeguards: policy prompts, allow/deny lists, rate limits, sensitive-data filters.

Operational controls: identity-aware access, key management, network isolation, secret rotation.

Evaluation & testing: pre-deployment testing, adversarial prompts, scenario-based drills, ongoing drift checks.

Governance: clear ownership, risk registers, data protection impact assessments (DPIAs) where applicable, and rapid rollback paths. Documenting guardrails and runbooks in Notion keeps teams aligned and audit-ready.
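A few of the model safeguards listed above can be sketched as a gate that runs before a prompt reaches a model. The Python below assumes a sliding-window rate limit per user, a small deny list, and regex-based redaction of sensitive data; the patterns, terms, and names (`RateLimiter`, `screen_prompt`) are hypothetical and would need tuning for a real environment.

```python
import re
import time
from typing import Optional

# Hypothetical sensitive-data patterns; replace with ones that match your own data.
REDACTIONS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),  # shape of an AWS access key ID
}
# Hypothetical deny list of requests the assistant should never act on.
DENY_TERMS = {"disable edr", "delete audit log"}


class RateLimiter:
    """Sliding-window limiter: at most `limit` calls per `window` seconds per user."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self.calls: dict[str, list[float]] = {}

    def allow(self, user: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Keep only the timestamps that are still inside the window.
        recent = [t for t in self.calls.get(user, []) if now - t < self.window]
        allowed = len(recent) < self.limit
        if allowed:
            recent.append(now)
        self.calls[user] = recent
        return allowed


def screen_prompt(user: str, prompt: str, limiter: RateLimiter) -> str:
    """Apply the rate limit, deny list, and redaction before a prompt reaches a model."""
    if not limiter.allow(user):
        raise PermissionError("rate limit exceeded")
    if any(term in prompt.lower() for term in DENY_TERMS):
        raise PermissionError("prompt matches deny list")
    for label, pattern in REDACTIONS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


limiter = RateLimiter(limit=2, window=60)
print(screen_prompt("analyst1", "Summarize alerts for bob@example.com", limiter))
# Summarize alerts for [EMAIL]
```

Keyword deny lists are easy to evade, so treat this layer as one control among several rather than a complete defence.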

2) Integrate AI with existing security tools

SOC alignment. Feed model outputs into SIEM/XDR so analysts receive enriched, explainable context—not just more alerts.

Automated but approval-gated response. Start with low-risk automations (e.g., tagging, case creation, enrichment) before progressing to partial containment with human sign-off—coordinated via Asana.

Quality telemetry. High-quality, well-labelled data lead to better detection. Use enterprise search like Glean to surface signals and previous cases quickly.
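Approval gating can be expressed as a simple rule: low-risk actions run automatically, and everything else waits for a named human. The Python sketch below assumes a hypothetical mapping of action names to risk tiers and treats any unrecognized action as high risk; in practice the queue would sit in front of your ticketing or orchestration tooling rather than in memory.

```python
from dataclasses import dataclass, field
from enum import Enum


class Risk(Enum):
    LOW = 1   # e.g. tagging, case creation, enrichment
    HIGH = 2  # e.g. disabling an account, isolating a host


# Hypothetical mapping of action names to risk tiers.
ACTION_RISK = {
    "tag_case": Risk.LOW,
    "create_case": Risk.LOW,
    "enrich_iocs": Risk.LOW,
    "disable_account": Risk.HIGH,
    "isolate_host": Risk.HIGH,
}


@dataclass
class ResponseQueue:
    executed: list[str] = field(default_factory=list)
    pending_approval: list[str] = field(default_factory=list)

    def submit(self, action: str) -> str:
        # Unknown actions default to HIGH so nothing unreviewed runs automatically.
        risk = ACTION_RISK.get(action, Risk.HIGH)
        if risk is Risk.LOW:
            self.executed.append(action)
            return "executed"
        self.pending_approval.append(action)
        return "awaiting approval"

    def approve(self, action: str, approver: str) -> str:
        self.pending_approval.remove(action)  # raises ValueError if the action is not pending
        self.executed.append(action)
        return f"{action} approved by {approver}"


queue = ResponseQueue()
print(queue.submit("enrich_iocs"))   # executed
print(queue.submit("isolate_host"))  # awaiting approval
print(queue.approve("isolate_host", "analyst on shift"))
```

Defaulting unknown actions to the high-risk tier keeps the failure mode safe: a new automation has to be explicitly classified as low risk before it can bypass review.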

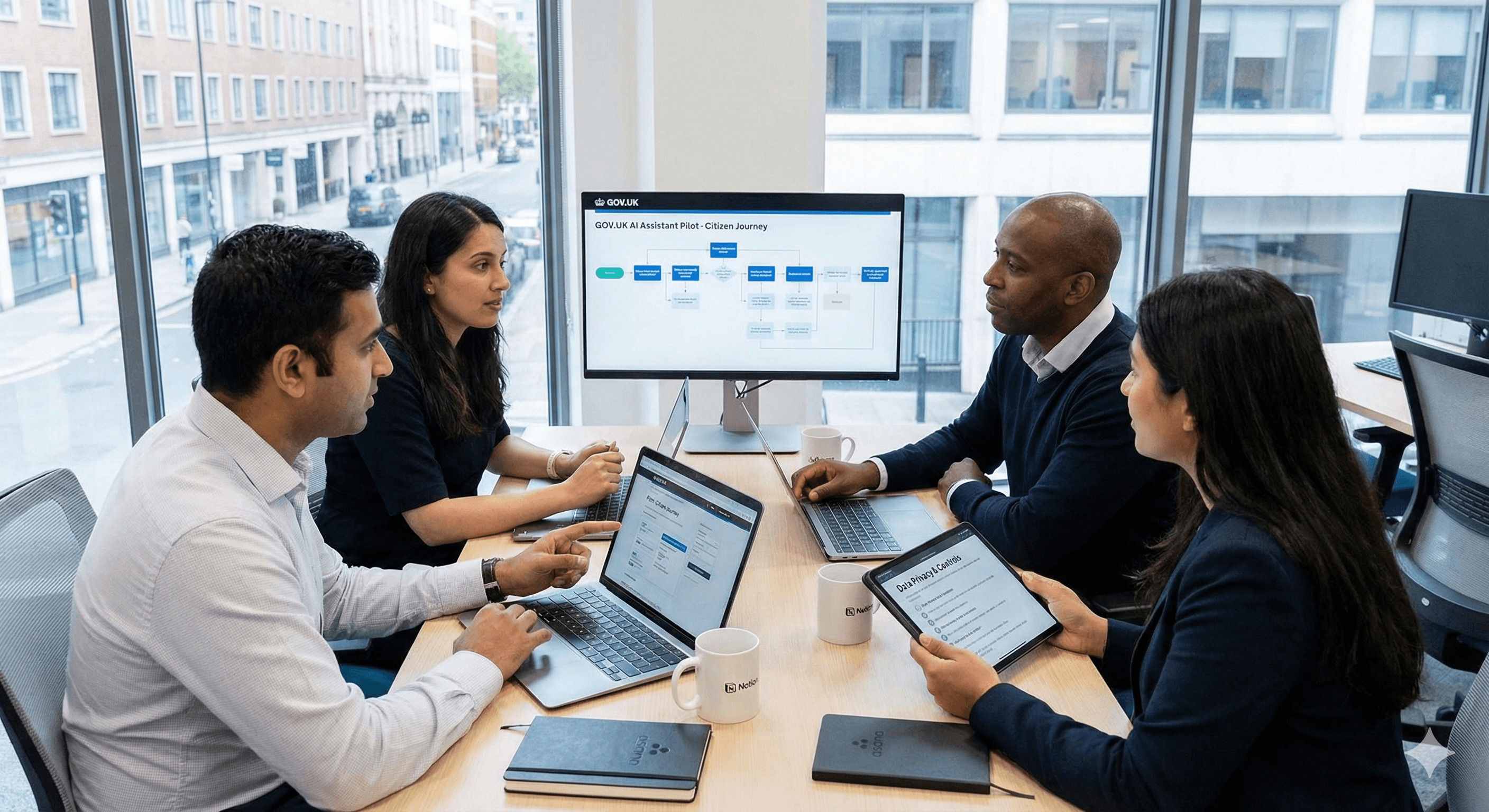

3) Partner with global security experts

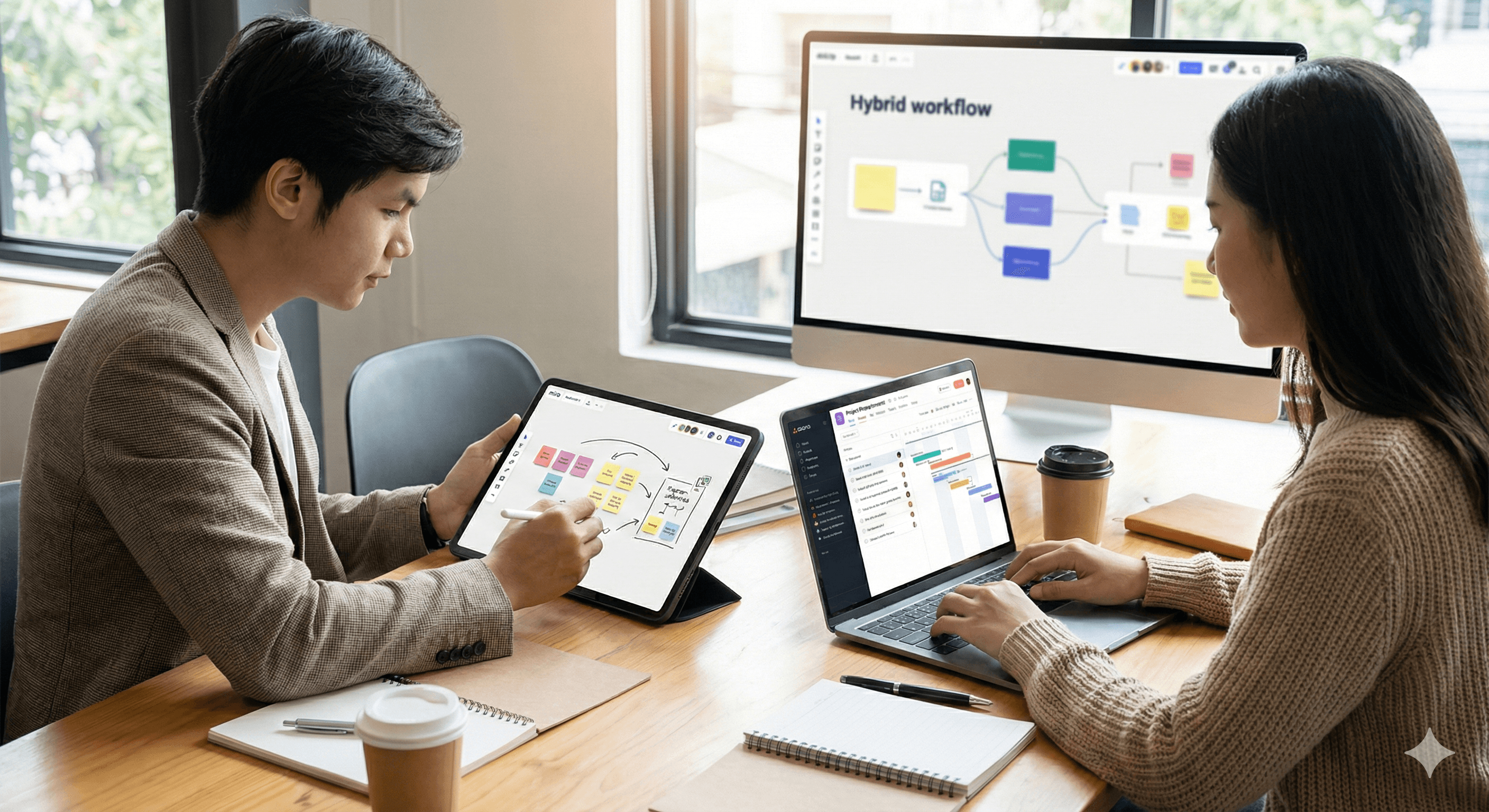

External red teaming. Commission tests that target both traditional infrastructure and the AI-assisted workflows around it. Visualize findings and remediation plans on collaborative canvases in Miro.

Benchmarking and assurance. Compare model performance against known attack scenarios; track MTTD/MTTR and false-positive rates.

Community collaboration. Engage with standards bodies and trusted researchers to share insights and improve safety methods.
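Tracking MTTD and MTTR only helps if everyone computes them the same way. Here is a minimal Python sketch, assuming each incident record carries hypothetical `started`, `detected`, and `resolved` timestamps and measuring MTTR from detection to resolution (some teams measure it from the start of the incident instead).

```python
from datetime import datetime, timedelta


def mean_duration(deltas: list[timedelta]) -> timedelta:
    # sum() needs a timedelta start value; timedelta supports division by an int.
    return sum(deltas, timedelta()) / len(deltas)


def mttd_mttr(incidents: list[dict]) -> tuple[timedelta, timedelta]:
    """Return (MTTD, MTTR) for a list of incident records.

    Assumes hypothetical 'started', 'detected', and 'resolved' datetime keys.
    MTTD runs from start to detection; MTTR here runs from detection to resolution.
    """
    mttd = mean_duration([i["detected"] - i["started"] for i in incidents])
    mttr = mean_duration([i["resolved"] - i["detected"] for i in incidents])
    return mttd, mttr


start = datetime(2025, 11, 1, 8, 0)
incidents = [
    {"started": start, "detected": start + timedelta(hours=2), "resolved": start + timedelta(hours=5)},
    {"started": start, "detected": start + timedelta(hours=4), "resolved": start + timedelta(hours=5)},
]
mttd, mttr = mttd_mttr(incidents)
print(mttd, mttr)  # 3:00:00 2:00:00
```

Running the same calculation before and after an AI-assisted workflow goes live gives a like-for-like comparison, provided the definitions stay fixed.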

4) Build responsible-AI security into day-to-day work

Human-in-the-loop. Require analyst review for significant actions until confidence thresholds are met.

Auditability. Log prompts, responses, decisions, and overrides to support forensics and compliance—store and share policies in Notion.

Least privilege & data minimization. Keep models scoped to the minimum data needed to achieve security outcomes.

Training & culture. Upskill your SOC on AI-assisted triage, playbook creation, and prompt hygiene; use Miro for workshops and tabletop exercises.
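For auditability, one common way to make a log tamper-evident (a technique the article does not prescribe) is to chain entries with hashes, so that editing any recorded prompt, response, decision, or override breaks verification. Here is a minimal Python sketch using only the standard library; the `AuditLog` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which each entry stores the hash of the previous entry.

    Detects edits to recorded entries, but not truncation of the tail or a fully
    rewritten chain; anchor the latest hash somewhere the AI pipeline cannot write.
    """

    def __init__(self):
        self.entries: list[dict] = []

    def record(self, actor: str, kind: str, detail: str) -> dict:
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "kind": kind,  # e.g. "prompt", "response", "decision", "override"
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("triage-model", "response", "Recommend isolating host WS-042")
log.record("analyst1", "override", "Declined isolation; false positive")
print(log.verify())  # True
log.entries[0]["detail"] = "edited after the fact"
print(log.verify())  # False
```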

5) Measure what matters

Define KPIs that senior leaders can understand:

Risk reduction: incidents prevented, severity reduction, dwell-time trends.

Efficiency: analyst time saved, cases handled per shift, automation adoption rates.

Quality: precision/recall for detections, false-positive/negative ratios, post-incident improvement actions closed. Track remediation tasks with Asana and knowledge artefacts in Notion.
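Precision, recall, and false-positive rate can all be derived from two sets of event IDs: what the detector flagged and what analysts later confirmed as malicious. Here is a minimal Python sketch, assuming a hypothetical `detection_quality` helper and treating every evaluated event outside both sets as a true negative.

```python
def detection_quality(flagged: set[str], malicious: set[str], total: int) -> dict[str, float]:
    """Precision, recall, and false-positive rate for one evaluation period.

    flagged:   IDs of events the detector alerted on
    malicious: IDs of events confirmed malicious after review (ground truth)
    total:     number of events evaluated in the period
    """
    tp = len(flagged & malicious)   # correctly flagged
    fp = len(flagged - malicious)   # flagged but benign
    fn = len(malicious - flagged)   # missed
    tn = total - tp - fp - fn       # benign and not flagged
    return {
        "precision": tp / (tp + fp) if flagged else 0.0,
        "recall": tp / (tp + fn) if malicious else 0.0,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }


scores = detection_quality({"e1", "e2", "e3", "e4"}, {"e1", "e2", "e5"}, total=100)
print({k: round(v, 3) for k, v in scores.items()})
# {'precision': 0.5, 'recall': 0.667, 'false_positive_rate': 0.021}
```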

Realistic use cases

Identity anomalies: AI flags unusual access paths or privilege escalation patterns and opens an approval-gated containment workflow in Asana.

Phishing triage: Models cluster reported emails, extract IOCs, and enrich SIEM cases, shaving minutes off each investigation; analysts reference previous cases via Glean.

Cloud posture: Continuous analysis suggests least-privilege adjustments and highlights misconfigurations before they’re exploited; teams storyboard remediation in Miro.

Third-party risk: Text models summarize vendor security artefacts and map them to policy requirements; documentation is centralized in Notion.
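The IOC-extraction step in phishing triage can be approximated with regular expressions before any model is involved. The Python sketch below is deliberately simple and assumes only three indicator types (URLs, IPv4 addresses, SHA-256 hashes); the patterns and the `extract_iocs` name are illustrative, and real extractors also handle defanged indicators and validate matches.

```python
import re

# Deliberately simple patterns for illustration; they do not handle defanged
# indicators (hxxp, [.]), IPv6, or out-of-range IPv4 octets.
IOC_PATTERNS = {
    "url": re.compile(r"https?://[^\s\"'<>]+"),
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
}


def extract_iocs(text: str) -> dict[str, list[str]]:
    """Return unique indicators per type, in first-seen order."""
    return {
        kind: list(dict.fromkeys(pattern.findall(text)))  # dict.fromkeys de-duplicates in order
        for kind, pattern in IOC_PATTERNS.items()
    }


email_body = (
    "Your mailbox is full. Verify at http://login-update.example/verify now. "
    "Sent from 203.0.113.7 with attachment hash "
    "e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855"
)
print(extract_iocs(email_body))
```

The extracted indicators can then be attached to the SIEM case for enrichment, with a model summarizing context around them rather than doing the raw pattern matching.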

FAQs

Q1. How does AI improve cybersecurity resilience?

AI enhances resilience by analyzing high-volume telemetry, spotting anomalies earlier, and drafting response actions. When paired with governance and human review, teams cut detection and response times while reducing false positives.

Q2. What safeguards are being implemented in AI models?

Organizations deploy multi-layered safeguards: policy guardrails, filtering, identity-aware access, network isolation, rate limiting, and continuous red-teaming—plus audit trails for accountability, captured in tools like Notion.

Q3. Who are OpenAI’s partners in this initiative?

Security vendors, standards bodies, and independent researchers contribute through red teaming, evaluation methodologies, and best-practice sharing to improve the safety and effectiveness of AI in cybersecurity. Collaborative workshops can be run in Miro to align stakeholders.

Summary

OpenAI and the broader security community are advancing AI to bolster cyber resilience. With layered safeguards, expert collaboration, and disciplined operations, organizations can reduce risk and respond faster. Want a practical roadmap for 2026? Contact Generation Digital to explore pilots, governance patterns, and safe automation tailored to your environment—with workflows in Asana, collaboration in Miro, documentation in Notion, and rapid discovery via Glean.

Generation Digital

Business Number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
