Work AI Institute: A Practical Guide to Navigating AI Transformation in Canada

Artificial Intelligence

Dec 10, 2025

Not sure what to do next with AI?

Assess readiness, risk, and priorities in under an hour.

What is the Work AI Institute?

The Work AI Institute is a research center focused on how AI delivers measurable outcomes in everyday work. It brings together academics and operators to study real implementations and publish practical guidance that leaders can apply immediately. Its early work highlights patterns that separate hype from lasting results and documents how organizations redesign their processes around AI rather than bolting AI onto outdated methods.

Why it matters now

From pilots to production. Many companies are stuck in “pilot purgatory,” running experiments that never reach production. The Institute’s research outlines the practices used by organizations that have escaped it.

Context beats raw capability. AI becomes useful when connected to an enterprise’s people, processes, systems, and knowledge. That requires a deliberate operating model for AI.

Agents are here. Autonomous and semi-autonomous agents can handle multi-step tasks with human oversight. Safely scaling them requires new governance.

The AI Operating Model (practical blueprint)

Use this model to guide transformation. Each element includes actions and deliverables you can implement in weeks, not months.

1) Strategy & Value

Define three business outcomes for the next two quarters (e.g., shorter cycle times, lower cost-to-serve, higher win rate).

Prioritize 5–10 AI “jobs to be done” that underpin those outcomes.

Produce a one-page AI Value Hypothesis for each job (baseline, target, constraints, owner).
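
If you want to track each AI Value Hypothesis alongside the value dashboard, it can help to keep it as a structured record rather than a slide. The sketch below is one way to do that; the `ValueHypothesis` class, its field names and the invoice figures are hypothetical, and it assumes a single numeric metric per job.

```python
from dataclasses import dataclass, field

@dataclass
class ValueHypothesis:
    """One-page AI Value Hypothesis for a single job-to-be-done (hypothetical schema)."""
    job: str
    metric: str
    baseline: float               # current measured value of the metric
    target: float                 # value we expect to reach with AI
    owner: str
    constraints: list[str] = field(default_factory=list)
    lower_is_better: bool = True  # True for cycle time; set False for win rate

    def target_change_pct(self) -> float:
        """Improvement the target implies, as a percentage of the baseline."""
        if self.lower_is_better:
            delta = self.baseline - self.target
        else:
            delta = self.target - self.baseline
        return 100.0 * delta / self.baseline

    def is_met(self, observed: float) -> bool:
        """True if an observed value reaches the target."""
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target

# Illustrative values only
h = ValueHypothesis(
    job="Invoice reconciliation",
    metric="cycle time (hours)",
    baseline=48.0,
    target=36.0,
    owner="Finance ops lead",
    constraints=["no auto-approval above the approval threshold"],
)
print(f"{h.job}: {h.target_change_pct():.0f}% reduction targeted")  # prints "Invoice reconciliation: 25% reduction targeted"
```

Storing the direction of improvement with the hypothesis avoids misreading a rising metric (such as win rate) as a regression.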

2) Process & Workflow Design

Map current vs. target workflows; highlight where assistants (human-in-the-loop) or agents (autonomous with guardrails) fit.

Design control points: approval thresholds, exception queues, audit logs.

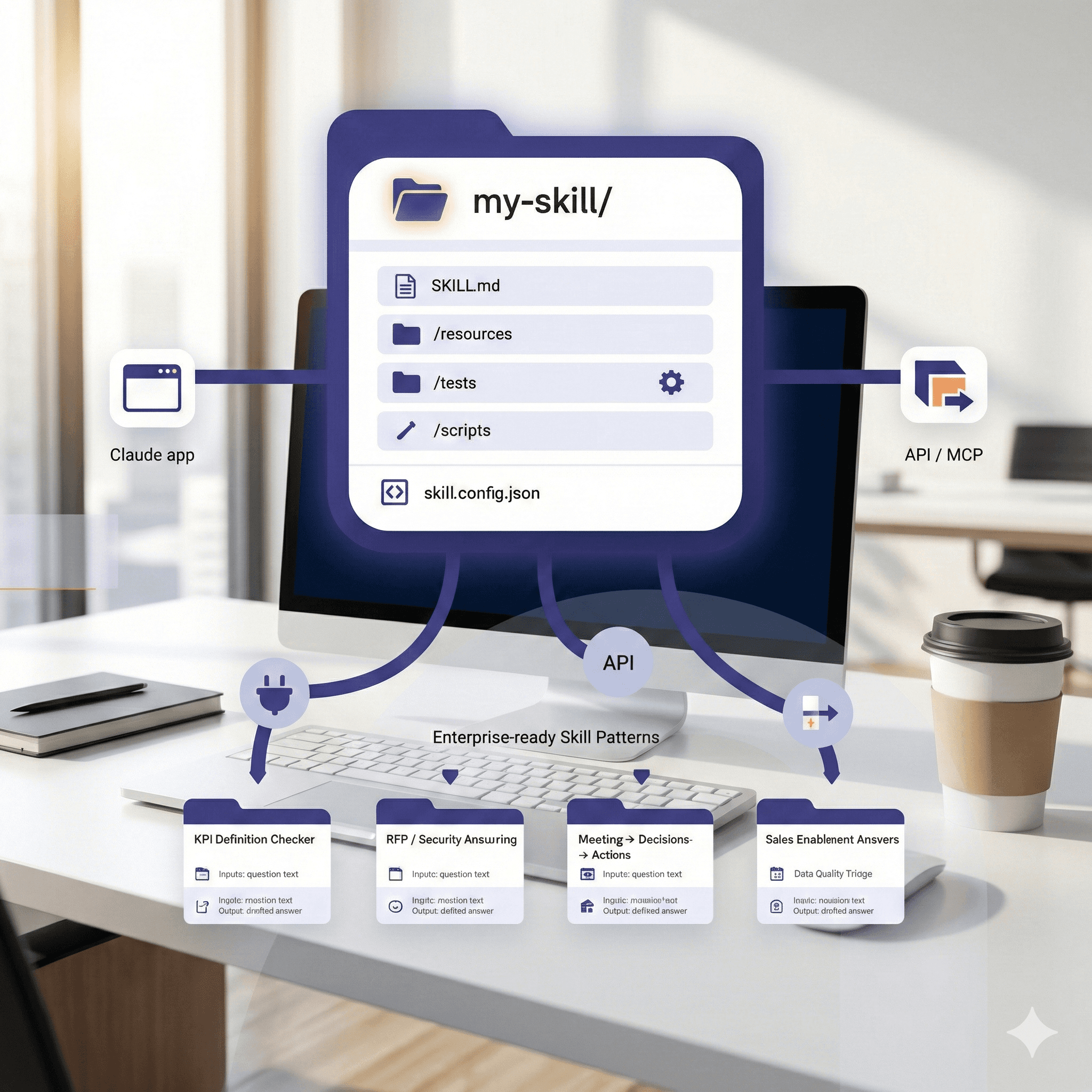

Standardize prompts and playbooks in a shared library; version them as you would code.
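
Control points are easiest to reason about as explicit routing rules. Here is a minimal sketch, assuming each agent proposal carries a monetary amount and a confidence score; the thresholds, the `ProposedAction` type and the in-memory queue and log are hypothetical stand-ins for whatever workflow and logging tools you actually use.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass
class ProposedAction:
    case_id: str
    action: str
    amount: float      # monetary value the action affects
    confidence: float  # agent confidence in [0, 1]

APPROVAL_LIMIT = 1_000.0  # hypothetical: above this, a human must approve
MIN_CONFIDENCE = 0.85     # hypothetical: below this, treat as an exception

audit_log: list[dict] = []
exception_queue: list[ProposedAction] = []

def route(p: ProposedAction) -> str:
    """Apply the control points and record every decision in the audit log."""
    if p.confidence < MIN_CONFIDENCE:
        decision = "exception"
    elif p.amount > APPROVAL_LIMIT:
        decision = "needs_approval"
    else:
        decision = "auto"
    if decision != "auto":
        exception_queue.append(p)  # humans work this queue
    audit_log.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "case_id": p.case_id,
        "action": p.action,
        "decision": decision,
    })
    return decision

print(route(ProposedAction("T-1", "refund", 120.0, 0.93)))    # auto
print(route(ProposedAction("T-2", "refund", 5_000.0, 0.97)))  # needs_approval
print(route(ProposedAction("T-3", "refund", 80.0, 0.40)))     # exception
```

Keeping the thresholds as named constants makes them easy for reviewers to inspect and change, and logging automatic decisions as well as escalated ones gives you the trail needed for later sampling.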

3) Data & Context Readiness

Inventory systems that provide context (knowledge bases, tickets, CRM, docs, code).

Define golden sources and access scopes; remove outdated or duplicate content.

Establish RAG/graph patterns to ground models in trusted knowledge.
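
To make grounding concrete, the sketch below filters a small knowledge base by access scope and builds a prompt that restricts the model to the retrieved sources. It assumes simple keyword overlap in place of the embedding search or knowledge-graph lookup a production RAG system would use; the `Doc` type, scopes and sample articles are hypothetical, and no model call is included.

```python
import re
from dataclasses import dataclass

@dataclass
class Doc:
    doc_id: str
    text: str
    scope: str  # access scope, e.g. "support" or "finance"

def tokens(s: str) -> set[str]:
    """Lowercase word tokens used for a crude keyword match."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))

def retrieve(query: str, docs: list[Doc], user_scopes: set[str], k: int = 2) -> list[Doc]:
    """Return up to k in-scope docs that share the most terms with the query."""
    q = tokens(query)
    allowed = [d for d in docs if d.scope in user_scopes]  # enforce access scopes first
    scored = [(len(q & tokens(d.text)), d) for d in allowed]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]

def grounded_prompt(query: str, hits: list[Doc]) -> str:
    """Build a prompt that limits the model to the retrieved golden sources."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in hits)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not cover the question, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical golden-source articles
kb = [
    Doc("KB-12", "Refunds are issued within 5 business days of approval.", "support"),
    Doc("KB-40", "Password resets require identity verification.", "support"),
    Doc("FIN-3", "Refunds above the approval limit need finance sign-off.", "finance"),
]
hits = retrieve("How long do refunds take?", kb, user_scopes={"support"})
print([d.doc_id for d in hits])  # ['KB-12']
print(grounded_prompt("How long do refunds take?", hits))
```

Note that FIN-3 also mentions refunds but is never retrieved, because the user lacks the finance scope.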

4) Governance & Risk

Draft an AI Use Charter: permitted uses, sensitive data rules, escalation paths.

Create an AI Review Board (Legal, Security, Data, Domain leads) that meets every two weeks.

Implement evaluation mechanisms: quality, bias, safety, and drift checks.
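
Evaluation checks can start as simple comparisons over human-reviewed samples. This sketch assumes each reviewed output is recorded as pass or fail; it flags low quality, a drop against a baseline window (a crude drift signal), and the largest pass-rate gap between groups as one basic bias indicator. The thresholds and the language groups in the example are hypothetical, and safety checks would need their own tests.

```python
from statistics import mean

def pass_rate(results: list[bool]) -> float:
    """Share of reviewed outputs that passed; 0.0 for an empty sample."""
    return mean(1.0 if r else 0.0 for r in results) if results else 0.0

def evaluate(baseline: list[bool], recent: list[bool],
             min_quality: float = 0.90, max_drop: float = 0.05) -> dict:
    """Quality and drift checks comparing a recent window to a baseline window."""
    base, now = pass_rate(baseline), pass_rate(recent)
    return {
        "baseline_rate": base,
        "recent_rate": now,
        "quality_ok": now >= min_quality,           # hypothetical quality bar
        "drift_detected": (base - now) > max_drop,  # pass rate fell by more than max_drop
    }

def max_group_gap(by_group: dict[str, list[bool]]) -> float:
    """Largest difference in pass rate between any two groups."""
    rates = [pass_rate(v) for v in by_group.values()]
    return max(rates) - min(rates)

report = evaluate(baseline=[True] * 95 + [False] * 5,
                  recent=[True] * 88 + [False] * 12)
gap = max_group_gap({"en": [True] * 9 + [False], "fr": [True] * 7 + [False] * 3})
print(report)
print(f"group gap: {gap:.2f}")
```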

5) Organization & Skills

Appoint domain product owners for each AI job-to-be-done.

Upskill with role-specific clinics (analyst, support, sales, developer) and a Prompt Patterns catalogue.

Incentivize adoption based on outcomes, not usage.

6) Measurement & Value Realisation

Track Time Saved, Quality Uplift, Risk Reduction, and Revenue Impact.

Instrument processes end-to-end; compare AI versus control cohorts.

Publish a monthly AI Value Dashboard; stop or scale based on the data.
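
Comparing AI and control cohorts comes down to comparing the same metric across both groups. A minimal sketch, assuming per-ticket handling times in minutes (lower is better) and using Welch's t statistic as one common way to judge whether the gap is larger than noise; the function name and sample figures are hypothetical, and real decisions should rest on much larger samples.

```python
from math import sqrt
from statistics import mean, variance

def compare_cohorts(ai: list[float], control: list[float]) -> dict:
    """Compare a metric between an AI cohort and a control cohort."""
    m_ai, m_ctl = mean(ai), mean(control)
    # Standard error of the difference in means, without assuming equal variances
    se = sqrt(variance(ai) / len(ai) + variance(control) / len(control))
    return {
        "ai_mean": m_ai,
        "control_mean": m_ctl,
        "pct_change": 100.0 * (m_ai - m_ctl) / m_ctl,
        "welch_t": (m_ai - m_ctl) / se,
    }

# Hypothetical handling times in minutes per ticket
ai_cohort = [12, 14, 11, 13, 15]
control_cohort = [18, 20, 17, 19, 21]
result = compare_cohorts(ai_cohort, control_cohort)
print(f"{result['pct_change']:.1f}% change, Welch t = {result['welch_t']:.1f}")
# prints "-31.6% change, Welch t = -6.0"
```

A large negative t alongside a meaningful percentage drop supports scaling; a t near zero points toward iterating or stopping. Before publishing, you may want a p-value or confidence interval as well, for example from `scipy.stats.ttest_ind` with `equal_var=False`.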

Practical examples (cross-industry)

Customer Support: An agent triages tickets, drafts responses, and resolves known issues within policy; humans handle exceptions. Metrics: first-response time, CSAT, re-open rate.

Sales: An assistant composes account summaries from CRM records and emails, suggests next actions, and updates opportunities. Metrics: meeting prep time, pipeline hygiene, win-rate.

Finance & Ops: An agent reconciles invoices and flags anomalies with links to source docs. Metrics: cycle time, error rate, write-offs prevented.

HR & Talent: An assistant drafts job descriptions aligned to competency frameworks and screens for minimum criteria with bias checks. Metrics: time-to-post, time-to-shortlist, diversity signals.

Engineering: An assistant proposes PR summaries and test plans grounded in repository context. Metrics: lead time, escaped defects, DevEx surveys.

A 6-Week Pilot-to-Scale Plan

Week 1 – Portfolio & guardrails. Identify 5–10 candidate use cases; score by value/feasibility/risk. Publish the AI Use Charter and access controls.

Week 2 – Design the work. Map target workflows and control points; define what the assistant/agent does versus the human.

Week 3 – Data and connectors. Connect knowledge sources; define retrieval strategies and test sample prompts with real data.

Week 4 – Build & evaluate. Deploy the first assistant/agent. Create evaluation mechanisms and success metrics.

Week 5 – Shadow production. Run with real work under supervision. Capture exceptions and improve prompts/policies.

Week 6 – Go-live & learn. Launch with the first team. Publish the value dashboard, a runbook, and a change-management plan. Decide to scale, iterate, or stop.
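
The Week 1 scoring step can be as simple as a weighted sum. The sketch below assumes 1–5 ratings for value, feasibility and risk agreed in a workshop; the weights, the `UseCase` type and the example ratings are hypothetical and should be replaced with your own.

```python
from dataclasses import dataclass

@dataclass
class UseCase:
    name: str
    value: int        # 1-5: size of the business outcome
    feasibility: int  # 1-5: data, connectors and skills available now
    risk: int         # 1-5: regulatory, customer and data sensitivity

WEIGHTS = {"value": 0.5, "feasibility": 0.3, "risk": 0.2}  # hypothetical weights

def score(u: UseCase) -> float:
    """Higher value and feasibility raise the score; higher risk lowers it."""
    return (WEIGHTS["value"] * u.value
            + WEIGHTS["feasibility"] * u.feasibility
            + WEIGHTS["risk"] * (6 - u.risk))  # invert risk so 5 (high) counts as 1

candidates = [
    UseCase("Ticket triage", value=4, feasibility=5, risk=2),
    UseCase("Invoice reconciliation", value=5, feasibility=3, risk=3),
    UseCase("Candidate screening", value=3, feasibility=3, risk=5),
]
ranked = sorted(candidates, key=score, reverse=True)
for u in ranked:
    print(f"{score(u):.1f}  {u.name}")
```

Inverting the risk rating keeps every factor on a higher-is-better scale, so the ranking reads naturally; a high-risk case can still be pursued later, once guardrails are in place.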

Tips from high-performing organizations

Ground in context. Connect AI to the systems where work happens; avoid “chat dead-ends.”

Design for exceptions. The last 10% of cases, the exceptions, often hold much of the value; route them to the right people quickly.

Make quality visible. Define accept/reject criteria and sample regularly.

Reward outcomes. Measure what matters (quality, risk, customer value), not tool usage.

Keep humans in control. Start with assistive patterns; automate only where confidence and controls are strong.
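
Making quality visible can mean two things in practice: explicit accept/reject criteria and a regular random sample for reviewers. A sketch under assumptions: the criteria below are hypothetical automated checks (a knowledge-base citation is present, a length limit) standing in for your own rubric, and a fixed seed makes each week's sample reproducible for audit.

```python
import random

# Hypothetical accept/reject criteria, each a simple check on one output
CRITERIA = {
    "cites_source": lambda text: "[KB-" in text,  # must reference a knowledge-base ID
    "within_length": lambda text: len(text) <= 600,
}

def review_sample(outputs: list[str], k: int, seed: int) -> list[str]:
    """Draw a reproducible random sample of outputs for human review."""
    rng = random.Random(seed)
    return rng.sample(outputs, min(k, len(outputs)))

def accept(text: str) -> bool:
    """An output is accepted only if it meets every criterion."""
    return all(check(text) for check in CRITERIA.values())

week_outputs = [
    "Refunds are issued within 5 business days [KB-12].",
    "Please reset your password from the login page.",
    "Password resets require identity verification [KB-40].",
]
sample = review_sample(week_outputs, k=2, seed=42)
for text in sample:
    print("ACCEPT" if accept(text) else "REJECT", "|", text)
```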

How Generation Digital can help

AI Operating Model workshop. Define outcomes, portfolio, guardrails, and metrics in a single day.

Use-case design sprints. Map workflows and control points; prototype assistants/agents.

Data & context integration. Connect knowledge sources and establish retrieval/graph patterns.

Governance toolkit. Templates for AI Charters, evaluation mechanisms, and value dashboards.

Adoption enablement. Role-based training and prompt libraries; change-management playbooks.

Contact us to book an AI Transformation workshop with Generation Digital.

FAQ

What is the Work AI Institute?

A research center focused on making AI work in real organizations. It studies live implementations and publishes guidance that leaders can put into practice.

How does it help with transformation?

It distills evidence from companies already scaling AI and turns it into frameworks, checklists, and strategies you can adopt quickly.

What’s the “AI Transformation 100”?

A major publication that compiles 100 practical strategies from interviews and studies of leaders who have achieved AI impact.

Where should we start?

Choose a narrow set of high-value jobs-to-be-done, design human-in-the-loop workflows, establish guardrails, and measure value from day one.

How is this different from generic AI training?

The focus is on changing the operating model rather than running one-off demos: connecting AI to your data, workflows, controls, and metrics.

Generation Digital

Business Number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy