Gemini Audio Models: Robust, Natural Voice Interactions

Gemini

Dec 15, 2025

Why Gemini audio is important now

Modern voice interactions can’t depend on pieced-together pipelines (STT → LLM → TTS), where each hand-off between components adds delay. They need a unified, native-audio model that listens continuously, reasons, calls tools, and responds with minimal delay and no awkward pauses. That’s the promise of Gemini 2.5 Native Audio with the Live API.

What’s new

Native audio I/O (Gemini 2.5): Real-time streaming input and output of audio for more natural communication, including expressive, controllable speech generation.

Sharper function calls: More reliable tool activation during live chats; top scores on ComplexFuncBench Audio and improved multi-turn coherence.

Live speech translation: Continuous listening and two-way real-time translation now available as a beta in Google Translate (Android) with support for headphones; wider availability to come.

Enterprise delivery: Gemini Live API on Vertex AI provides low-latency global serving and data-residency controls. New native-audio model IDs are listed in the Gemini API changelog.

Key benefits

Natural, human-like voice: Continuous streaming reduces delay and maintains prosody, pacing, and smooth dialogue.

Actionable conversations: More precise function calling allows the assistant to access account data, check stock, or create tickets while talking—without interrupting the flow.

Global experiences: Built-in speech-to-speech translation enables multilingual support and real-time guidance.

Practical examples (by industry)

Customer service / sales: Live, multi-turn calls that verify identity, update orders, and schedule follow-ups during the conversation. Run them in production on Vertex AI with monitoring and quotas.

Field operations: Hands-free workflows (checklists, fault diagnosis) with immediate, spoken responses; switch language mid-conversation if necessary.

Travel & hospitality: Two-way translation between staff and guests; headset experience through the Translate beta for live speech-to-speech.

Education & coaching: Real-time pronunciation feedback and voice tutoring with adjustable TTS voices and pacing.

How it works (at a glance)

A Live API session streams audio to Gemini.

The model listens, reasons, and uses tools (APIs, knowledge) as needed.

Native audio output responds immediately with controllable voice, style, and speed.
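The first step above, streaming audio into a session, can be sketched as splitting raw audio into short frames and sending them one at a time. This is a minimal illustration, not the Live API client itself: the 16 kHz, 16-bit mono PCM format and the 100 ms frame size are assumptions to check against the current Live API documentation, and `send` is a hypothetical stand-in for whatever call your session object exposes.

```python
from typing import Callable, Iterator

SAMPLE_RATE_HZ = 16_000  # assumed input sample rate
BYTES_PER_SAMPLE = 2     # assumed 16-bit mono PCM
FRAME_MS = 100           # assumed frame duration per send


def pcm_frames(pcm: bytes, frame_ms: int = FRAME_MS) -> Iterator[bytes]:
    """Yield consecutive frames of raw PCM; the final frame may be shorter."""
    frame_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * frame_ms // 1000
    for start in range(0, len(pcm), frame_bytes):
        yield pcm[start:start + frame_bytes]


def stream_audio(pcm: bytes, send: Callable[[bytes], None]) -> int:
    """Pass each frame to `send` (hypothetical session call); return the frame count."""
    count = 0
    for frame in pcm_frames(pcm):
        send(frame)
        count += 1
    return count
```

Short frames keep the delay between speech and model input low; in a real client the frames would come from a microphone buffer rather than a byte string held in memory.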

Implementation steps

Choose a channel: Web, mobile, telephony, or contact centre. Start with a single, measurable call type (e.g., order status).

Deploy on Vertex AI (recommended): Use the Gemini Live API for streaming and set up data residency/region to comply with regulations.

Model selection & IDs: Begin with gemini-2.5-flash-preview-native-audio-dialog for low latency; evaluate the “thinking” variant for complex reasoning. Follow the Gemini API changelog for updates.

Design function calling: Define tools (CRM, OMS, payments) with clear, typed schemas to enable reliable activation by Gemini mid-conversation.

Voice & UX: Use TTS controls (style, accent, pace, tone) to match brand and accessibility requirements.

Safety, testing, and QA: Log transcripts, audit tool calls, and conduct scripted test calls. Measure latency, handoff rate, task success, and customer satisfaction.
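The measurements named in the QA step can be computed from per-call logs along these lines. This is a sketch under assumptions: the `CallRecord` fields are hypothetical names for whatever your logging captures, and latency is taken here to mean time to the first spoken response.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class CallRecord:
    latency_ms: float     # assumed: time to first spoken response
    handed_off: bool      # call was transferred to a human agent
    task_succeeded: bool  # caller's task was completed


def summarize(calls: list[CallRecord]) -> dict[str, float]:
    """Return median latency plus handoff and task-success rates."""
    if not calls:
        raise ValueError("no calls to summarize")
    total = len(calls)
    return {
        "median_latency_ms": median(c.latency_ms for c in calls),
        "handoff_rate": sum(c.handed_off for c in calls) / total,
        "task_success_rate": sum(c.task_succeeded for c in calls) / total,
    }
```

Running the same summary over scripted test calls before and after a prompt or tool change gives a simple regression check.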

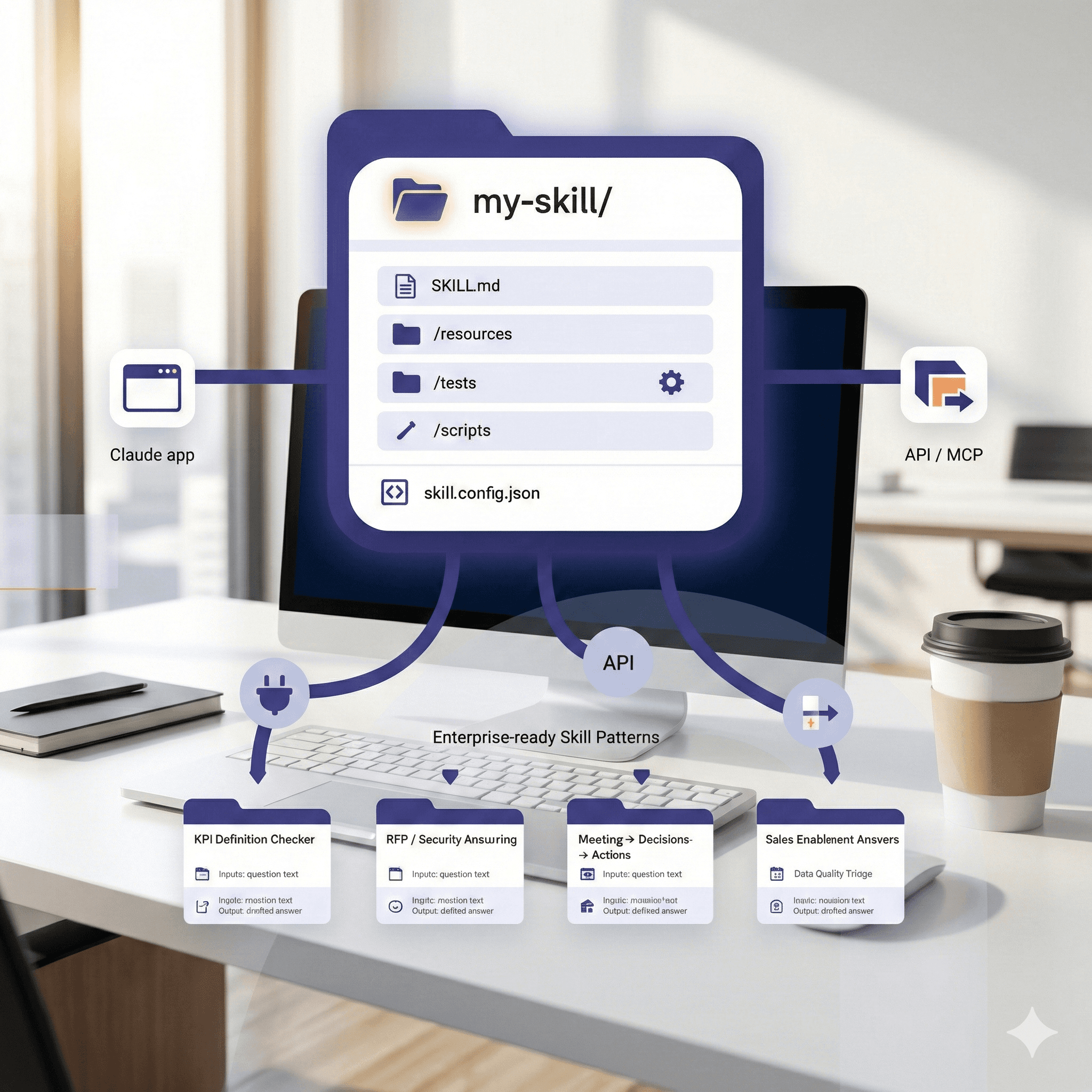

Scale & integrate: Connect transcripts to Asana for follow-ups, store prompts in Notion, surface knowledge with Glean, and map out flows in Miro.
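For the "Design function calling" step above, a typed tool definition and a defensive dispatcher might look like the following. It is an illustrative sketch only: the tool name `get_order_status`, its JSON-Schema-style declaration, and the stub backend are hypothetical, and the exact declaration format the Live API expects should be taken from its documentation.

```python
from typing import Any, Callable

# Hypothetical tool declaration in a JSON-Schema-like shape.
ORDER_STATUS_TOOL = {
    "name": "get_order_status",
    "description": "Look up the current status of a customer order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}

# Covers only the schema types used in this sketch.
_PY_TYPES = {"string": str, "boolean": bool}


def validate_args(schema: dict, args: dict[str, Any]) -> list[str]:
    """Return a list of problems with `args`; an empty list means valid."""
    params = schema["parameters"]
    errors = [
        f"missing required argument: {name}"
        for name in params.get("required", [])
        if name not in args
    ]
    for name, value in args.items():
        spec = params["properties"].get(name)
        if spec is None:
            errors.append(f"unexpected argument: {name}")
        elif not isinstance(value, _PY_TYPES[spec["type"]]):
            errors.append(f"{name} should be of type {spec['type']}")
    return errors


def get_order_status(order_id: str) -> dict:
    """Stub backend; a real handler would query the order system."""
    return {"order_id": order_id, "status": "shipped"}


HANDLERS: dict[str, tuple[dict, Callable[..., dict]]] = {
    "get_order_status": (ORDER_STATUS_TOOL, get_order_status),
}


def dispatch(name: str, args: dict[str, Any]) -> dict:
    """Validate a tool call requested by the model, then run its handler."""
    if name not in HANDLERS:
        return {"error": f"unknown tool: {name}"}
    schema, handler = HANDLERS[name]
    errors = validate_args(schema, args)
    if errors:
        return {"error": "; ".join(errors)}
    return {"result": handler(**args)}
```

Returning a structured error instead of raising lets the model recover mid-conversation, for example by asking the caller to repeat an order number.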

FAQs

What are Gemini audio models?

They’re native-audio variants of Gemini (e.g., 2.5 Flash Native Audio) that listen and speak in real time, with adjustable speech output and low-latency streaming through the Live API. (Source: blog.google)

How do the updates benefit users?

They enable clearer, faster, and more natural conversations; better tool utilization during dialogue; and live speech translation for multilingual settings. (Source: blog.google)

Can businesses integrate these models easily?

Yes. Use the Gemini Live API (Vertex AI) for live conversations and the Gemini API for speech generation. You also get options for regional serving and enterprise governance. (Source: Google Cloud)

Is live translation available today?

Yes, as a beta. It is available in the Google Translate app (Android) with headphone support in select regions, and broader product/API access is planned. (Source: blog.google)

Generation Digital

Business Number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy