McKinsey AI 2025: Key Insights & Actions for Canadians

Dec 15, 2025

McKinsey’s State of AI 2025: Findings, Risks & How to Scale

The new State of AI indicates a market that's growing up: more teams use AI daily, but most companies still find it challenging to scale and demonstrate enterprise-level impact. Leaders distinguish themselves by setting growth targets, reworking workflows, and establishing measurable controls—often with AI agents at the forefront.

What’s new in 2025 vs. 2024

In 2024, McKinsey reported that 65% of organizations were regularly using generative AI—a significant increase from 2023’s early adoption. The 2025 data highlights a different issue: only about one-third report successfully scaling AI throughout the organization. The takeaway: usage has increased; however, achieving value at scale remains difficult.

Five takeaways that matter now

Scaling is the bottleneck. Many companies report pilots, but few demonstrate full transformation or EBIT impact at the enterprise level. Larger companies are more likely to be scaling, but even they cite workflow, data, and operating-model obstacles. McKinsey & Company

High performers target growth and innovation—not just cost. Eight in ten cite efficiency goals, but leaders also prioritize revenue and innovation, helping secure investment and cross-functional commitment. McKinsey & Company

Workflow redesign is essential. Top performers don’t simply “add on” models; they rebuild processes (e.g., sales strategies, support documents, software delivery lifecycles) and re-platform content/knowledge so AI can act reliably. McKinsey & Company

AI agents move from hype to practicality. The 2025 materials highlight the growing use of agents capable of planning, tool calling, and performing multi-step tasks—especially in software engineering and customer operations—when guided by policy oversight and retrieval. McKinsey & Company

Measurement is underdeveloped. Many organizations still lack strong leading KPIs for gen-AI initiatives; where tracking exists, value realization increases and risk incidents decrease. (Generation Digital view, aligned with McKinsey’s ongoing call for governance and metrics.) McKinsey & Company

Numbers the board will ask for

Adoption level: Regular gen-AI use reached approximately 65% of organizations in early 2024; the 2025 report emphasizes the scaling challenge rather than a simple adoption rate. McKinsey & Company

Economic potential: McKinsey’s baseline estimate for gen AI remains $2.6–$4.4 trillion in annual value across 63 use cases, with the majority in customer operations, marketing & sales, software engineering, and R&D. Consider these directional, not guaranteed. McKinsey & Company

Usage vs. leadership perception: Employees often use AI more than leaders assume—highlighting the need for enablement and policy rather than outright bans. McKinsey & Company

Generation Digital’s interpretation: how to move from pilots to proof

1) Start with business goals, not features. Follow the high performers: set revenue or innovation targets alongside cost targets. Translate these into outcome-focused KPIs (cycle time, conversion rate, CSAT, defect escape rate). McKinsey & Company

2) Rework a complete workflow. Select one value stream (e.g., L1→L2 support escalation, SDR→AE transition, incident→post-mortem). Redesign artefacts (playbooks, taxonomies), decision points, and tools to make AI the default option—not an optional add-on. McKinsey & Company

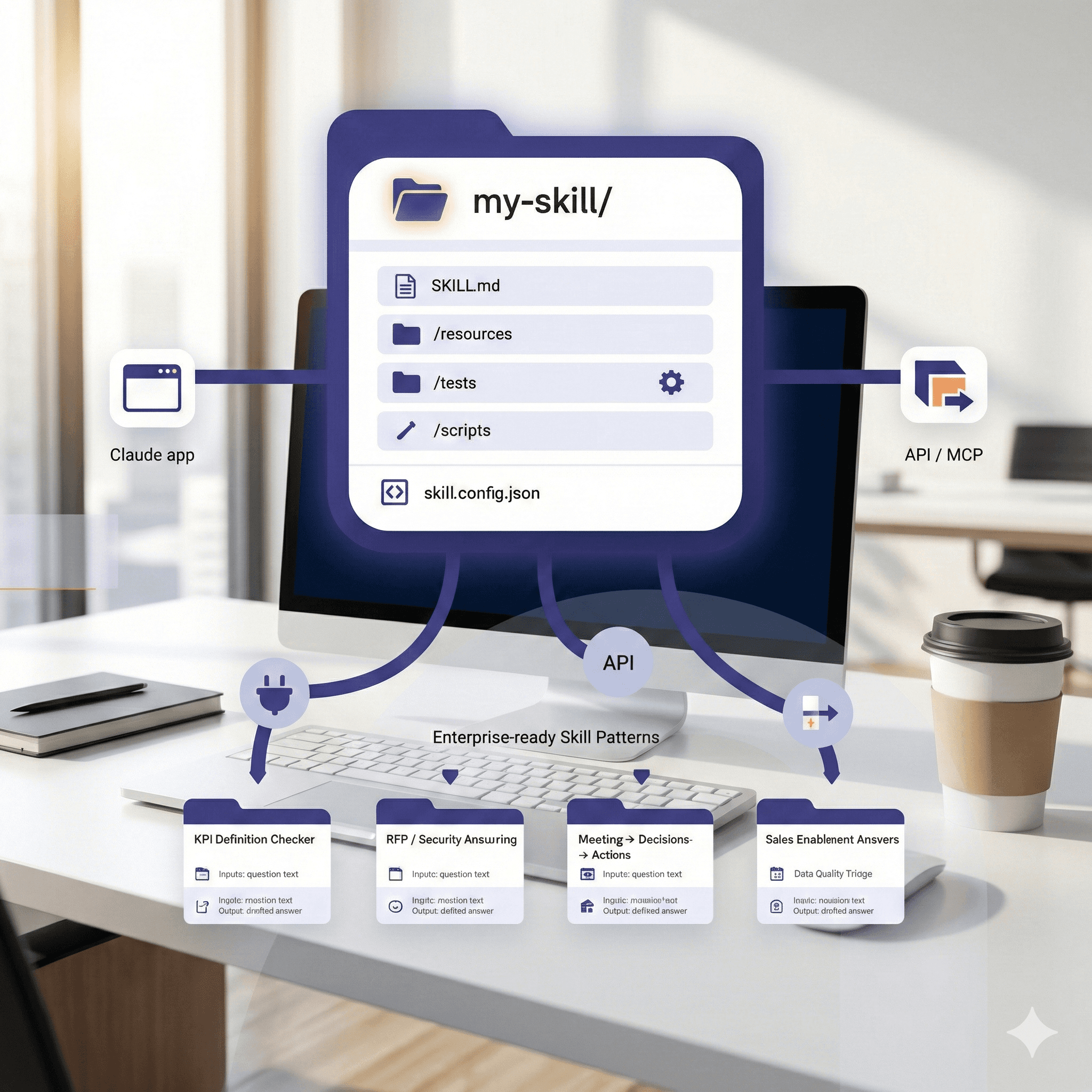

3) Create an agent-ready stack. Introduce policy-aware retrieval, tool calling, and audit trails. In engineering, connect agents to ticketing, code repositories, CI pipelines; in CX, connect to CRM, knowledge bases, and telephony. McKinsey & Company

4) Govern for safety and speed. Set up an approvals matrix by risk tier; pre-authorize tools/datasets; track prompts and outputs; define rollback processes. This shortens time-to-value while satisfying compliance needs.

5) Measure leading and lagging indicators. Monitor adoption (active users, tasks automated), quality (hallucination rate, guardrail blocks), and business outcomes (EBIT impact, revenue increase). Connect use cases to a financial model at an early stage.
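As a rough illustration of step 5, the sketch below computes a few leading indicators from a batch of task records. The `TaskRecord` fields and the `leading_indicators` function are hypothetical names chosen for this example, not part of any McKinsey framework or vendor API; a real programme would pull these signals from its own logging and review tooling.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One AI-assisted task. All field names are assumptions for illustration."""
    user_id: str
    automated: bool          # task was completed by AI without manual rework
    hallucination: bool      # a reviewer flagged an unsupported claim
    guardrail_blocked: bool  # a policy guardrail stopped the output


def leading_indicators(records: list[TaskRecord]) -> dict[str, float]:
    """Summarize adoption and quality signals for a batch of records."""
    total = len(records)
    if total == 0:
        # Avoid division by zero when no tasks were logged in the period.
        return {
            "active_users": 0,
            "automation_rate": 0.0,
            "hallucination_rate": 0.0,
            "guardrail_block_rate": 0.0,
        }
    return {
        # Adoption: distinct users and share of tasks fully automated.
        "active_users": len({r.user_id for r in records}),
        "automation_rate": sum(r.automated for r in records) / total,
        # Quality: share of tasks flagged or blocked.
        "hallucination_rate": sum(r.hallucination for r in records) / total,
        "guardrail_block_rate": sum(r.guardrail_blocked for r in records) / total,
    }
```

Lagging indicators such as EBIT impact or revenue uplift would come from the finance model rather than from task logs, which is why they are left out of this sketch.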

Where to deploy first (practical bets)

Software engineering: Code suggestions, PR drafting, test generation, incident summarization, and root-cause analysis—where McKinsey often finds significant value. McKinsey & Company

Customer operations: Assisted resolution, knowledge surfacing, next-best-action; rapid ROI when paired with well-structured content. McKinsey & Company

Sales & marketing: Personalized messaging, proposal assembly, and pipeline management with agentic workflows. McKinsey & Company

Risks & controls

Data leakage & provenance: Use focused retrieval and redaction; mark sensitive outputs.

Hallucinations: Ground every response in verified source data; track answer-acceptance and override rates.

Change fatigue: Invest in enablement. McKinsey points to gaps between perceived and actual employee usage, so provide sanctioned tools and training rather than leaving employees to unofficial AI use. McKinsey & Company
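To make the redaction control above more concrete, here is a minimal sketch that masks email addresses and phone-like numbers before text reaches a model, and reports whether anything was masked so the output can be marked as sensitive. The two regular expressions and the `redact` function are simplified assumptions for illustration; production systems typically rely on a dedicated PII-detection service rather than hand-written patterns.

```python
import re

# Simplified patterns, assumed for illustration only; they will miss many
# real-world formats and are not a substitute for a proper PII detector.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text: str) -> tuple[str, bool]:
    """Mask emails and phone-like numbers; return (redacted_text, was_sensitive)."""
    redacted, n_email = EMAIL.subn("[EMAIL]", text)
    redacted, n_phone = PHONE.subn("[PHONE]", redacted)
    # The flag lets downstream code mark the output as sensitive.
    return redacted, (n_email + n_phone) > 0


clean, sensitive = redact("Contact jane@example.com or +44 20 7946 0958")
print(clean, sensitive)
```

The boolean flag is one simple way to carry the "mark sensitive outputs" idea through a pipeline: anything flagged can be routed to stricter logging or review.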

Notes for UK-based organizations

Regulated sectors (FS, Health, Public) can still scale by introducing tiered risk models and human-in-the-loop checkpoints.

For UK multinationals, align UK GDPR with global AI policies; maintain model documentation and DPIAs where necessary.
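A tiered risk model with human-in-the-loop checkpoints can be sketched as a small lookup table. The tiers, classification rules, and approver names below are hypothetical examples, not a regulatory standard or McKinsey's method; each organization would define its own criteria with its compliance team.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical approvals matrix: which checkpoint each tier requires.
APPROVALS = {
    RiskTier.LOW: {"human_review": False, "approver": None},
    RiskTier.MEDIUM: {"human_review": True, "approver": "team_lead"},
    RiskTier.HIGH: {"human_review": True, "approver": "risk_committee"},
}


def classify(uses_personal_data: bool, customer_facing: bool) -> RiskTier:
    """Assign a tier from two illustrative risk factors."""
    if uses_personal_data and customer_facing:
        return RiskTier.HIGH
    if uses_personal_data or customer_facing:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def required_checkpoint(uses_personal_data: bool, customer_facing: bool) -> dict:
    """Look up the approval requirements for a proposed use case."""
    tier = classify(uses_personal_data, customer_facing)
    return {"tier": tier.name, **APPROVALS[tier]}


print(required_checkpoint(uses_personal_data=True, customer_facing=False))
```

Keeping the matrix as data rather than scattered conditionals means low-risk use cases can be pre-authorized quickly while higher tiers always pass through a named human approver.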

The bottom line

The 2025 State of AI communicates a clear message: AI alone won’t produce enterprise value. Value arises when leaders set growth objectives, rework workflows, adopt agent-ready systems, and measure outcomes. If your pilots aren’t affecting EBIT, the issue is likely the operating model and measurement, not the AI model itself. McKinsey & Company

FAQ

Q1: What are the biggest changes in McKinsey’s State of AI 2025?

Adoption is widespread, but scaling remains limited. High performers aim for growth as well as efficiency and restructure workflows, often with AI agents, to capture value. McKinsey & Company

Q2: What percentage of organizations use generative AI?

McKinsey reported approximately 65% regular gen-AI use in early 2024; the 2025 report shifts focus to the challenges of scaling rather than headline adoption. McKinsey & Company

Q3: Where is the economic value?

Largest opportunities: customer operations, marketing & sales, software engineering, and R&D—contributing to an estimated $2.6–$4.4T annual impact potential. McKinsey & Company

Q4: How should we measure AI success?

Track adoption and quality leading indicators plus business KPIs (e.g., CSAT, conversion, cycle time, EBIT). Scaling without metrics is where programs falter.

Generation

Digital

Business Number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
