Prompt Engineering for Business - The 2026 Practical Guide

AI

Jan 5, 2026

Not sure where to start with AI?

Assess readiness, risk, and priorities in under an hour.

➔ Download Our Free AI Readiness Pack

Why this matters now

Generative AI is embedded across knowledge work. The difference between ad‑hoc prompts and designed prompts is the difference between novelty and repeatable ROI. This guide gives business teams a practical system to brief AI reliably, measure quality, and scale safe usage—complete with copy‑paste templates and evaluation criteria.

Outcomes

By the end, your teams will be able to:

Write clear, constrained prompts that reflect business context and compliance.

Select the right prompt pattern for the task (summarise, decide, transform, generate, analyse, plan).

Measure output quality with a shared rubric.

Operationalise prompts: versioning, testing, approvals, and analytics.

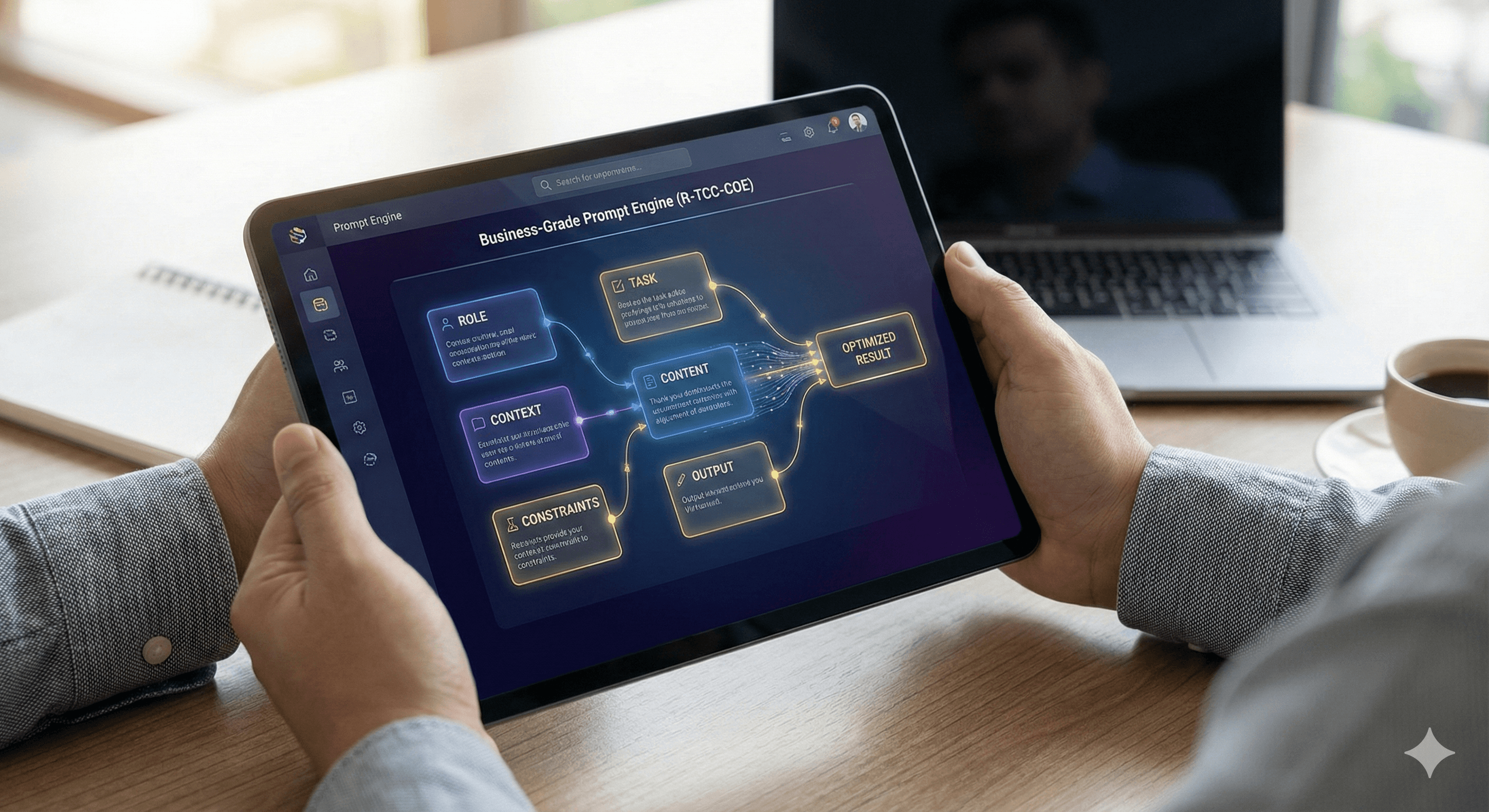

The Anatomy of a Business‑Grade Prompt (R-TCC-COE)

Use this structure for most everyday work tasks.

Role – Who the model is simulating.

Task – What to do, in one sentence.

Context – Audience, brand, channel, locale.

Content – The source data to use (paste, attach, or reference).

Constraints – Rules: tone, length, style, compliance.

Output – Exact format required (JSON schema, table, bullets).

Evaluation – How success is judged (criteria, tests, or checklists).

Template

Role: [discipline + seniority].

Task: [action verb + outcome].

Context: [audience, channel, brand rules, locale].

Content: [paste data or point to files].

Constraints: [Do/Don’t, sources only, citations, word limits].

Output: [format + fields].

Evaluation: [acceptance criteria or scoring rubric].
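If your team keeps prompts in a shared library, the template can be filled programmatically so that no field is silently dropped. The sketch below is a minimal illustration, assuming prompts are stored as labelled plain text; `build_prompt` and its keyword names are hypothetical, not part of any product.

```python
# Field order follows the R-TCC-COE anatomy above.
FIELDS = ("Role", "Task", "Context", "Content", "Constraints", "Output", "Evaluation")


def build_prompt(**fields: str) -> str:
    """Assemble a labelled R-TCC-COE prompt, refusing to build one with blank fields."""
    missing = [name for name in FIELDS if not fields.get(name.lower(), "").strip()]
    if missing:
        raise ValueError(f"Missing prompt fields: {', '.join(missing)}")
    return "\n".join(f"{name}: {fields[name.lower()].strip()}" for name in FIELDS)


prompt = build_prompt(
    role="Strategy analyst for a UK SaaS firm.",
    task="Produce an executive brief and a go/no-go recommendation.",
    context="Audience is the COO; British English; risk-aware.",
    content="<paste document here>",
    constraints="250-300 words; include 3 quantified risks; cite page refs.",
    output="Markdown with sections: Summary, Options, Risks, Recommendation.",
    evaluation="Meets length; 3 risks with impact/likelihood; cites page refs.",
)
print(prompt)
```

Raising an error on a blank field is a deliberate choice: a prompt missing its Evaluation or Constraints line is exactly the "unbounded output" problem this guide warns against.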

Prompt Patterns & Examples

Below are reusable patterns with examples and acceptance criteria. Copy, adapt, and save to your workspace projects.

1) Summarise→Decide (executive brief)

Use when: Turning long docs into board‑ready decisions.

Prompt

Role: You are a strategy analyst for a UK SaaS firm.

Task: Produce an executive brief and a go/no‑go recommendation.

Context: Audience is the COO; British English; risk‑aware.

Content: <>

Constraints: 250–300 words; include 3 quantified risks; cite document page refs.

Output: Markdown with sections: Summary, Options, Risks, Recommendation.

Evaluation: Meets length; includes 3 risks with impact/likelihood; cites page refs.

Acceptance criteria (scored 0–2 each; target ≥8/10)

Accuracy of facts

Relevance to COO priorities

Clarity & brevity

Risk specificity with numbers

Actionable recommendation
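Some of the Evaluation line (length, required sections, three risks, page refs) is mechanical and can be checked before a human scores accuracy and relevance. A rough sketch, assuming the brief comes back as Markdown with `#` headings, each risk as a bullet, and page refs written like "(p. 12)"; those formats are assumptions to pin down in the prompt.

```python
import re

REQUIRED_SECTIONS = ("Summary", "Options", "Risks", "Recommendation")
# Hypothetical citation style such as "(p. 12)" or "(pp. 4-5)"; match your own convention.
PAGE_REF = re.compile(r"\(pp?\.\s?\d+")


def bullets_under(lines: list[str], title: str) -> int:
    """Count bullet lines between a '#' heading called `title` and the next heading."""
    inside, count = False, 0
    for line in lines:
        if line.startswith("#"):
            inside = line.lstrip("#").strip() == title
        elif inside and line.lstrip().startswith(("- ", "* ")):
            count += 1
    return count


def check_brief(markdown: str) -> dict[str, bool]:
    """Mechanical checks for the executive brief; accuracy and relevance need a human."""
    lines = markdown.splitlines()
    headings = {line.lstrip("#").strip() for line in lines if line.startswith("#")}
    body = " ".join(line for line in lines if not line.startswith("#"))
    word_count = len(body.split())  # rough: whitespace-delimited tokens, headings excluded
    return {
        "length_ok": 250 <= word_count <= 300,
        "sections_ok": all(s in headings for s in REQUIRED_SECTIONS),
        "three_risks": bullets_under(lines, "Risks") == 3,
        "has_page_refs": bool(PAGE_REF.search(body)),
    }
```

A brief that fails any of these checks can go straight back to the model; only passing drafts need a reviewer's time.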

2) Transform→Standardise (policy clean‑up)

Use when: Converting messy text into approved templates.

Prompt

Role: Compliance editor.

Task: Rewrite into the company’s policy template.

Context: UK employment law; inclusive language.

Content: <>

Constraints: Preserve legal meaning; remove US‑only references; flag gaps.

Output: 1) Clean policy; 2) Change log table; 3) Gap list.

Evaluation: Legal meaning preserved; gaps flagged with rationale.

Acceptance test

If a clause is ambiguous, the model must add a “REVIEW” tag rather than resolving it.
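To enforce this test before sign-off, every tagged line can be listed for the compliance reviewer. A minimal sketch; the guide only says "a REVIEW tag", so the exact formats `[REVIEW]` and `REVIEW:` used here are assumptions that should be fixed in the prompt itself.

```python
import re

# Assumed tag formats; state the exact one in your prompt so the check is reliable.
REVIEW_TAG = re.compile(r"\[REVIEW\]|\bREVIEW:")


def review_items(policy_text: str) -> list[tuple[int, str]]:
    """Return (line number, line) pairs that a human must resolve before sign-off."""
    return [
        (number, line.strip())
        for number, line in enumerate(policy_text.splitlines(), start=1)
        if REVIEW_TAG.search(line)
    ]
```

An empty result does not prove the policy is unambiguous; it only shows the model flagged nothing, so the human review still applies.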

3) Generate→Persuade (sales email sequence)

Use when: Outbound copy that matches brand voice.

Prompt

Role: Senior B2B copywriter in cybersecurity.

Task: Draft a 3‑email sequence to CFOs at FTSE 250 companies.

Context: Brand voice = “Warm Intelligence”; avoid hype.

Content: ICP notes + 3 case studies (paste bullets).

Constraints: Plain language, no jargon; subject lines ≤7 words; UK spelling.

Output: 3 emails, each with a subject line and a 120–150‑word body; vary the CTA across the sequence.

Evaluation: Voice match; value prop accuracy; no spammy claims; readability (FK grade ≤8).

Quality checks

Run a readability pass and a hallucination check against the pasted case studies.
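The readability pass can be partly automated. Flesch-Kincaid grade is 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) - 15.59; the sketch below applies that formula with a crude vowel-group syllable counter, which is an approximation (a library such as `textstat`, with its `flesch_kincaid_grade` function, gives better estimates). It also checks the subject-line and word-count constraints. The hallucination check against the case studies still needs a person.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, dropping a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade(text: str) -> float:
    """Flesch-Kincaid grade: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / sentences + 11.8 * syllables / len(words) - 15.59


def check_email(subject: str, body: str) -> dict[str, bool]:
    """Check one email against the pattern's constraints (subject <=7 words, 120-150 words, FK <=8)."""
    body_words = len(re.findall(r"[A-Za-z0-9']+", body))
    return {
        "subject_ok": len(subject.split()) <= 7,
        "length_ok": 120 <= body_words <= 150,
        "readability_ok": fk_grade(body) <= 8,
    }
```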

4) Analyse→Explain (data analysis with files)

Use when: Asking the model to analyse spreadsheets or CSVs.

Prompt

Role: Finance analyst.

Task: Analyse month‑end variances and explain drivers.

Context: Audience = Finance Director; highlight risks/opportunities.

Content: Attach P&L_Jan–Dec_2025.csv and Budget_2025.csv.

Constraints: Use only attached files; show formulas; no external assumptions.

Output: Table: Line Item | Budget | Actual | Variance | Driver (1 sentence); then a 150‑word narrative.

Evaluation: Variances match files; explanations reference file rows.

Acceptance test

Spot‑check 3 rows for arithmetic correctness before sign‑off.
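The spot-check can be scripted against the model's own table. A minimal sketch, assuming the model returns a Markdown pipe table with the column names above and that Variance is defined as Actual - Budget; that sign convention is an assumption, so agree it with Finance before relying on the check. `parse_table` and `spot_check` are hypothetical helpers.

```python
import random

NUMERIC = ("Budget", "Actual", "Variance")


def parse_table(markdown_table: str) -> list[dict]:
    """Parse the model's pipe table (Line Item | Budget | Actual | Variance | Driver)."""
    lines = [line.strip().strip("|") for line in markdown_table.strip().splitlines()]
    header = [cell.strip() for cell in lines[0].split("|")]
    rows = []
    for line in lines[1:]:
        if set(line.replace("|", "").strip()) <= set("-: "):
            continue  # skip the |---|---| separator row (and blank lines)
        row = dict(zip(header, (cell.strip() for cell in line.split("|"))))
        for key in NUMERIC:
            # Handles "£12,000"-style cells; negatives in brackets would need extra handling.
            row[key] = float(row[key].replace("£", "").replace(",", ""))
        rows.append(row)
    return rows


def spot_check(rows: list[dict], sample_size: int = 3, tolerance: float = 0.01, seed=None) -> list[str]:
    """Recompute Actual - Budget for a random sample; return line items that disagree."""
    rng = random.Random(seed)
    sample = rng.sample(rows, min(sample_size, len(rows)))
    return [
        row["Line Item"]
        for row in sample
        if abs((row["Actual"] - row["Budget"]) - row["Variance"]) > tolerance
    ]
```

This only confirms the table is internally consistent; the Budget and Actual figures themselves still need to be traced back to the attached files.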

5) Plan→Breakdown (project planning)

Use when: Converting goals to a delivery plan.

Prompt

Role: Programme manager (PRINCE2/Agile).

Task: Build a 90‑day plan for an AI rollout.

Context: 50‑person UK retailer; SSO and data governance required.

Content: 5 high‑level goals (paste).

Constraints: Week‑by‑week; include owners, risks, and success metrics.

Output: Markdown table + RAID log + weekly status template.

Evaluation: Dependencies explicit; measurable milestones; risks mitigated.
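A 90-day plan covers 13 weekly rows (90 ÷ 7 rounded up), and a quick structural check catches missing weeks or blank owners before anyone debates content. A sketch, assuming the model's plan has been exported as one dictionary per week; the field names `week`, `owner`, `risks` and `success_metric` are hypothetical.

```python
import math
from datetime import date, timedelta

# Hypothetical field names for each weekly row of the model's plan.
REQUIRED_KEYS = ("owner", "risks", "success_metric")


def week_starts(start: date, days: int = 90) -> list[tuple[int, date]]:
    """(week number, start date) for every week needed to cover the period."""
    weeks = math.ceil(days / 7)  # 90 days -> 13 weekly rows
    return [(n + 1, start + timedelta(weeks=n)) for n in range(weeks)]


def plan_gaps(rows: list[dict], days: int = 90) -> dict[int, list[str]]:
    """Map week number -> problems: a missing week, or empty owner/risks/metric fields."""
    by_week = {row.get("week"): row for row in rows}
    gaps = {}
    for week in range(1, math.ceil(days / 7) + 1):
        row = by_week.get(week)
        if row is None:
            gaps[week] = ["missing week"]
            continue
        empty = [key for key in REQUIRED_KEYS if not row.get(key)]
        if empty:
            gaps[week] = empty
    return gaps
```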

The Business Prompt Quality Rubric (BPQR)

Score each of the seven criteria 0–2 (Poor / Adequate / Strong), for a maximum of 14. Target ≥8/10 once the total is rescaled to 10, which means at least 12/14.

Factual Accuracy – Matches provided sources; no invented data.

Relevance – Directly serves the stated audience and goal.

Clarity – Short sentences; plain English; unambiguous instructions.

Structure & Format – Output exactly as specified (JSON/table/sections).

Constraints Compliance – Tone, length, style guides and legal caveats followed.

Verifiability – Citations, page refs, or file row refs included where asked.

Safety & Privacy – No PII leakage; no policy‑violating content.

Tip: Bake this rubric into your prompts as the Evaluation step. Ask the model to self‑score, then improve until the rescaled score reaches ≥8/10 (at least 12/14).
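Because BPQR has seven criteria, the raw total runs to 14, and comparing it with an "out of 10" target needs rescaling. The sketch below does that explicitly; reading the ≥8/10 target as a proportion of the maximum is an assumption, and `bpqr` is a hypothetical helper for recording reviewer scores.

```python
CRITERIA = (
    "Factual Accuracy", "Relevance", "Clarity", "Structure & Format",
    "Constraints Compliance", "Verifiability", "Safety & Privacy",
)


def bpqr(scores: dict[str, int], target: float = 8.0) -> tuple[int, float, bool]:
    """Return (raw total out of 14, total rescaled to 10, whether it meets the target)."""
    missing = set(CRITERIA) - set(scores)
    if missing:
        raise ValueError(f"Unscored criteria: {sorted(missing)}")
    if any(score not in (0, 1, 2) for score in scores.values()):
        raise ValueError("Each criterion is scored 0, 1 or 2")
    raw = sum(scores[c] for c in CRITERIA)
    scaled = raw * 10 / (2 * len(CRITERIA))
    return raw, scaled, scaled >= target
```

For example, two criteria at 1 and the rest at 2 gives 12/14, about 8.6/10, which passes; a third criterion at 1 drops the total to 11/14, about 7.9/10, which does not.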

Anti‑Patterns to Avoid

Vague roles: “Act as an expert” without domain, seniority, and audience.

Missing context: No brand voice, region, or compliance notes.

Open‑ended tasks: “Write something great about X.” Be specific.

No source data: Asking for analysis without attaching files or quoting text.

Unbounded outputs: No format, length, or evaluation criteria.

Hidden requirements: Expectations not stated in the prompt are unlikely to be met and tend to fail QA.
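Several of these anti-patterns (missing context, no source data, unbounded outputs) show up as absent or empty fields, which a simple linter can catch before a prompt is saved to a shared library. A sketch, assuming prompts use the labelled `Field: value` layout from the template; judging whether a role is specific enough still needs a human.

```python
import re

FIELDS = ("Role", "Task", "Context", "Content", "Constraints", "Output", "Evaluation")
PLACEHOLDERS = {"<>", "[]", "<<>>"}  # unfilled template slots count as empty


def lint_prompt(prompt: str) -> list[str]:
    """List R-TCC-COE fields that are absent or empty in a labelled prompt."""
    problems = []
    for field in FIELDS:
        match = re.search(rf"^{field}:[ \t]*(.*)$", prompt, flags=re.MULTILINE | re.IGNORECASE)
        if match is None:
            problems.append(f"{field}: missing")
        elif match.group(1).strip() in PLACEHOLDERS or not match.group(1).strip():
            problems.append(f"{field}: empty")
    return problems
```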

Advanced Techniques

A) Chain‑of‑Thought Guardrails (business‑safe)

Ask for structured reasoning summaries without revealing sensitive internals:

“Provide a numbered reasoning summary (max 5 bullets) focused on the facts from the attached files; do not disclose internal model chain‑of‑thought.”

B) Few‑Shot Examples

Give 1–3 concise, high‑quality examples that mirror your desired output. Keep examples short; highlight boundaries (good/bad examples) to reduce drift.
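When prompts are sent through an API rather than typed into a chat window, few-shot examples are usually passed as prior conversation turns. The sketch below assumes the common `{"role": ..., "content": ...}` message shape used by many chat-completion APIs; check your provider's format. Putting the bad example in the instructions rather than as an assistant turn is a judgment call: it tells the model what to avoid instead of showing it as a pattern to copy.

```python
from typing import Optional


def few_shot_messages(
    instructions: str,
    examples: list[dict[str, str]],
    new_input: str,
    bad_example: Optional[str] = None,
) -> list[dict[str, str]]:
    """Build a chat-style message list with 1-3 worked examples before the real input."""
    if not 1 <= len(examples) <= 3:
        raise ValueError("Use 1-3 concise examples")
    system = instructions
    if bad_example:
        # Counter-example lives in the instructions, clearly labelled as something to avoid.
        system += f"\n\nDo NOT produce output like this:\n{bad_example}"
    messages = [{"role": "system", "content": system}]
    for example in examples:
        messages.append({"role": "user", "content": example["input"]})
        messages.append({"role": "assistant", "content": example["output"]})
    messages.append({"role": "user", "content": new_input})
    return messages
```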

C) Tooling & Data Controls

When using file uploads, projects, web search, or apps, explicitly constrain the data:

“Use only the attached files and the approved knowledge base. Do not browse the public web.”

D) Output Contracts (JSON)

For integrations, specify a JSON schema right in the prompt:

{ "title": "SalesEmail", "type": "object", "properties": { "subject": {"type": "string"}, "body": {"type": "string"}, "cta": {"type": "string"} }, "required": ["subject", "body", "cta"] }

Add: “Validate JSON before responding; do not include markdown fences.”
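Asking the model to validate its JSON does not guarantee it will, so the receiving integration should check the contract too. A minimal standard-library sketch for the SalesEmail schema above; it checks only the three required string fields (the schema does not forbid extra keys). For full JSON Schema validation, the third-party `jsonschema` package provides `jsonschema.validate(instance, schema)`.

```python
import json

FENCE = "`" * 3  # three backticks, in case the model wraps its answer in a Markdown fence
REQUIRED = ("subject", "body", "cta")


def parse_sales_email(raw: str) -> dict:
    """Parse and check a SalesEmail response; raises ValueError on any contract breach."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Defensive: drop the opening fence line and the closing fence if present.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]
    data = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    for key in REQUIRED:
        if not isinstance(data.get(key), str):
            raise ValueError(f"Field '{key}' is missing or not a string")
    return data
```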

E) Self‑Critique → Improve Loop

End prompts with: “Self‑evaluate using BPQR; list 3 improvements; apply them and present only the improved version.”
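Inside a single prompt, the model does the self-critique itself. In an automated workflow the same loop can run outside the model, with a cap on rounds because self-scores are not guaranteed to improve. A sketch in which `score` and `improve` are hypothetical callables (for example, a BPQR-scoring model call and a rewrite call); it keeps the best-scoring draft rather than the last one.

```python
from typing import Callable


def refine(
    draft: str,
    score: Callable[[str], float],
    improve: Callable[[str], str],
    target: float = 8.0,
    max_rounds: int = 3,
) -> tuple[str, float]:
    """Improve a draft until it meets the target or max_rounds is used; return the best draft."""
    best, best_score = draft, score(draft)
    current = draft
    for _ in range(max_rounds):
        if best_score >= target:
            break
        current = improve(current)
        current_score = score(current)
        if current_score > best_score:
            best, best_score = current, current_score
    return best, best_score
```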

Role‑Based Prompt Packs

Sales (B2B)

Prospecting email from a case study

Role: Senior AE for [industry].

Task: Draft a first‑touch email using the case study.

Context: CFO audience; UK English; 120–140 words; value‑led.

Content: <<Paste 5–7 bullet points from case study>>

Constraints: No claims without numbers; add one relevant metric.

Output: Subject + body + CTA; reading age ≤8.

Evaluation: Truthful, concise, specific to the case study.

Marketing

Repurpose webinar → blog + social

Role: Content strategist.

Task: Turn transcript into a 900‑word blog and 5 LinkedIn posts.

Context: Brand voice “Warm Intelligence”; avoid hype.

Content: <>

Constraints: Attribute quotes; British spelling; include 3 sub‑headings.

Output: Blog (markdown) + 5 posts (≤280 chars each).

Evaluation: Originality; quote accuracy; SEO‑friendly headings.

HR / People

Job description normalisation

Role: HRBP.

Task: Convert JD to the standard template.

Context: Inclusive language; UK employment norms.

Content: <>

Constraints: Remove biased phrasing; flag unrealistic requirements.

Output: Template sections + risk flags.

Evaluation: Bias reduced; role clarity improved.

Finance

Vendor comparison

Role: Procurement analyst.

Task: Compare 3 vendor proposals.

Context: Mid‑market UK company; 2‑year TCO; risk‑based.

Content: <>

Constraints: Use only provided documents; show a comparison table.

Output: Table + 150‑word recommendation.

Evaluation: Numbers correct; assumptions stated.
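"Numbers correct" is easiest to verify if the 2-year TCO arithmetic is reproduced independently of the model. A sketch under simple assumptions: one-off costs plus two years of recurring licence and support, with no discounting or price escalation. The cost categories and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    vendor: str
    one_off: float          # implementation, migration and training (hypothetical split)
    annual_licence: float
    annual_support: float


def tco(p: Proposal, years: int = 2) -> float:
    """Undiscounted TCO: one-off costs plus recurring costs for each year of the term."""
    return p.one_off + years * (p.annual_licence + p.annual_support)


# Hypothetical proposals for illustration only.
proposals = [
    Proposal("Vendor A", 20_000, 30_000, 5_000),
    Proposal("Vendor B", 5_000, 40_000, 4_000),
    Proposal("Vendor C", 0, 42_000, 2_000),
]
for p in sorted(proposals, key=tco):
    print(f"{p.vendor}: £{tco(p):,.0f}")
# Vendor C: £88,000
# Vendor A: £90,000
# Vendor B: £93,000
```

Note how the cheapest upfront option (Vendor C) and the lowest annual licence (Vendor A) rank differently once both years are included, which is the point of asking for TCO rather than headline price.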

Product / Engineering

Requirements to user stories

Role: Product manager.

Task: Turn requirements into INVEST user stories.

Context: Web app; accessibility and security non‑functional reqs.

Content: <>

Constraints: Add acceptance criteria and edge cases.

Output: User stories in a table + risk list.

Evaluation: Measurable ACs; risk coverage.

Safety, Privacy & Compliance Prompts

Add these lines to prompts where applicable:

“Exclude personal data (PII) unless explicitly provided.”

“If unsure, ask for clarification before proceeding.”

“Cite file names and line/page numbers for all facts.”

“If content could breach policy or law, halt and explain the risk.”

Red‑flag checks (ask the model to run):

Does the output contain PII or confidential data?

Are any claims unverifiable from the provided sources?

Is there ambiguous or discriminatory language?
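Alongside asking the model, a cheap pre-scan can catch obvious PII before text is pasted into a prompt or published. The patterns below (email addresses and UK mobile numbers) are illustrative assumptions only: they miss names, addresses and many other identifiers, and can flag false positives, so treat this as a first pass rather than a data-loss-prevention control.

```python
import re

# Illustrative patterns only; use a proper DLP tool for anything beyond a first pass.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "uk_mobile": re.compile(r"(?:\+44\s?7\d{3}|\b07\d{3})\s?\d{3}\s?\d{3}\b"),
}


def pii_findings(text: str) -> dict[str, list[str]]:
    """Return matches per pattern, omitting patterns with no hits."""
    found = {name: pattern.findall(text) for name, pattern in PII_PATTERNS.items()}
    return {name: hits for name, hits in found.items() if hits}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] before sharing the text."""
    for name, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{name.upper()}]", text)
    return text
```

For example, "Contact Jane at jane.doe@example.co.uk or 07700 900123." redacts to "Contact Jane at [EMAIL] or [UK_MOBILE]." The name "Jane" is not caught, which is why the model-run and human checks above still matter.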

Measuring ROI from Prompting

Track before/after on:

Time saved per task (minutes).

Quality score via BPQR.

Error rate (rework/defects).

Adoption (active users, prompts reused).

Simple pilot target: 3 use‑cases × 10 users × 4 weeks → publish a one‑page impact report.
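For the impact report, time saved is tasks completed × minutes saved per task. A worked sketch for the 3 use-cases × 10 users × 4 weeks pilot; every figure here is hypothetical and should be replaced with measured before/after timings, where "after" includes the time spent reviewing the AI output.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    tasks_per_user_per_week: float
    minutes_before: float   # measured baseline per task
    minutes_after: float    # with AI, including human review time


def hours_saved(uc: UseCase, users: int = 10, weeks: int = 4) -> float:
    """Total hours saved across the pilot for one use-case."""
    tasks = uc.tasks_per_user_per_week * users * weeks
    return tasks * (uc.minutes_before - uc.minutes_after) / 60


# Hypothetical pilot figures for illustration only.
pilot = [
    UseCase("Executive briefs", 2, 90, 45),
    UseCase("Sales emails", 10, 20, 8),
    UseCase("Variance commentary", 1, 240, 150),
]
for uc in pilot:
    print(f"{uc.name}: {hours_saved(uc):.0f} hours saved")
print(f"Total: {sum(hours_saved(uc) for uc in pilot):.0f} hours")
# Executive briefs: 60 hours saved
# Sales emails: 80 hours saved
# Variance commentary: 60 hours saved
# Total: 200 hours
```

Report time saved next to BPQR scores and error rates: hours saved on outputs that then need rework are not real savings.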

Next Steps

Ready to scale prompting beyond ad‑hoc experiments?

Book a 60‑minute Prompt Engineering Accelerator

Map 3–5 high‑impact use‑cases per function

Turn them into reviewed, versioned prompts with BPQR

Leave with a rollout checklist, templates, and success metrics

FAQ

1) What’s the minimum structure every prompt should have?

Role, Task, Context, Content, Constraints, Output, Evaluation (R‑TCC‑COE). Use the template in the Anatomy of a Business‑Grade Prompt section.

2) How do we reduce hallucinations?

Attach source data, forbid external browsing when not needed, demand citations/page or row refs, and require a self‑critique step before final output.

3) How do we measure quality consistently?

Use the BPQR rubric (0–2 each for Accuracy, Relevance, Clarity, Structure, Constraints, Verifiability, Safety, for a maximum of 14). Target ≥8/10 after rescaling (at least 12/14), and promote only prompts that meet the target as “gold” prompts.

4) When should we fine‑tune vs improve prompts?

Fine‑tune when you have consistent, high‑quality examples and the same task repeats at scale. Otherwise, prefer prompt and workflow improvements plus few‑shot examples.

5) Is this safe with ChatGPT for Business?

Yes, provided you pair prompts with appropriate data controls, avoid PII unless necessary, and apply workspace policies. ChatGPT Business does not train on your data by default.

Why this matters now

Generative AI is embedded across knowledge work. The difference between ad‑hoc prompts and designed prompts is the difference between novelty and repeatable ROI. This guide gives business teams a practical system to brief AI reliably, measure quality, and scale safe usage—complete with copy‑paste templates and evaluation criteria.

Outcomes

By the end, your teams will be able to:

Write clear, constrained prompts that reflect business context and compliance.

Select the right prompt pattern for the task (summarise, generate, transform, plan, decide).

Measure output quality with a shared rubric.

Operationalise prompts: versioning, testing, approvals, and analytics.

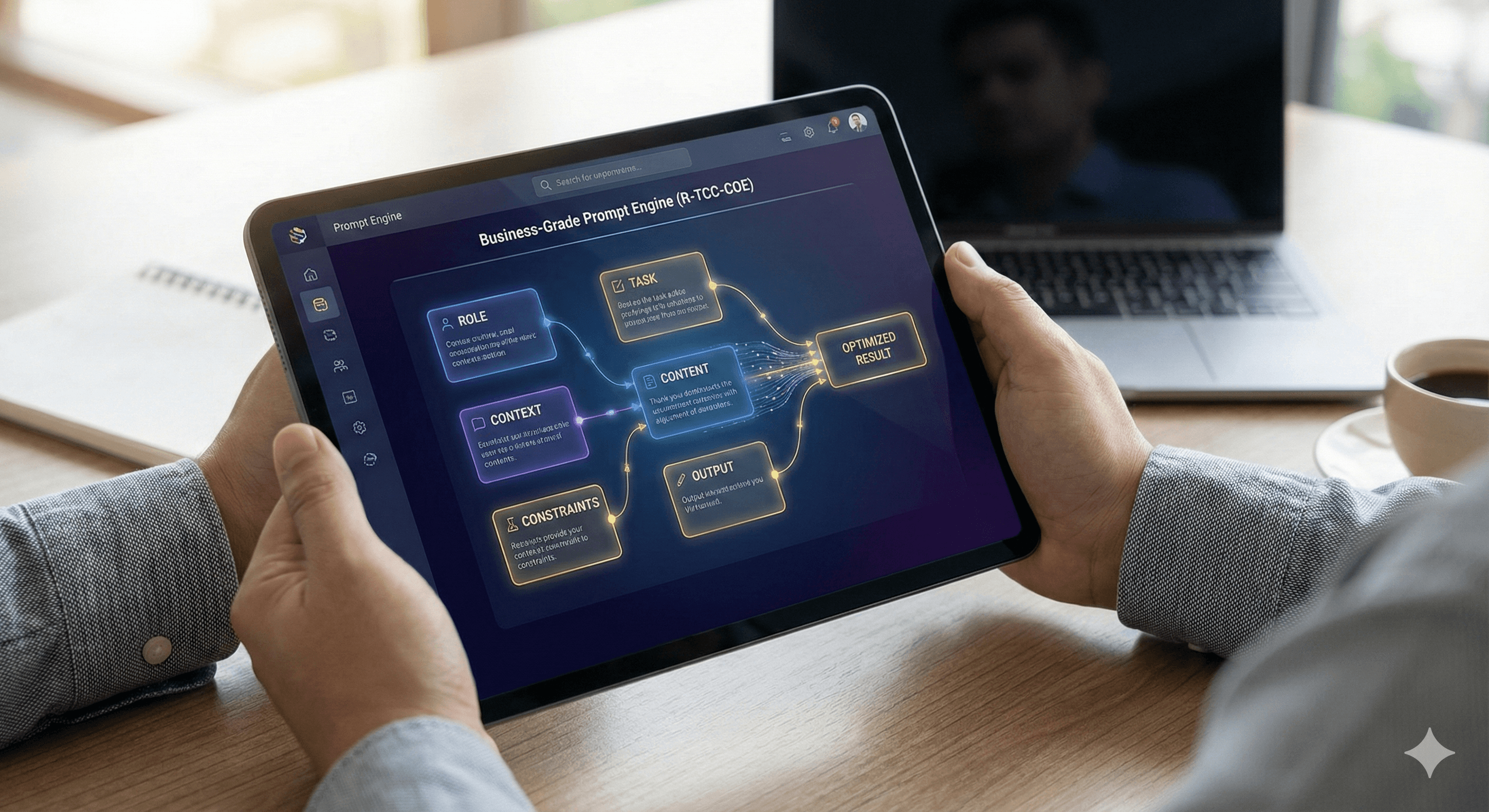

The Anatomy of a Business‑Grade Prompt (R-TCC-COE)

Use this structure for 90% of work tasks.

Role – Who the model is simulating.

Task – What to do, in one sentence.

Context – Audience, brand, channel, constraints.

Content – The source data to use (paste, attach, or reference).

Constraints – Rules: tone, length, style, compliance.

Output – Exact format required (JSON schema, table, bullets).

Evaluation – How success is judged (criteria, tests, or checklists).

Template

Role: [discipline + seniority].

Task: [action verb + outcome].

Context: [audience, channel, brand rules, locale].

Content: [paste data or point to files].

Constraints: [Do/Don’t, sources only, citations, word limits].

Output: [format + fields].

Evaluation: [acceptance criteria or scoring rubric].

Prompt Patterns & Examples

Below are reusable patterns with examples and acceptance criteria. Copy, adapt, and save to your workspace projects.

1) Summarise→Decide (executive brief)

Use when: Turning long docs into board‑ready decisions.

Prompt

Role: You are a strategy analyst for a UK SaaS firm.

Task: Produce an executive brief and a go/no‑go recommendation.

Context: Audience is the COO; British English; risk‑aware.

Content: <>

Constraints: 250–300 words; include 3 quantified risks; cite document page refs.

Output: Markdown with sections: Summary, Options, Risks, Recommendation.

Evaluation: Meets length; includes 3 risks with impact/likelihood; cites page refs.

Acceptance criteria (scored 0–2 each; target ≥8/10)

Accuracy of facts

Relevance to COO priorities

Clarity & brevity

Risk specificity with numbers

Actionable recommendation

2) Transform→Standardise (policy clean‑up)

Use when: Converting messy text into approved templates.

Prompt

Role: Compliance editor.

Task: Rewrite into the company’s policy template.

Context: UK employment law; inclusive language.

Content: <>

Constraints: Preserve legal meaning; remove US‑only references; flag gaps.

Output: 1) Clean policy; 2) Change log table; 3) Gap list.

Evaluation: Legal meaning preserved; gaps flagged with rationale.

Acceptance test

If a clause is ambiguous, the model must add a “REVIEW” tag rather than resolving it.

3) Generate→Persuade (sales email sequence)

Use when: Outbound copy that matches brand voice.

Prompt

Role: Senior B2B copywriter in cybersecurity.

Task: Draft a 3‑email sequence to CFOs in FTSE 250.

Context: Brand voice = “Warm Intelligence”; avoid hype.

Content: ICP notes + 3 case studies (paste bullets).

Constraints: Plain language, no jargon; subject lines ≤7 words; UK spelling.

Output: 3 emails with subject, 120–150 words each; CTA variations.

Evaluation: Voice match; value prop accuracy; no spammy claims; readability (FK grade ≤8).

Quality checks

Run a readability pass and a hallucination check against the pasted case studies.

4) Analyse→Explain (data analysis with files)

Use when: Asking the model to analyse spreadsheets or CSVs.

Prompt

Role: Finance analyst.

Task: Analyse month‑end variances and explain drivers.

Context: Audience = Finance Director; highlight risks/opportunities.

Content: Attach:

P&L_Jan–Dec_2025.csvandBudget_2025.csv.Constraints: Use only attached files; show formulas; no external assumptions.

Output: Table: Line Item | Budget | Actual | Variance | Driver (1 sentence);

then a 150‑word narrative.Evaluation: Variances match files; explanations reference file rows.

Acceptance test

Spot‑check 3 rows for arithmetic correctness before sign‑off.

5) Plan→Breakdown (project planning)

Use when: Converting goals to a delivery plan.

Prompt

Role: Programme manager (PRINCE2/Agile).

Task: Build a 90‑day plan for an AI rollout.

Context: 50‑person UK retailer; SSO and data governance required.

Content: 5 high‑level goals (paste).

Constraints: Week‑by‑week; include owners, risks, and success metrics.

Output: Markdown table + RAID log + weekly status template.

Evaluation: Dependencies explicit; measurable milestones; risks mitigated.

The Business Prompt Quality Rubric (BPQR)

Score each criterion 0–2 (Poor / Adequate / Strong). Target ≥8/10; Mandatory criteria marked .

Factual Accuracy – Matches provided sources; no invented data.

Relevance – Directly serves the stated audience and goal.

Clarity – Short sentences; plain English; unambiguous instructions.

Structure & Format – Output exactly as specified (JSON/table/sections).

Constraints Compliance – Tone, length, style guides and legal caveats followed.

Verifiability – Citations, page refs, or file row refs included where asked.

Safety & Privacy – No PII leakage; no policy‑violating content.

Tip: Bake this rubric into your prompts as the Evaluation step. Ask the model to self‑score, then improve until ≥8/10.

Anti‑Patterns to Avoid

Vague roles: “Act as an expert” without domain, seniority, and audience.

Missing context: No brand voice, region, or compliance notes.

Open‑ended tasks: “Write something great about X.” Be specific.

No source data: Asking for analysis without attaching files or quoting text.

Unbounded outputs: No format, length, or evaluation criteria.

Hidden requirements: Expectations not stated in the prompt inevitably fail QA.

Advanced Techniques

A) Chain‑of‑Thought Guardrails (business‑safe)

Ask for structured reasoning summaries without revealing sensitive internals:

“Provide a numbered reasoning summary (max 5 bullets) focused on the facts from the attached files; do not disclose internal model chain‑of‑thought.”

B) Few‑Shot Examples

Give 1–3 concise, high‑quality examples that mirror your desired output. Keep examples short; highlight boundaries (good/bad examples) to reduce drift.

C) Tooling & Data Controls

When using file uploads, projects, web search, or apps, explicitly constrain the data:

“Use only the attached files and the approved knowledge base. Do not browse the public web.”

D) Output Contracts (JSON)

For integrations, specify a JSON schema right in the prompt:

{ "title": "SalesEmail", "type": "object", "properties": { "subject": {"type": "string"}, "body": {"type": "string"}, "cta": {"type": "string"} }, "required": ["subject", "body", "cta"] }

Add: “Validate JSON before responding; do not include markdown fences.”

E) Self‑Critique → Improve Loop

End prompts with: “Self‑evaluate using BPQR; list 3 improvements; apply them and present only the improved version.”

Role‑Based Prompt Packs

Sales (B2B)

Prospecting email from a case study

Role: Senior AE for [industry].

Task: Draft a first‑touch email using the case study.

Context: CFO audience; UK English; 120–140 words; value‑led.

Content: <<Paste 5–7 bullet points from case study>>

Constraints: No claims without numbers; add one relevant metric.

Output: Subject + body + CTA; reading age ≤8.

Evaluation: Truthful, concise, specific to the case study.

Marketing

Repurpose webinar → blog + social

Role: Content strategist.

Task: Turn transcript into a 900‑word blog and 5 LinkedIn posts.

Context: Brand voice “Warm Intelligence”; avoid hype.

Content: <>

Constraints: Attribute quotes; British spelling; include 3 sub‑headings.

Output: Blog (markdown) + 5 posts (≤280 chars each).

Evaluation: Originality; quote accuracy; SEO‑friendly headings.

HR / People

Job description normalisation

Role: HRBP.

Task: Convert JD to the standard template.

Context: Inclusive language; UK employment norms.

Content: <>

Constraints: Remove biased phrasing; flag unrealistic requirements.

Output: Template sections + risk flags.

Evaluation: Bias reduced; role clarity improved.

Finance

Vendor comparison

Role: Procurement analyst.

Task: Compare 3 vendor proposals.

Context: Mid‑market UK company; 2‑year TCO; risk‑based.

Content: <>

Constraints: Use only provided documents; show a comparison table.

Output: Table + 150‑word recommendation.

Evaluation: Numbers correct; assumptions stated.

Product / Engineering

Requirements to user stories

Role: Product manager.

Task: Turn requirements into INVEST user stories.

Context: Web app; accessibility and security non‑functional reqs.

Content: <>

Constraints: Add acceptance criteria and edge cases.

Output: User stories in a table + risk list.

Evaluation: Measurable ACs; risk coverage.

Safety, Privacy & Compliance Prompts

Add these lines to prompts where applicable:

“Exclude personal data (PII) unless explicitly provided.”

“If unsure, ask for clarification before proceeding.”

“Cite file names and line/page numbers for all facts.”

“If content could breach policy or law, halt and explain the risk.”

Red‑flag checks (ask the model to run):

Does the output contain PII or confidential data?

Are any claims unverifiable from the provided sources?

Is there ambiguous or discriminatory language?

Measuring ROI from Prompting

Track before/after on:

Time saved per task (minutes).

Quality score via BPQR.

Error rate (rework/defects).

Adoption (active users, prompts reused).

Simple pilot target: 3 use‑cases × 10 users × 4 weeks → publish a one‑page impact report.

Next Steps?

Ready to scale prompting beyond ad‑hoc experiments?

Book a 60‑minute Prompt Engineering Accelerator

Map 3–5 high‑impact use‑cases per function

Turn them into reviewed, versioned prompts with BPQR

Leave with a rollout checklist, templates, and success metrics

FAQ

1) What’s the minimum structure every prompt should have?

Role, Task, Context, Content, Constraints, Output, Evaluation (R‑TCC‑COE). Use the universal template in the appendix.

2) How do we reduce hallucinations?

Attach source data, forbid external browsing when not needed, demand citations/page or row refs, and require a self‑critique step before final output.

3) How do we measure quality consistently?

Use the BPQR rubric (0–2 each for Accuracy, Relevance, Clarity, Structure, Constraints, Verifiability, Safety). Target ≥8/10 and promote only “gold” prompts.

4) When should we fine‑tune vs improve prompts?

Fine‑tune when you have consistent, high‑quality examples and the same task repeats at scale. Otherwise, prefer prompt and workflow improvements plus few‑shot examples.

5) Is this safe with ChatGPT for Business?

Yes—pair prompts with appropriate data controls, avoid PII unless necessary, and use workspace policies; Business excludes training on your data by default.

Generation

Digital

UK Office

Generation Digital Ltd

33 Queen St,

London

EC4R 1AP

United Kingdom

Canada Office

Generation Digital Americas Inc

181 Bay St., Suite 1800

Toronto, ON, M5J 2T9

Canada

USA Office

Generation Digital Americas Inc

77 Sands St,

Brooklyn, NY 11201,

United States

EU Office

Generation Digital Software

Elgee Building

Dundalk

A91 X2R3

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company No: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
