Scaling PostgreSQL: OpenAI’s 800M-user Playbook

Jan 23, 2026

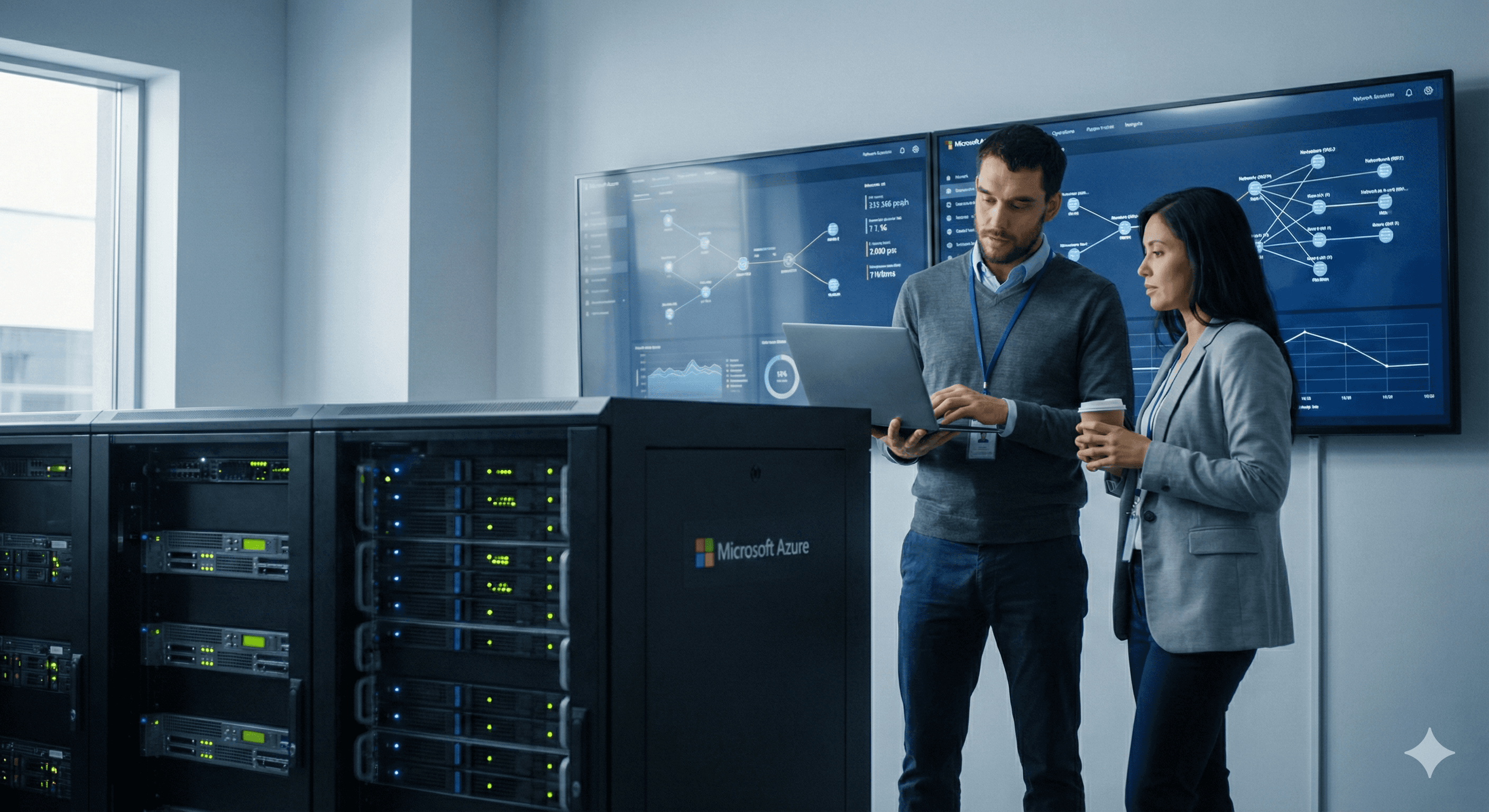

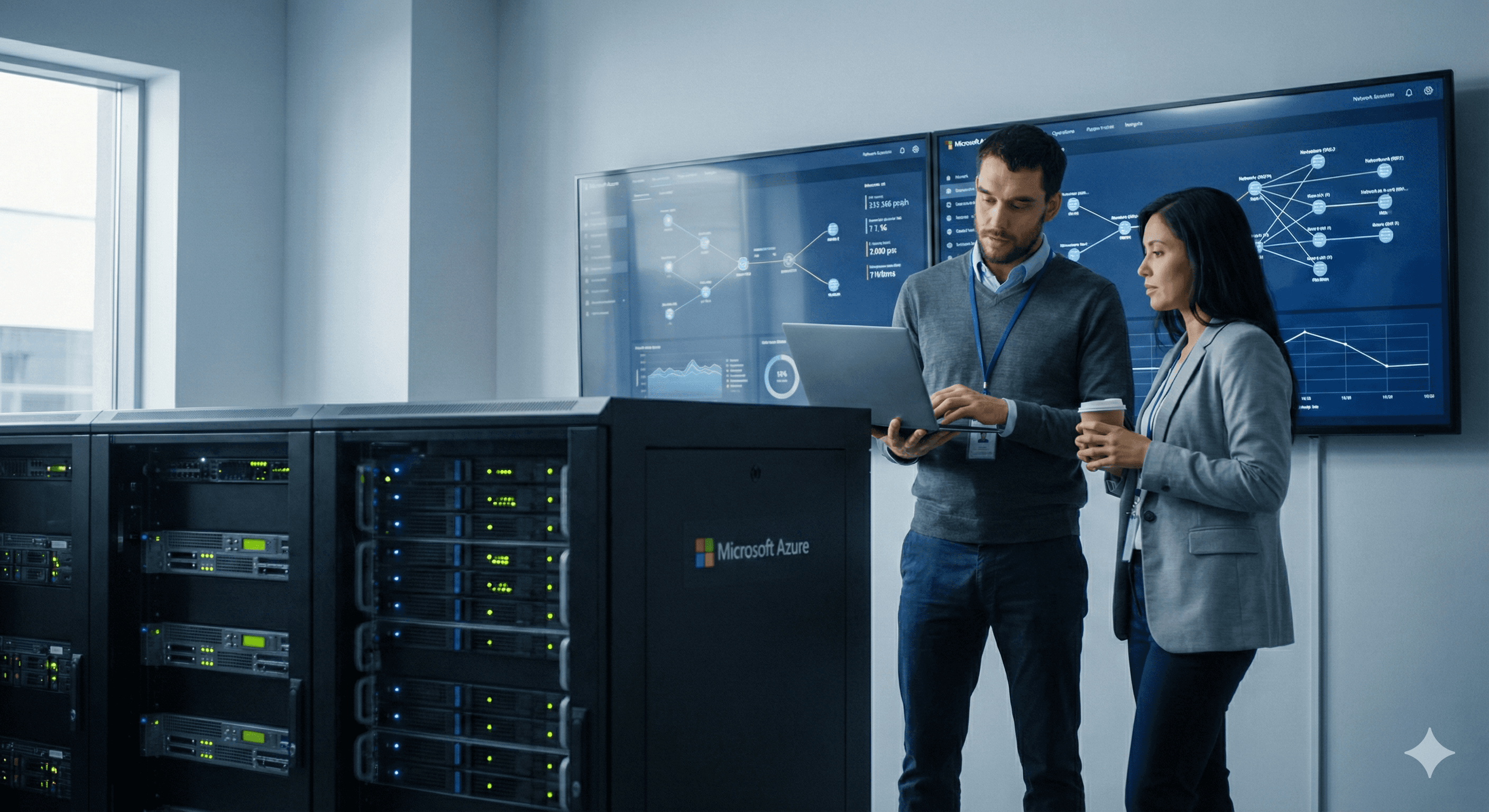

OpenAI scaled PostgreSQL for ChatGPT by keeping a single primary on Azure, offloading reads to ~50 replicas, and hardening the stack with connection pooling, multi-layer rate limiting, query simplification, and workload isolation. The result: millions of QPS, low double-digit millisecond p99 latency, and five-nines availability at global scale.

Why this matters now

ChatGPT serves ~800M weekly users, a scale that forces sharp engineering trade-offs. Instead of jumping to a distributed database, OpenAI showed how far Postgres can go with the right patterns, cloud primitives, and ruthless elimination of write pressure.

The core architecture (surprisingly simple)

Single primary (writer) on Azure Database for PostgreSQL flexible server.

~50 read replicas across regions to keep reads fast and resilient.

Postgres stays unsharded and serves the read-heavy core; shardable, write-heavy workloads moved to Azure Cosmos DB.

Outcomes: millions of QPS, low double-digit ms p99, ~99.999% availability.
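To make the single-writer split concrete, here is a minimal Python sketch of application-side read/write routing. It is an illustration, not OpenAI's implementation: the class, the host names, and the rule of classifying statements by their leading keyword are all assumptions. A production router also has to deal with read-your-writes consistency (for example, pinning a session to the primary right after it writes), which this sketch ignores.

```python
import itertools
import re
from typing import Iterator, Optional, Sequence

# Row-locking clauses need the primary even though the statement starts with SELECT.
_LOCKING_CLAUSE = re.compile(
    r"\bfor\s+(?:no\s+key\s+)?update\b|\bfor\s+(?:key\s+)?share\b", re.IGNORECASE
)


class ReadWriteRouter:
    """Hypothetical router: writes go to the single primary, plain reads to replicas."""

    def __init__(self, primary: str, replicas: Sequence[str]) -> None:
        self.primary = primary
        # Round-robin iterator over replicas; None means every query uses the primary.
        self._replicas: Optional[Iterator[str]] = (
            itertools.cycle(list(replicas)) if replicas else None
        )

    @staticmethod
    def is_plain_read(sql: str) -> bool:
        # Conservative: anything not starting with SELECT (including WITH queries,
        # which may contain writes) is treated as a write and sent to the primary.
        text = sql.lstrip()
        return text[:6].lower() == "select" and not _LOCKING_CLAUSE.search(text)

    def route(self, sql: str) -> str:
        """Return the host that should run this statement."""
        if self._replicas is not None and self.is_plain_read(sql):
            return next(self._replicas)
        return self.primary


if __name__ == "__main__":
    router = ReadWriteRouter(
        "pg-primary.internal", ["pg-replica-1.internal", "pg-replica-2.internal"]
    )
    print(router.route("SELECT * FROM conversations WHERE id = 1"))  # pg-replica-1.internal
    print(router.route("SELECT 1"))  # pg-replica-2.internal
    print(router.route("UPDATE users SET name = 'x' WHERE id = 1"))  # pg-primary.internal
```

Defaulting unknown statements to the primary is the safe failure mode: a misclassified read costs some primary capacity, while a misclassified write sent to a replica would simply fail.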

Why not shard Postgres now?

Sharding legacy application paths is costly and slow. Because ChatGPT is read-heavy, OpenAI preserved a single writer and focused on removing write spikes while scaling reads horizontally. Sharding remains a future option, not a near-term requirement.

Seven patterns you can copy

1) Reduce load on the primary (treat writes as a scarce resource)

Offload every possible read to replicas.

Fix app bugs causing redundant writes; introduce lazy writes to smooth spikes.

Enforce strict rate limits on backfills and background updates.

Move shardable write-heavy domains to a sharded system (e.g., Cosmos DB).
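Lazy writes can be as simple as coalescing updates in memory and flushing only the latest value per key once a short window has passed. The Python sketch below is hypothetical (the class, the key format, and the one-second window are assumptions): repeated updates to the same key inside the window collapse into a single write, which trims redundant writes and flattens spikes, at the cost of a short window in which buffered values could be lost.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional


class WriteCoalescer:
    """Buffers per-key updates and flushes only the latest value for each key.

    Hypothetical, single-threaded sketch: anything still buffered is lost if
    the process dies before the next flush.
    """

    def __init__(
        self,
        flush: Callable[[Dict[Hashable, Any]], None],
        max_delay: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._flush = flush          # e.g. one batched UPDATE against the primary
        self._max_delay = max_delay  # seconds a value may wait before being written
        self._clock = clock
        self._pending: Dict[Hashable, Any] = {}
        self._oldest: Optional[float] = None  # when the current batch started

    def write(self, key: Hashable, value: Any) -> None:
        # A later write to the same key simply replaces the buffered value.
        self._pending[key] = value
        if self._oldest is None:
            self._oldest = self._clock()
        self.maybe_flush()

    def maybe_flush(self, force: bool = False) -> None:
        if not self._pending or self._oldest is None:
            return
        if force or self._clock() - self._oldest >= self._max_delay:
            batch, self._pending, self._oldest = self._pending, {}, None
            self._flush(batch)
```

Because a flush is only checked on `write()`, a real service would also call `maybe_flush()` from a timer so a quiet key is not stuck in the buffer, and `maybe_flush(force=True)` on shutdown.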

2) Optimise queries (escape ORM foot-guns)

Identify “killer” queries (e.g., 12-table joins) that can sink the cluster.

Break complex joins into simpler app-level logic; review generated SQL.

Set idle_in_transaction_session_timeout so sessions left idle inside an open transaction are terminated instead of holding locks and preventing autovacuum from cleaning up dead rows.
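Breaking a wide join apart usually means one filtered query, one batched primary-key lookup, and a hash join in application memory. The Python sketch below uses the standard-library `sqlite3` module as a stand-in for Postgres so it runs anywhere; the schema and function names are hypothetical. The shape carries over to a real driver: two short, index-friendly queries instead of one multi-table join.

```python
import sqlite3
from typing import List, Optional, Tuple


def create_demo_schema(conn: sqlite3.Connection) -> None:
    """Tiny hypothetical schema standing in for real Postgres tables."""
    conn.executescript(
        """
        CREATE TABLE users  (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, total REAL);
        INSERT INTO users  VALUES (1, 'ada'), (2, 'lin');
        INSERT INTO orders VALUES (10, 1, 9.5), (11, 2, 3.0), (12, 1, 4.25);
        """
    )


def orders_with_user_names(
    conn: sqlite3.Connection, min_total: float
) -> List[Tuple[int, Optional[str], float]]:
    # Query 1: a simple filter on one table, easy to index and to reason about.
    orders = conn.execute(
        "SELECT id, user_id, total FROM orders WHERE total >= ? ORDER BY id",
        (min_total,),
    ).fetchall()
    if not orders:
        return []
    # Query 2: one batched primary-key lookup (not one query per order).
    user_ids = sorted({user_id for _, user_id, _ in orders})
    placeholders = ",".join("?" * len(user_ids))
    names = dict(
        conn.execute(
            f"SELECT id, name FROM users WHERE id IN ({placeholders})", user_ids
        ).fetchall()
    )
    # The join itself happens in application memory via a dict lookup (a hash join).
    return [(order_id, names.get(user_id), total) for order_id, user_id, total in orders]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_demo_schema(conn)
    print(orders_with_user_names(conn, 4.0))  # [(10, 'ada', 9.5), (12, 'ada', 4.25)]
```

With a Postgres driver such as psycopg, `WHERE id = ANY(%s)` with a list parameter avoids building the placeholder string by hand.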

3) Isolate noisy neighbours

Split traffic into high- and low-priority tiers on dedicated instances.

Isolate products/services to prevent a launch in one area degrading another.
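Routing by product and priority tier can be a small lookup in the data-access layer. The Python sketch below is hypothetical: the product names, host names, and the choice to refuse (rather than fall back to a shared pool) when a high-priority instance is missing are assumptions made for illustration.

```python
from enum import Enum
from typing import Dict, Tuple


class Tier(Enum):
    HIGH = "high"  # interactive, user-facing requests
    LOW = "low"    # batch jobs, backfills, analytics


# Hypothetical topology: each (product, tier) pair gets its own instance,
# so a launch spike in one product cannot exhaust another's capacity.
INSTANCES: Dict[Tuple[str, Tier], str] = {
    ("chat", Tier.HIGH): "pg-chat-high.internal",
    ("chat", Tier.LOW): "pg-chat-low.internal",
    ("images", Tier.HIGH): "pg-images-high.internal",
}
SHARED_LOW_PRIORITY = "pg-shared-low.internal"


def instance_for(product: str, tier: Tier) -> str:
    """Pick the dedicated instance for a product and priority tier."""
    dedicated = INSTANCES.get((product, tier))
    if dedicated is not None:
        return dedicated
    if tier is Tier.LOW:
        # Low-priority work without its own instance shares a pool that
        # never overlaps with any high-priority instance.
        return SHARED_LOW_PRIORITY
    # Refuse rather than silently mixing high-priority traffic into a shared pool.
    raise LookupError(f"no dedicated high-priority instance for {product!r}")
```

Failing loudly for an unmapped high-priority product surfaces a missing capacity decision at deploy time instead of during a traffic spike.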

4) Pool connections like your uptime depends on it

Azure Postgres caps connections per instance (e.g., ~5,000). Use a pooler to prevent connection storms and piles of idle connections.

Tune max connections, queue limits, and timeouts; block abusive clients early.
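Poolers such as PgBouncer enforce these limits for you. The Python sketch below only illustrates the admission-control idea behind them (the class and its parameters are hypothetical, not PgBouncer settings): a hard cap on connections in use, a bounded wait queue, and a wait timeout, so excess clients are rejected quickly instead of piling up.

```python
import threading
from contextlib import contextmanager
from typing import Iterator


class ConnectionGate:
    """Hypothetical admission control: connection cap, bounded queue, timeout."""

    def __init__(self, max_connections: int, max_waiting: int, acquire_timeout: float) -> None:
        self.max_connections = max_connections  # hard cap on connections in use
        self.max_waiting = max_waiting          # callers allowed to queue for a slot
        self.acquire_timeout = acquire_timeout  # seconds a queued caller may wait
        self._in_use = 0
        self._waiting = 0
        self._cond = threading.Condition()

    def acquire(self) -> bool:
        """Take a slot; return False if the queue is full or the wait times out."""
        with self._cond:
            if self._in_use < self.max_connections:
                self._in_use += 1
                return True
            if self._waiting >= self.max_waiting:
                return False  # queue full: reject now rather than pile up
            self._waiting += 1
            try:
                got_slot = self._cond.wait_for(
                    lambda: self._in_use < self.max_connections,
                    timeout=self.acquire_timeout,
                )
                if got_slot:
                    self._in_use += 1
                return got_slot
            finally:
                self._waiting -= 1

    def release(self) -> None:
        with self._cond:
            self._in_use -= 1
            self._cond.notify()  # wake one queued caller, if any

    @contextmanager
    def slot(self) -> Iterator[None]:
        if not self.acquire():
            raise TimeoutError("database busy: connection slot not available")
        try:
            yield
        finally:
            self.release()
```

The gate is not FIFO-fair (a new arrival can take a freed slot before a woken waiter), which is acceptable for load shedding but worth knowing if strict ordering matters.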

5) Rate limit at multiple layers (and avoid retry storms)

Apply controls at application, pooler, proxy, and query layers.

Avoid aggressive retry intervals (back off exponentially instead); block known-bad queries by their normalized query digest.
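Both ideas fit in a few lines of Python. This is a hedged sketch: PostgreSQL's `pg_stat_statements` extension already assigns a `queryid` to each normalized statement, and the regex-based `query_digest` below is only a rough client-side stand-in; the blocklist class and the backoff defaults are hypothetical. The backoff uses "full jitter", so retries from many clients spread out instead of arriving in synchronized waves.

```python
import hashlib
import random
import re
from typing import Callable, Iterator, Set

_STRING_LITERAL = re.compile(r"'(?:[^']|'')*'")
_NUMBER_LITERAL = re.compile(r"\b\d+(?:\.\d+)?\b")
_WHITESPACE = re.compile(r"\s+")


def query_digest(sql: str) -> str:
    """Fingerprint a query by stripping literals, so variants share one digest."""
    normalized = _STRING_LITERAL.sub("?", sql)
    normalized = _NUMBER_LITERAL.sub("?", normalized)
    normalized = _WHITESPACE.sub(" ", normalized).strip().lower()
    return hashlib.sha256(normalized.encode()).hexdigest()[:16]


class DigestBlocklist:
    """Hypothetical kill switch for query shapes known to hurt the cluster."""

    def __init__(self) -> None:
        self._blocked: Set[str] = set()

    def block(self, sql: str) -> None:
        self._blocked.add(query_digest(sql))

    def check(self, sql: str) -> None:
        if query_digest(sql) in self._blocked:
            raise PermissionError("query shape is blocked; fix it before re-enabling")


def backoff_delays(
    base: float = 0.1,
    cap: float = 5.0,
    attempts: int = 5,
    rand: Callable[[], float] = random.random,
) -> Iterator[float]:
    """Exponential backoff with full jitter: delay n is U(0, min(cap, base * 2**n))."""
    for attempt in range(attempts):
        yield rand() * min(cap, base * 2 ** attempt)
```

Blocking by digest rather than by exact text means one rule catches every parameter variant of a runaway query.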

6) Manage schema with a blast-radius mindset

No full-table rewrites in production migrations; 5-second DDL timeouts.

Create and drop indexes only with CONCURRENTLY (CREATE INDEX CONCURRENTLY, DROP INDEX CONCURRENTLY).

New tables for new features? Put them in sharded systems, not the primary.
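A pre-merge lint can enforce these rules mechanically. The Python sketch below is hypothetical and regex-based, so it will miss cases (for instance, other ALTER TABLE forms that rewrite a table). Enforcing the 5-second limit through `lock_timeout` is an assumption about how to implement it; it is the safer choice because a 5-second `statement_timeout` would also cancel long `CREATE INDEX CONCURRENTLY` builds.

```python
import re
from typing import List

# Hypothetical policy rules, each a (compiled pattern, message) pair.
RULES = [
    (re.compile(r"\bcreate\s+(?:unique\s+)?index\b(?!\s+concurrently\b)", re.I),
     "CREATE INDEX must use CONCURRENTLY"),
    (re.compile(r"\bdrop\s+index\b(?!\s+concurrently\b)", re.I),
     "DROP INDEX must use CONCURRENTLY"),
    (re.compile(r"\balter\s+column\s+\S+\s+(?:set\s+data\s+)?type\b", re.I),
     "changing a column type can rewrite the whole table"),
    (re.compile(r"\bcreate\s+table\b", re.I),
     "new tables belong in the sharded store, not on the primary"),
]
LOCK_TIMEOUT = re.compile(r"\bset\s+lock_timeout\b", re.I)


def lint_migration(sql: str) -> List[str]:
    """Return policy violations found in a migration script (empty list means OK)."""
    problems = [message for pattern, message in RULES if pattern.search(sql)]
    if not LOCK_TIMEOUT.search(sql):
        problems.append("migration must SET lock_timeout (e.g. '5s') before any DDL")
    return problems


if __name__ == "__main__":
    print(lint_migration("CREATE INDEX idx_users_email ON users (email);"))
```

Running the lint in CI keeps the guardrail independent of who writes or reviews the migration.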

7) Plan replica growth with cascading replication

Having the primary stream WAL directly to dozens of replicas won’t scale forever: every replica adds another WAL sender and more network egress on the writer.

Test cascading replication so intermediates relay WAL downstream, enabling >100 replicas without overloading the writer (with added failover complexity).
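A back-of-the-envelope sketch of the trade-off: in a two-tier cascade the primary only feeds the relays, so its WAL-sender count grows with the number of relays rather than the number of replicas. The Python below is hypothetical planning arithmetic, not an Azure or PostgreSQL API, and the fan-out of 12 is an arbitrary example. In PostgreSQL itself, a hot standby with `max_wal_senders` configured can act as a cascading standby, and downstream standbys point their `primary_conninfo` at it instead of at the primary.

```python
import math
from typing import Dict, List


def relays_needed(leaf_replicas: int, max_fanout: int) -> int:
    """Intermediate replicas required if each may stream WAL to max_fanout leaves."""
    return math.ceil(leaf_replicas / max_fanout)


def plan_cascade(leaves: List[str], relays: List[str]) -> Dict[str, List[str]]:
    """Assign each leaf replica to an upstream relay, spreading them round-robin.

    The primary then streams WAL only to the relays; each leaf's
    primary_conninfo would point at its relay instead of the primary.
    """
    if not relays:
        raise ValueError("need at least one relay")
    topology: Dict[str, List[str]] = {relay: [] for relay in relays}
    for i, leaf in enumerate(leaves):
        topology[relays[i % len(relays)]].append(leaf)
    return topology


leaves = [f"replica-{n:03d}" for n in range(120)]
relays = [f"relay-{n}" for n in range(relays_needed(len(leaves), max_fanout=12))]
plan = plan_cascade(leaves, relays)
print(len(relays), "WAL senders on the primary instead of", len(leaves) + len(relays))
```

The added failover complexity shows up directly in this map: if a relay fails, every leaf assigned to it stops receiving WAL until it is re-pointed at another relay or at the primary.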

Results you can aim for

With these tactics, OpenAI reports millions of QPS, near-zero replication lag, geo-distributed low-latency reads, and five-nines availability, all while running a single writer. They report one SEV-0 Postgres incident in the past 12 months, triggered by a viral product surge, from which the system recovered.

Practical rollout plan (90 days)

Week 1–2: Baseline

Capture per-endpoint QPS, CPU, connections, and slow-query digests; map write-heavy domains.

Week 3–4: Pooling + timeouts

Deploy PgBouncer-style pooling; set sane defaults for connect, statement, and idle timeouts; cap max connections.

Week 5–6: Rate limiting

Add app-level and proxy-level rate limits; implement exponential backoff and circuit breakers; introduce digest-level blocks for the worst offenders.

Week 7–8: Query surgery

Eliminate multi-way joins; push join logic into the app; add targeted covering indexes; enforce idle_in_transaction_session_timeout.

Week 9–10: Workload isolation

Split low-priority/background traffic from interactive paths; scale read replicas regionally.

Week 11–12: Backfills + schema guardrails

Throttle backfills; enforce 5-second DDL timeouts; allow only concurrent index changes; add no new tables on the primary.

Parallel track: evaluate cascading replication in staging for future growth.
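The circuit breaker from weeks 5–6 complements exponential backoff: once the database is clearly struggling, callers stop retrying for a while and fail fast instead. Below is a minimal single-threaded Python sketch; the class names and thresholds are hypothetical. After `failure_threshold` consecutive failures the breaker opens; after `reset_after` seconds it lets a trial call through (half-open) and closes again if that call succeeds.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling the database while the breaker is open."""


class CircuitBreaker:
    """Closed, then open after repeated failures, then a half-open trial after a cool-down.

    Single-threaded sketch; a concurrent version needs a lock and should let
    only one trial call through while half-open.
    """

    def __init__(
        self,
        failure_threshold: int = 5,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open: failing fast instead of hitting the database")
            # Cool-down elapsed: fall through and treat this call as the half-open trial.
        try:
            result = fn()
        except Exception:
            self._failures += 1
            # A failed trial re-opens immediately; otherwise open at the threshold.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the breaker and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Wrapping each database call as `breaker.call(lambda: run_query(...))` (where `run_query` stands for your own data-access function) keeps the policy in one place rather than scattered across retry loops.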

FAQs

What database does ChatGPT use?

OpenAI runs a single-primary PostgreSQL for core workloads with ~50 read replicas and augments write-heavy domains with sharded systems like Cosmos DB.

How big is ChatGPT’s user base today?

Recent reporting places ChatGPT at ~800 million weekly active users by early 2026, with OpenAI’s own research referencing 700M+ in 2025.

Why not switch to a distributed SQL database?

Sharding legacy paths is costly; ChatGPT’s workloads are read-heavy, so OpenAI first maximised Postgres with replicas, pooling, and rate limits—keeping the door open to sharding later.

What settings matter most?

Start with pooler caps, connection/statement/idle timeouts, idle_in_transaction_session_timeout, and disciplined DDL controls.

Next Steps

Want this architecture for your product? Contact Generation Digital for a readiness review, rollout plan, and hands-on implementation.

Generation

Digital

UK Office

Generation Digital Ltd

33 Queen St,

London

EC4R 1AP

United Kingdom

Canada Office

Generation Digital Americas Inc

181 Bay St., Suite 1800

Toronto, ON, M5J 2T9

Canada

USA Office

Generation Digital Americas Inc

77 Sands St,

Brooklyn, NY 11201,

United States

EU Office

Generation Digital Software

Elgee Building

Dundalk

A91 X2R3

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company No: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
