GPT‑5.3‑Codex‑Spark: Real-Time Coding in Codex (2026)


Artificial Intelligence

OpenAI

Feb 2, 2026


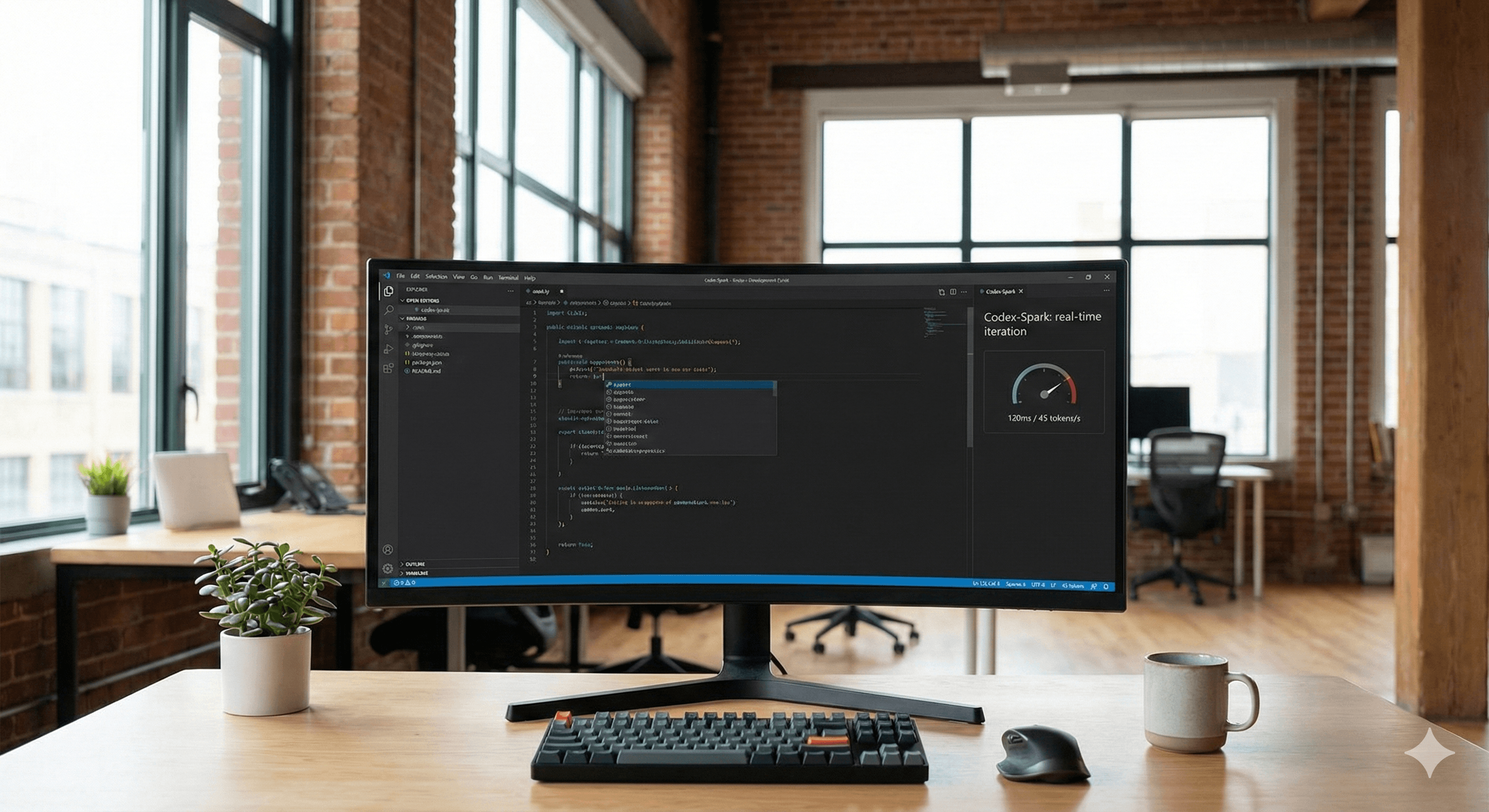

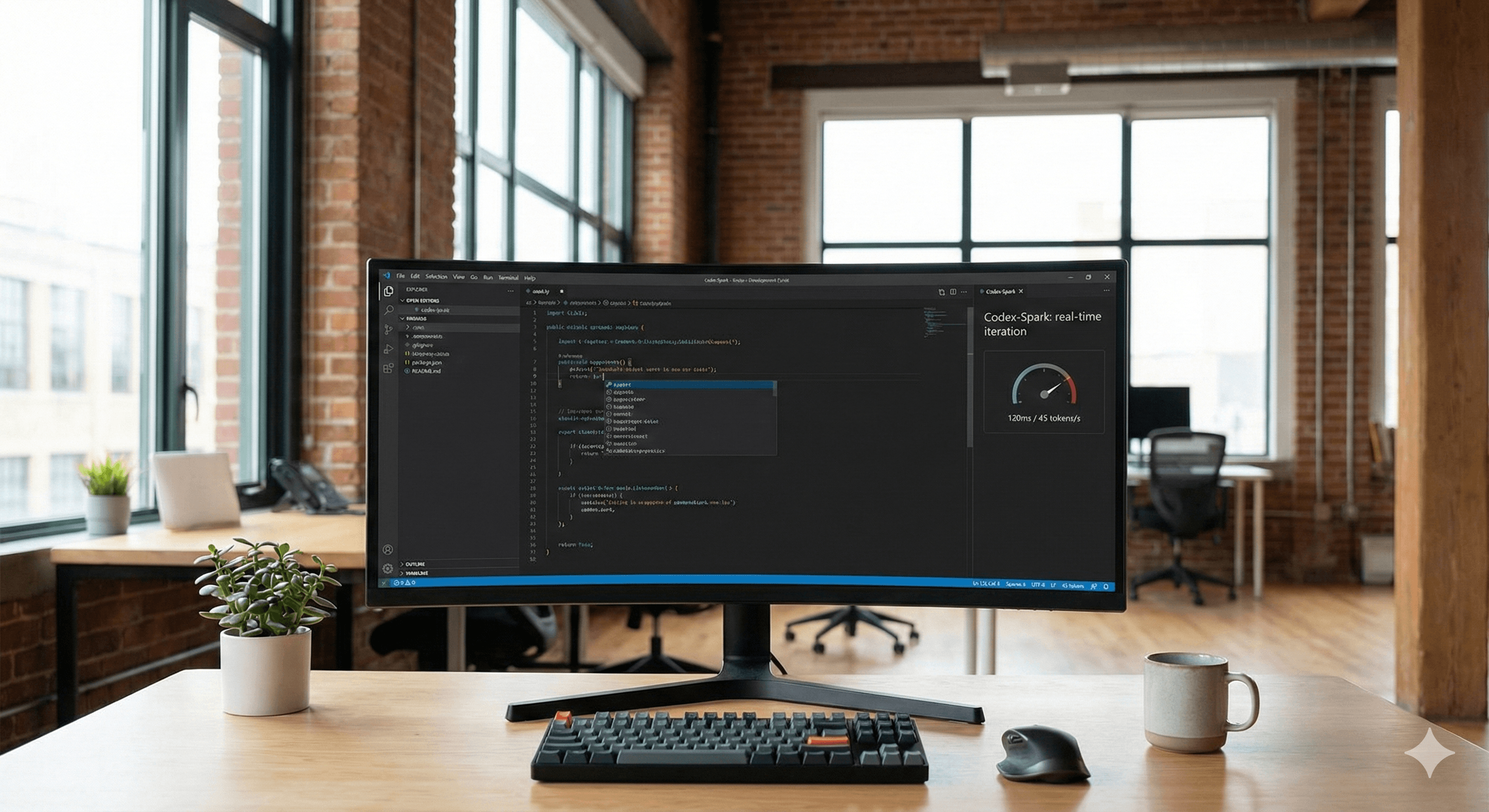

GPT‑5.3‑Codex‑Spark is OpenAI’s research-preview model built for real-time coding in Codex. Designed for near-instant iteration, it generates code at very high speed (OpenAI reports more than 1,000 tokens per second) and supports longer-context workflows, helping developers iterate faster in the Codex app, CLI, and IDE extension.

“AI coding assistants” are everywhere — but most still feel like a chat interface bolted onto development work. OpenAI’s GPT‑5.3‑Codex‑Spark is different: it’s designed for real-time coding iteration, where responsiveness matters as much as capability.

In February 2026, OpenAI released Codex‑Spark as a research preview model aimed at making AI-assisted coding feel near-instant, with OpenAI describing performance of more than 1,000 tokens per second when served on low-latency hardware.
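To put the headline figure in perspective, this short Python sketch converts a decode rate into wall-clock time. It assumes constant throughput and ignores time-to-first-token and network overhead; the 2,000-token patch size and the 100 tokens/s comparison rate are illustrative assumptions, not measured or published figures.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a constant decode rate (ignores first-token latency)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    PATCH_TOKENS = 2_000  # hypothetical response size
    # 1,000 tok/s is OpenAI's reported floor; 100 tok/s is an illustrative comparison only.
    for rate in (1_000.0, 100.0):
        print(f"{rate:>7,.0f} tok/s -> {generation_seconds(PATCH_TOKENS, rate):.1f} s")
```

At a constant 1,000 tokens per second, a 2,000-token response takes about 2 seconds; at the illustrative 100 tokens per second it takes about 20. Real-world latency also depends on prompt processing and any tool calls in the loop.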

Key benefits at a glance

Near-instant generation for tighter feedback loops

Codex‑Spark is optimised to keep pace with live coding: quick edits, rapid retries, and fast back-and-forth when you’re debugging or refactoring. OpenAI positions it as its first model designed specifically for real-time coding.

Longer-context coding workflows

If you’re working across large files, multiple modules, or complex diffs, longer context matters. OpenAI’s research listing for Codex‑Spark cites a 128k context window, which supports more “whole-project” style iteration than typical chat-based coding help.
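As a rough way to judge whether a set of source files might fit in that window, here is a minimal Python sketch. It uses a 4-characters-per-token heuristic instead of a real tokenizer, treats “128k” as 128,000 tokens, and reserves a hypothetical 16,000 tokens for the model’s reply; all three are assumptions, so the result is an estimate only.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4       # assumption: rough heuristic, not a real tokenizer
CONTEXT_WINDOW = 128_000  # "128k" from OpenAI's listing, taken here as 128,000 tokens
OUTPUT_RESERVE = 16_000   # hypothetical headroom left for the model's reply


def estimate_tokens(text: str) -> int:
    """Approximate token count using a characters-per-token heuristic."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(texts: list[str]) -> tuple[int, bool]:
    """Return (estimated prompt tokens, whether they fit with output headroom)."""
    total = sum(estimate_tokens(t) for t in texts)
    return total, total + OUTPUT_RESERVE <= CONTEXT_WINDOW


def load_sources(root: str, pattern: str = "*.py") -> list[str]:
    """Hypothetical helper: read every file under root that matches pattern."""
    return [
        p.read_text(encoding="utf-8", errors="replace")
        for p in Path(root).rglob(pattern)
        if p.is_file()
    ]
```

For example, `fits_in_context(load_sources("src"))` returns an estimated token count and a yes/no answer. For precise counts, swap the heuristic for the tokenizer that matches the model.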

Research preview access for Pro users

Codex‑Spark is currently offered as a research preview and is available to ChatGPT Pro users through Codex surfaces, including the app and CLI.

Updated as of 13 February 2026: Codex‑Spark is listed by OpenAI as a research preview model for real-time coding.

How it works (what’s actually new)

Codex‑Spark is described as a smaller version of GPT‑5.3‑Codex, tuned for speed so you can iterate quickly during active development rather than waiting for long generations to complete. OpenAI also frames it as the first milestone in its partnership with Cerebras, whose focus is ultra-low-latency serving for fast inference.

The practical impact: the model is built to support new interaction patterns, behaving more like a responsive pair programmer than a slow “generate-and-paste” tool.

Practical steps: how to try GPT‑5.3‑Codex‑Spark

Confirm access (ChatGPT Pro): Codex‑Spark is listed among ChatGPT Pro benefits as research preview access.

Use it where you code: OpenAI lists Codex‑Spark availability across Codex tooling, including the Codex app, CLI, and IDE extension.

Switch the model in your workflow: OpenAI’s Codex docs show model selection for the CLI (e.g., using gpt-5.3-codex-spark) and describe using the faster model for responsive tasks.
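A minimal Python sketch of scripting that model switch follows. It assumes the installed Codex CLI provides a non-interactive `codex exec` subcommand and a `--model` flag; flag names can change between releases, so confirm them with `codex --help` on your version. `build_codex_command` and `run_codex` are hypothetical helper names.

```python
import shutil
import subprocess

SPARK_MODEL = "gpt-5.3-codex-spark"  # model ID as given in OpenAI's Codex docs


def build_codex_command(prompt: str, model: str = SPARK_MODEL) -> list[str]:
    """Build argv for a one-shot Codex CLI run.

    Assumption: the CLI exposes `codex exec` and a `--model` flag.
    """
    return ["codex", "exec", "--model", model, prompt]


def run_codex(prompt: str, model: str = SPARK_MODEL) -> int:
    """Run the command if `codex` is on PATH and return its exit code."""
    if shutil.which("codex") is None:
        raise FileNotFoundError("codex CLI not found on PATH")
    completed = subprocess.run(build_codex_command(prompt, model), check=False)
    return completed.returncode
```

Keeping command construction separate from execution makes it easy to inspect the exact arguments before anything runs, and to fall back to a different model ID by changing a single parameter.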

Where Codex‑Spark fits best

Codex‑Spark is most compelling when latency is the bottleneck:

tight edit–run–fix loops

fast refactors across a codebase

rapid testing of small implementation changes

“pair programming” style collaboration in an IDE

For deeper multi-step engineering work, teams may still prefer heavier models — but Spark is built for the moments where speed changes how you work.
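One way a team might encode that guidance is a simple routing rule. The Python sketch below is hypothetical: the `Task` fields, the three-step threshold, and the choice of `gpt-5.3-codex` as the heavier fallback are illustrative assumptions, not OpenAI recommendations.

```python
from dataclasses import dataclass

FAST_MODEL = "gpt-5.3-codex-spark"  # real-time iteration (research preview)
DEEP_MODEL = "gpt-5.3-codex"        # assumption: heavier fallback for multi-step work


@dataclass
class Task:
    description: str
    interactive: bool  # is a developer waiting on the result right now?
    est_steps: int     # hypothetical: rough count of distinct sub-steps


def pick_model(task: Task, max_fast_steps: int = 3) -> str:
    """Toy routing rule: prefer the fast model for short, interactive tasks."""
    if task.interactive and task.est_steps <= max_fast_steps:
        return FAST_MODEL
    return DEEP_MODEL
```

In practice the threshold would come from a team’s own experience of where Spark’s speed helps and where a heavier model does better.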

Summary

GPT‑5.3‑Codex‑Spark is OpenAI’s first model designed for real-time coding, offered as a research preview for ChatGPT Pro users. If your goal is faster iteration — not just occasional code generation — Spark is worth testing in your daily workflow.

Next steps: If you’re exploring how tools like Codex fit into your engineering workflow (governance, enablement, best-practice prompts, and safe rollout), contact Generation Digital for guidance.

FAQs

What is GPT‑5.3‑Codex‑Spark?

GPT‑5.3‑Codex‑Spark is OpenAI’s research-preview model designed for real-time coding in Codex, optimised for near-instant iteration.

How is Codex‑Spark different from GPT‑5.3‑Codex?

OpenAI describes Codex‑Spark as a smaller version of GPT‑5.3‑Codex tuned for speed and responsiveness, intended to support new real-time coding interaction patterns.

How fast is Codex‑Spark?

OpenAI states it can deliver more than 1,000 tokens per second when served on ultra-low-latency hardware. Some OpenAI materials and press coverage also cite “15x faster generation”; because that is a relative figure, the 1,000+ tokens-per-second number is the more consistent official metric.

Who can access GPT‑5.3‑Codex‑Spark?

OpenAI lists Codex‑Spark as a research preview available to ChatGPT Pro users (via Codex tooling such as the app and CLI).

Where can I use it?

OpenAI documentation references Codex‑Spark usage in the Codex app, Codex CLI, and an IDE extension.



Generation Digital

Business Number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
