Unlocking Your Organisational Knowledge with Custom AI

Glean

Dec 1, 2025

Not sure where to start with AI?

Assess readiness, risk, and priorities in under an hour.

➔ Download Our Free AI Readiness Pack

Onboard your AI, not just your people

Your best answers aren’t on the public internet. They’re hiding in SharePoint folders, Confluence pages, Git repos, and the heads of people who are in back‑to‑back meetings. Meanwhile, your AI assistant keeps giving confident half‑truths. The fix isn’t to buy a bigger model—it’s to give your assistant your organisational memory.

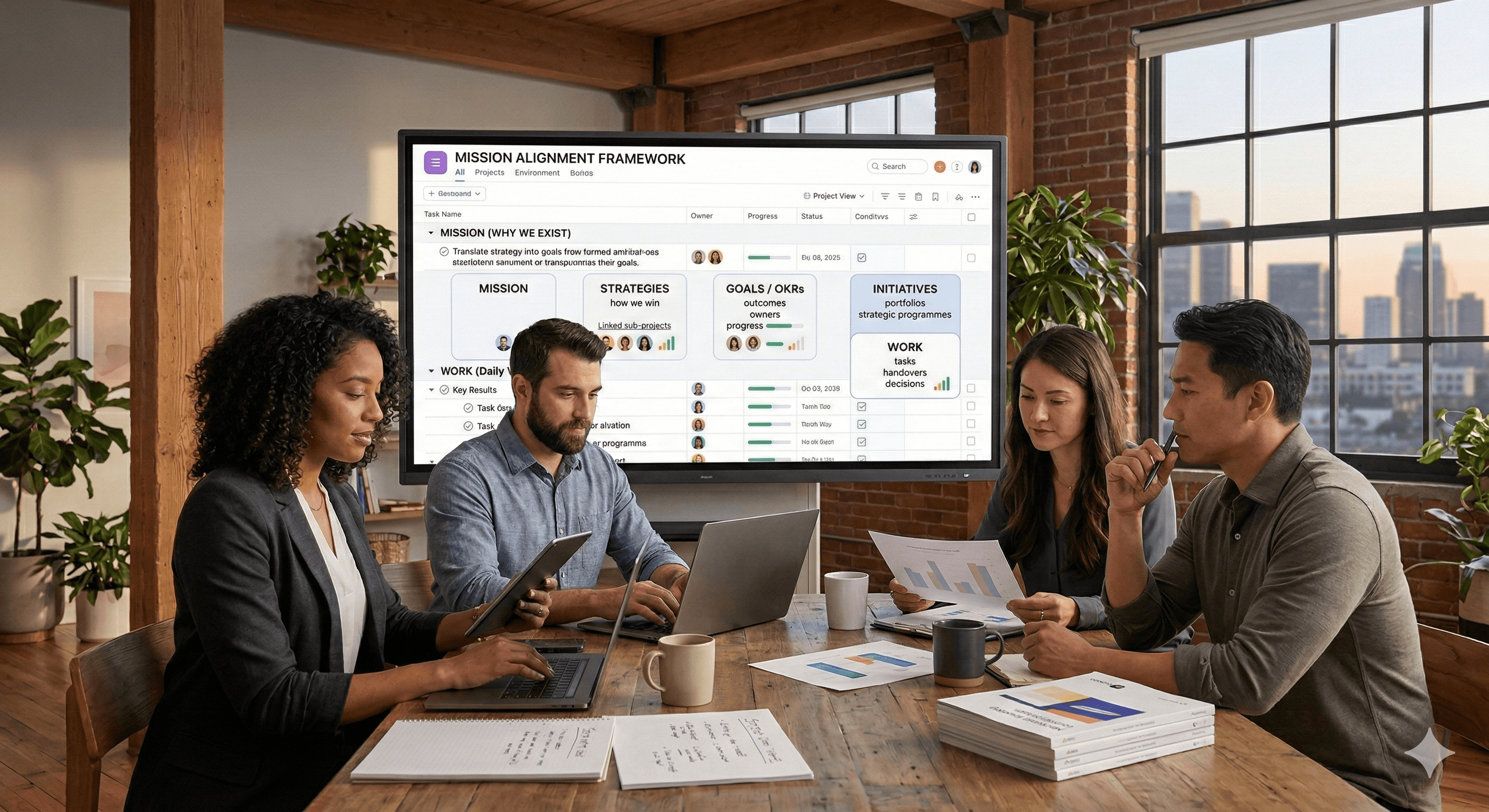

Big idea: Treat AI like a new colleague who needs onboarding—sources, standards, and guardrails—not like another search box.

The context gap: why generic AI falls short at work

Let’s be honest: the biggest blocker to workplace AI success is missing context. People waste hours re‑asking the same questions because the assistant can’t see what they can see. The result? Change fatigue. Scepticism. A sense that AI is clever but not trustworthy.

Here’s what’s changed in 2025:

Company knowledge grounding means your assistant can answer with citations to your files (e.g., policy PDFs, client decks, Jira tickets), respecting existing permissions.

Persistent context (projects, glossaries, coding standards) lets teams keep shared ground rules in every conversation—no more repeating yourself.

Data controls (residency, retention, audit logs) make legal and security teams comfortable from day one.

Imagine a new starter asking, “What’s our policy on client data in test environments?” and your assistant replies with a two‑sentence answer, a confidence note, and links to the exact paragraphs in your infosec policy and SOP. That’s the shift—from guessing to grounded.
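To make the grounding idea concrete, here is a minimal Python sketch of permission‑aware retrieval with citations. It assumes each indexed passage carries the access groups mirrored from its source system, and it uses naive word overlap in place of a real search or embedding index. The `Chunk` type, the sample documents and the intranet URLs are all hypothetical. A production assistant would hand the retrieved passages to a language model to draft the answer, but the shape is the same: filter by permission first, answer only from what survives, and attach a link to every source.

```python
from dataclasses import dataclass

PUNCT = ".,?!:;\"'“”"


@dataclass(frozen=True)
class Chunk:
    doc_title: str
    url: str                        # deep link to the exact paragraph (hypothetical)
    text: str
    allowed_groups: frozenset[str]  # mirrored from the source system's permissions


def tokenize(text: str) -> set[str]:
    # Lower-cased words with surrounding punctuation removed.
    words = (w.strip(PUNCT).lower() for w in text.split())
    return {w for w in words if w}


def retrieve(question: str, chunks: list[Chunk], user_groups: set[str], k: int = 2) -> list[Chunk]:
    # 1. Permission filter: keep only chunks the user could already open.
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    # 2. Rank by naive word overlap (a stand-in for a real search index).
    q = tokenize(question)
    scored = [(len(q & tokenize(c.text)), c) for c in visible]
    scored = [(s, c) for s, c in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


def grounded_answer(question: str, chunks: list[Chunk], user_groups: set[str]) -> dict:
    hits = retrieve(question, chunks, user_groups)
    if not hits:
        return {"answer": None, "citations": [],
                "note": "No source you can access covers this; please escalate."}
    # A real assistant would have an LLM draft the answer from these passages only.
    return {
        "answer": " ".join(c.text for c in hits),
        "citations": [f"{c.doc_title} <{c.url}>" for c in hits],
        "note": f"Based on {len(hits)} source(s) you have access to.",
    }


# Hypothetical sample index.
SAMPLE_CHUNKS = [
    Chunk("Infosec Policy §4.2", "https://intranet.example/infosec#s4-2",
          "Client data must not be used in test environments unless anonymised.",
          frozenset({"all-staff"})),
    Chunk("Test Data SOP, step 3", "https://intranet.example/sop/test-data#step-3",
          "Run the anonymisation script before loading client data into test environments.",
          frozenset({"engineering"})),
    Chunk("Board minutes", "https://intranet.example/board/2025-03",
          "Client renewal discussed.", frozenset({"board"})),
]

if __name__ == "__main__":
    result = grounded_answer("What’s our policy on client data in test environments?",
                             SAMPLE_CHUNKS, {"all-staff", "engineering"})
    print(result["note"])
    for citation in result["citations"]:
        print(" -", citation)
```

Filtering before ranking means a restricted document can never leak into an answer through a high relevance score; the board minutes simply do not exist for anyone outside the `board` group.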

When context clicks: three high‑leverage moments

Picture how that plays out in engineering, client delivery and operations:

1) Engineering velocity

The assistant knows your coding standards, architectural decisions, and PR templates. It drafts migration steps aligned to your repo’s CLAUDE.md and links to previous PRs that solved a similar issue. Engineers spend time shipping, not scavenging.

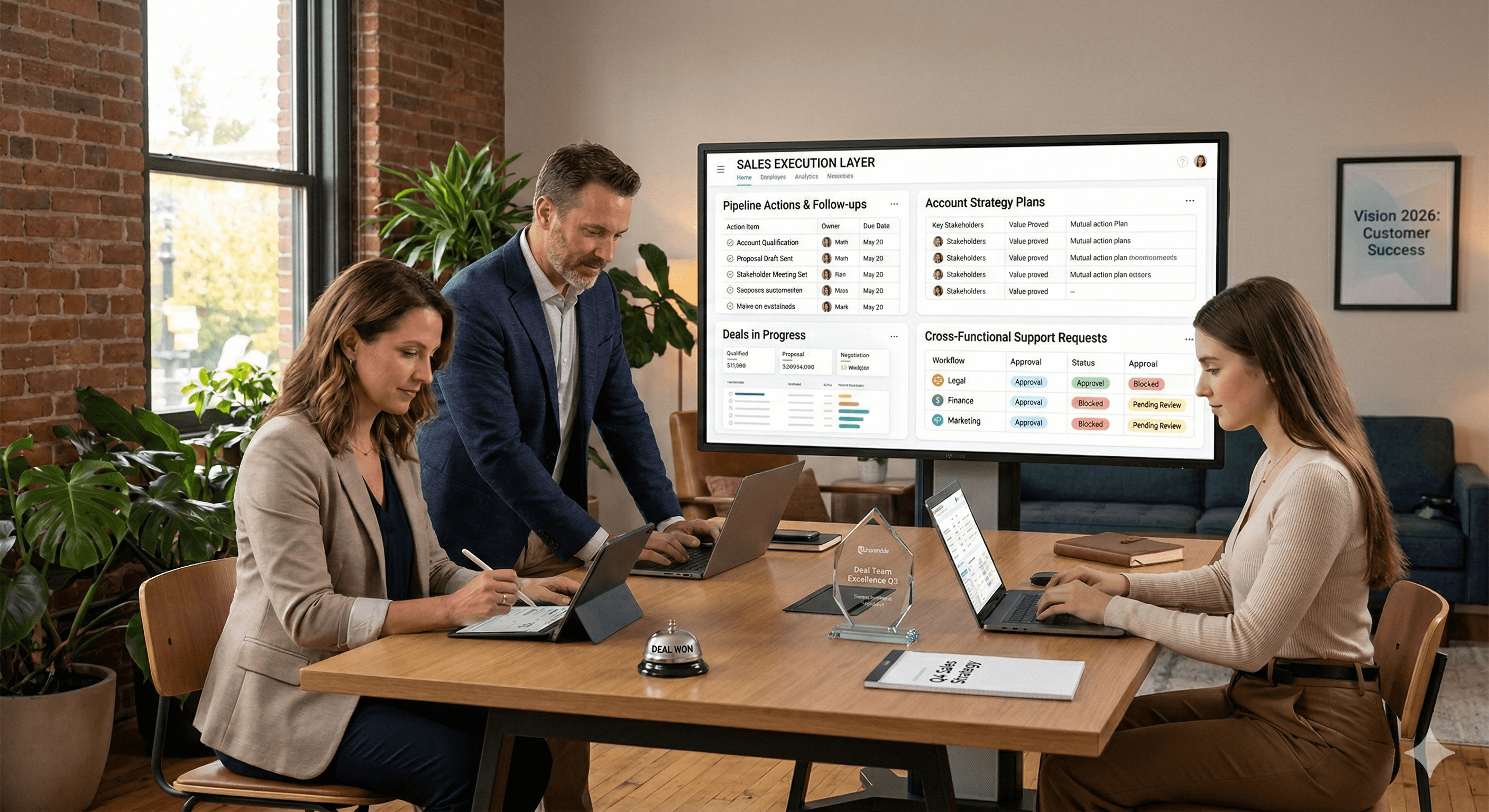

2) Client delivery without rework

A consultant asks for a client‑approved definition of “production‑ready.” The assistant pulls the signed Statement of Work (SoW) line item and the acceptance checklist, then drafts a status update you can paste into Slack or Teams. No ping‑ponging across five tools.

3) Ops that actually scales

HR needs an onboarding plan for a new role. The assistant stitches the role handbook, security training, and facilities checklist into a single, dated plan—complete with links and a short Loom script outline. Day one becomes day done.

Why this works

Retrieval‑augmented generation (RAG) done right: Answers are composed from your own sources, with citations.

Memory with boundaries: Team‑level context persists; personal details don’t.

Governance by design: SSO/SCIM, least‑privilege access, and audit trails are standard.
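“Memory with boundaries” can be illustrated with a small sketch: memory is keyed by team or project scope, anything outside those scopes is refused, items expire after a retention window, and a crude check rejects text containing an email address as a stand‑in for a proper personal‑data filter. The `ScopedMemory` class and the `team:`/`project:` scope prefixes are illustrative assumptions, not any product’s API.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Crude stand-in for a personal-data check: reject text containing an email address.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ALLOWED_SCOPES = ("team:", "project:")  # hypothetical scope naming


@dataclass
class MemoryItem:
    scope: str
    text: str
    created: datetime


@dataclass
class ScopedMemory:
    retention: timedelta
    items: list[MemoryItem] = field(default_factory=list)

    def remember(self, scope: str, text: str, now: datetime) -> bool:
        """Store a note for a team/project scope; return False if refused."""
        if not scope.startswith(ALLOWED_SCOPES):
            return False  # no per-person memory
        if EMAIL.search(text):
            return False  # looks like personal data
        self.items.append(MemoryItem(scope, text, now))
        return True

    def recall(self, scope: str, now: datetime) -> list[str]:
        """Drop anything past the retention window, then return notes for a scope."""
        self.items = [m for m in self.items if now - m.created <= self.retention]
        return [m.text for m in self.items if m.scope == scope]


if __name__ == "__main__":
    now = datetime(2025, 12, 1, tzinfo=timezone.utc)
    memory = ScopedMemory(retention=timedelta(days=90))
    memory.remember("project:acme", "Production-ready means the signed acceptance checklist is complete.", now)
    memory.remember("user:alice", "Alice prefers bullet points.", now)  # refused: personal scope
    print(memory.recall("project:acme", now))
    print(memory.recall("project:acme", now + timedelta(days=91)))  # expired
```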

Outcome: Faster decisions, fewer errors, and growing trust—because every answer shows its working.

From idea to impact: your 90‑day plan

Stop treating AI as a search engine. Treat it as an expert colleague you can brief.

Here’s a 90‑day plan to get there:

Weeks 1–3: Foundations

Curate 20 must‑answer questions per team (policy, delivery, engineering).

Connect priority sources (SharePoint/Drive/Confluence/GitHub/CRM). Mirror permissions.

Create project‑level context packs (glossary, style, coding rules).
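A context pack can be as simple as structured data rendered into the instructions the assistant sees at the start of every conversation. The sketch below assumes that approach; the `ContextPack` fields and the wording of the generated prompt are hypothetical and would need adapting to whichever assistant platform you use.

```python
from dataclasses import dataclass, field


@dataclass
class ContextPack:
    """Hypothetical project-level context pack: glossary, style and coding rules."""
    project: str
    glossary: dict[str, str] = field(default_factory=dict)
    style: list[str] = field(default_factory=list)
    coding_rules: list[str] = field(default_factory=list)

    def to_system_prompt(self) -> str:
        """Render the pack as plain-text instructions prepended to each conversation."""
        lines = [f"You are assisting the {self.project} project."]
        if self.glossary:
            lines.append("Glossary:")
            lines += [f"- {term}: {meaning}" for term, meaning in sorted(self.glossary.items())]
        if self.style:
            lines.append("Style:")
            lines += [f"- {rule}" for rule in self.style]
        if self.coding_rules:
            lines.append("Coding rules:")
            lines += [f"- {rule}" for rule in self.coding_rules]
        # Bakes in the pilot rule that every fact needs a source link.
        lines.append("Cite a source link for every factual claim; say so if you have none.")
        return "\n".join(lines)


if __name__ == "__main__":
    pack = ContextPack(
        project="Acme migration",
        glossary={"SoW": "Statement of Work", "PR": "pull request"},
        style=["Use UK English.", "Lead with a two-sentence answer."],
        coding_rules=["Follow the conventions in the repo's CLAUDE.md."],
    )
    print(pack.to_system_prompt())
```

Keeping the pack as data rather than free text makes it easy to version alongside the project and review in the same way as code.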

Weeks 4–8: Pilot

Enable citations. Require a source link for every fact.

Run side‑by‑side tests (assistant vs. human search). Track time‑to‑answer & accuracy.

Close gaps: add missing repositories; refine chunking; update context packs.
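The side‑by‑side test only needs a simple log: for each curated question, record which method answered it, how long it took and whether the answer matched the reference. This hypothetical Python sketch summarises such a log into median time‑to‑answer and accuracy per method; the sample trials are made‑up placeholders showing the shape of the data, not measured results.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Trial:
    question_id: str
    method: str      # "assistant" or "human_search"
    seconds: float   # time-to-answer
    correct: bool    # judged against the curated reference answer


def summarise(trials: list[Trial]) -> dict[str, dict[str, float]]:
    """Median time-to-answer and accuracy for each method."""
    by_method: dict[str, list[Trial]] = {}
    for t in trials:
        by_method.setdefault(t.method, []).append(t)
    return {
        method: {
            "n": len(ts),
            "median_seconds": median(t.seconds for t in ts),
            "accuracy": sum(t.correct for t in ts) / len(ts),
        }
        for method, ts in by_method.items()
    }


if __name__ == "__main__":
    # Placeholder values only, to show the shape of the log.
    log = [
        Trial("policy-01", "assistant", 40, True),
        Trial("policy-01", "human_search", 310, True),
        Trial("delivery-07", "assistant", 55, False),
        Trial("delivery-07", "human_search", 480, True),
    ]
    for method, stats in summarise(log).items():
        print(method, stats)
```

The median is used rather than the mean so that one very slow search does not dominate the comparison.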

Weeks 9–12: Scale

Automate governance (SCIM groups, DLP, retention). Turn on audit dashboards.

Roll out enablement: “how to ask”, “how to check”, “when to escalate”.

Publish a living playbook; review monthly.
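Some governance can run before content is ever indexed. As a rough sketch of a DLP‑style step, the function below masks email addresses and UK National Insurance numbers, then writes an audit entry recording how many items were redacted (never the values themselves). The patterns are simplified approximations (the National Insurance check, for example, only excludes some invalid prefix letters), and the in‑memory `AUDIT_LOG` stands in for whatever audit store your platform provides; in practice you would rely on your platform’s own DLP rules.

```python
import re
from datetime import datetime, timezone

# Simplified, hypothetical DLP patterns; real rules would come from your DLP platform.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # Approximate UK National Insurance number: two letters, six digits, suffix A-D.
    "uk_ni_number": re.compile(r"\b[A-CEGHJ-PR-TW-Z]{2}\d{6}[A-D]\b"),
}

AUDIT_LOG: list[dict] = []  # stand-in for a real audit store


def redact_for_index(doc_id: str, text: str) -> str:
    """Mask sensitive matches before indexing and log counts (not values)."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label.upper()} REDACTED]", text)
        if n:
            counts[label] = n
    AUDIT_LOG.append({
        "doc_id": doc_id,
        "redactions": counts,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return text


if __name__ == "__main__":
    print(redact_for_index("hr-042", "Contact jane.doe@client.co.uk, NI number AB123456C."))
    print(AUDIT_LOG[-1]["redactions"])
```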

Generation Digital helps UK organisations design this securely—connectors, context, and controls configured to your estate. Book a working session and we’ll map your top use‑cases and ship a pilot plan.

The Bottom Line

Custom AI is onboarding: connect your systems, give it persistent team context, and insist on citations. With that, assistants move from clever guessers to dependable colleagues who speed decisions and reduce cognitive load—without exposing data beyond existing permissions.

FAQs

How do we avoid hallucinations? Ground answers in your content and require citations. Many workplace “hallucinations” come from missing context rather than model failure.

Is memory safe? Yes—when scoped. Use team/project memory and set sensible retention. Avoid storing personal data.

Do we need a data lake? No. Start with connectors and retrieval. Federate search before you consolidate.

Will this work with Microsoft 365? Yes. SharePoint/OneDrive/Teams are common first connectors.
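Federating search can start with a thin layer that queries each connector and merges the ranked results. Because relevance scores from different systems are rarely comparable, this sketch swaps in reciprocal rank fusion, which combines results using only their rank positions. The connector functions are in‑memory stand‑ins that ignore the query (a real one would call SharePoint, Confluence or GitHub search), the titles and URLs are hypothetical, and `rrf_k=60` is a commonly used default rather than a tuned value.

```python
from typing import Callable

# A connector takes a query and returns its own ranked (title, url) results.
Connector = Callable[[str], list[tuple[str, str]]]


def federated_search(query: str, connectors: dict[str, Connector],
                     top_n: int = 5, rrf_k: int = 60) -> list[tuple[str, str, str]]:
    """Merge per-connector rankings with reciprocal rank fusion (RRF)."""
    fused: dict[str, float] = {}
    first_seen: dict[str, tuple[str, str]] = {}  # url -> (source, title)
    for source, search in connectors.items():
        for rank, (title, url) in enumerate(search(query), start=1):
            # Each appearance adds 1 / (rrf_k + rank); raw scores are never compared.
            fused[url] = fused.get(url, 0.0) + 1.0 / (rrf_k + rank)
            first_seen.setdefault(url, (source, title))
    ranked = sorted(fused, key=fused.__getitem__, reverse=True)
    return [(*first_seen[url], url) for url in ranked[:top_n]]


# In-memory stand-ins for real connectors (hypothetical titles and URLs).
def sharepoint(query: str) -> list[tuple[str, str]]:
    return [("Travel policy", "https://sp.example/travel"),
            ("Expenses policy", "https://sp.example/expenses")]


def teams(query: str) -> list[tuple[str, str]]:
    # Files shared in Teams are stored in SharePoint, so the same URL can come back from both.
    return [("Expenses policy", "https://sp.example/expenses"),
            ("Expenses claim thread", "https://teams.example/finance/123")]


if __name__ == "__main__":
    for source, title, url in federated_search("expenses policy",
                                               {"sharepoint": sharepoint, "teams": teams}):
        print(f"{title} [{source}] {url}")
```

A document found by several connectors accumulates score from each, so it rises to the top and appears once, which is the behaviour you want before committing to any consolidation.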

Custom AI for organisations means connecting internal systems (SharePoint, Drive, GitHub, CRM) to an assistant and adding persistent team context (policies, standards, client info). The assistant answers with citations to your files, reducing hallucinations and speeding decisions—while respecting existing permissions, retention rules, and data residency requirements.

Next steps?

Ready to turn AI into an expert colleague? Contact Generation Digital to plan a secure, UK‑ready rollout.

Get weekly AI news and advice delivered to your inbox

By subscribing you consent to Generation Digital storing and processing your details in line with our privacy policy. You can read the full policy at gend.co/privacy.

Upcoming Workshops and Webinars

Operational Clarity at Scale - Asana

Virtual Webinar

Weds 25th February 2026

Online

Work With AI Teammates - Asana

In-Person Workshop

Thurs 26th February 2026

London, UK

From Idea to Prototype - AI in Miro

Virtual Webinar

Weds 18th February 2026

Online

Generation Digital

UK Office

Generation Digital Ltd

33 Queen St,

London

EC4R 1AP

United Kingdom

Canada Office

Generation Digital Americas Inc

181 Bay St., Suite 1800

Toronto, ON, M5J 2T9

Canada

USA Office

Generation Digital Americas Inc

77 Sands St,

Brooklyn, NY 11201,

United States

EU Office

Generation Digital Software

Elgee Building

Dundalk

A91 X2R3

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company No: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
