Sora Feed Philosophy: Creative, Safe, and Steerable

OpenAI

Sora

Feb 3, 2026

The Sora feed is designed to inspire people to create, not to maximise passive scrolling. It uses personalised recommendations based on signals such as your activity and engagement, and adds safety layers to filter harmful content. For families, parental controls can limit personalisation, continuous scrolling, and messaging on teen accounts.

Most feeds are built to keep you watching. The Sora feed is built to help you make—to show what’s possible, spark ideas, and encourage people to try creating for themselves. That philosophy matters, because discovery tools shape behaviour: what you see influences what you attempt next.

At the same time, OpenAI frames safety as part of the product design—not an afterthought. The feed is supported by layered guardrails and clear controls, including tools for parents managing teen accounts.

The Sora feed philosophy in plain English

OpenAI’s goal for the Sora feed is simple: inspire creation and learning. Instead of optimising only for attention, the feed aims to highlight ideas, formats, and techniques that nudge people from “watching” to “trying”.

This philosophy shows up in three practical ways:

Personalised inspiration (the feed adapts to you)

Steerability (you can shape what you see)

Safety and control (guardrails plus parental options where needed)

How recommendations work

Sora’s recommendation system is designed to give you a feed that matches your interests and creative direction. OpenAI notes that personalisation can consider signals such as your activity and engagement in Sora, helping tailor what appears in your feed.

Practically, that means if you spend time on certain styles, subjects, or formats, the feed will tend to surface more content that feels relevant—so you can learn patterns, remix ideas, and build confidence faster.
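As a rough illustration of how engagement signals could steer a feed, the toy sketch below ranks candidate videos by how much the viewer has engaged with each style tag. It is not OpenAI’s algorithm: the tags, action weights, and function names are all hypothetical, and a real recommender would draw on far richer signals.

```python
from collections import Counter

# Hypothetical engagement weights: stronger actions count for more.
WEIGHTS = {"watch": 1.0, "like": 2.0, "remix": 3.0}

def build_affinity(events):
    """Sum weighted engagement per style tag from (tag, action) pairs."""
    affinity = Counter()
    for tag, action in events:
        affinity[tag] += WEIGHTS.get(action, 0.0)
    return affinity

def rank_feed(candidates, affinity):
    """Order candidate videos by the total affinity of their tags, highest first."""
    def score(video):
        return sum(affinity.get(tag, 0.0) for tag in video["tags"])
    return sorted(candidates, key=score, reverse=True)

# Illustrative data only: a viewer who mostly engages with stop-motion.
events = [("stop-motion", "watch"), ("stop-motion", "remix"), ("anime", "like")]
candidates = [
    {"id": "a", "tags": ["anime"]},
    {"id": "b", "tags": ["stop-motion", "claymation"]},
    {"id": "c", "tags": ["sports"]},
]
ranked = rank_feed(candidates, build_affinity(events))
print([video["id"] for video in ranked])  # ['b', 'a', 'c']
```

The point of the sketch is the feedback loop: what you engage with raises the score of similar content, which is why deliberate engagement is a practical way to steer a feed.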

Safety isn’t one feature—it’s a set of layers

OpenAI describes “layered” safety: guardrails at creation time, plus filtering and review designed to reduce harmful content in the feed, while still allowing room for experimentation and artistic expression.

For a creative platform, that balance is the point: too few safeguards and the feed becomes risky; too many blunt restrictions and it becomes sterile. The approach here is to combine automation with policies and intervention points where needed.

Parental controls: what parents can actually manage

OpenAI’s documentation is specific about what parents can control. Parents can manage teen account settings for Sora via ChatGPT parental controls, including:

Personalised feed (opt out of a personalised feed)

Continuous feed (control uninterrupted scrolling)

Messaging (turn direct messages on/off)

That’s helpful because it maps neatly to common safety goals:

Reduce algorithmic rabbit holes (personalisation off)

Add friction to endless scrolling (continuous feed control)

Limit unwanted contact (messaging off)
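The mapping above can be sketched as a small settings model. This is an illustration only: the field names are hypothetical and do not reflect any actual ChatGPT or Sora settings API; the sketch simply shows which safety goal each switched-off control addresses.

```python
from dataclasses import dataclass

@dataclass
class TeenSoraSettings:
    # Hypothetical field names mirroring the three controls described above.
    personalised_feed: bool = True
    continuous_feed: bool = True
    direct_messages: bool = True

# Safety goal addressed when each control is switched off.
GOALS = {
    "personalised_feed": "reduce algorithmic rabbit holes",
    "continuous_feed": "add friction to endless scrolling",
    "direct_messages": "limit unwanted contact",
}

def active_safeguards(settings):
    """Return the safety goals covered by the controls a parent has turned off."""
    return [goal for name, goal in GOALS.items() if not getattr(settings, name)]

cautious = TeenSoraSettings(personalised_feed=False, direct_messages=False)
print(active_safeguards(cautious))
# ['reduce algorithmic rabbit holes', 'limit unwanted contact']
```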

Practical steps: how to customise Sora for creativity and safety

Here’s a simple setup that works for most people:

Shape your feed intentionally

Engage with the styles you want to learn from and skip what you don’t; recommendation signals are influenced by what you choose to watch and interact with.

Use the controls that match your goal

If you want broader discovery, keep personalisation on. If you want fewer surprises (especially for teens), consider turning personalisation off via parental controls.

Understand what gets shared

OpenAI’s Sora data controls explain that videos can be eligible for publication to the explore/feed experience depending on settings and content type, and that published videos can be remixed or downloaded by others (subject to rules).

Summary

The Sora feed is designed to push creativity forward—helping people learn what’s possible and encouraging them to create—while keeping safety and user control built into the experience. With steerable recommendations, layered safeguards, and parental controls for teen accounts, it aims to be a feed you can actually trust and tailor.

Next steps (Generation Digital): If you’re exploring Sora for brand, education, or internal innovation, we can help you define safe use cases, set governance, and design workflows that turn curiosity into outcomes.

FAQs

Q1: What makes the Sora feed unique?

It’s designed to inspire people to create—using recommendations that surface ideas and formats—rather than simply maximising passive consumption.

Q2: How does Sora help keep users safe?

OpenAI describes layered safety: guardrails during creation and filtering/review designed to reduce harmful content in the feed.

Q3: Can the Sora feed be personalised?

Yes. The feed can use signals such as your activity and engagement to tailor recommendations.

Q4: What parental controls are available for Sora?

Parents can manage teen settings such as personalised feed, continuous feed, and messaging via ChatGPT parental controls.

Recommended schema: FAQPage (plus Article)
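A minimal sketch of what the FAQPage markup could look like, built from two of the FAQs above. The `@type`, `mainEntity`, `name`, and `acceptedAnswer` properties follow the schema.org vocabulary; the helper name `faq_jsonld` is our own, and the output would still need to be embedded in the page as JSON-LD and validated before publishing.

```python
import json

def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

faqs = [
    (
        "Can the Sora feed be personalised?",
        "Yes. The feed can use signals such as your activity and engagement "
        "to tailor recommendations.",
    ),
    (
        "What parental controls are available for Sora?",
        "Parents can manage teen settings such as personalised feed, "
        "continuous feed, and messaging via ChatGPT parental controls.",
    ),
]

markup = faq_jsonld(faqs)
print(json.dumps(markup, indent=2))
```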

Internal link opportunities on gend.co

A short “Responsible AI video in organisations” explainer (governance + brand safety)

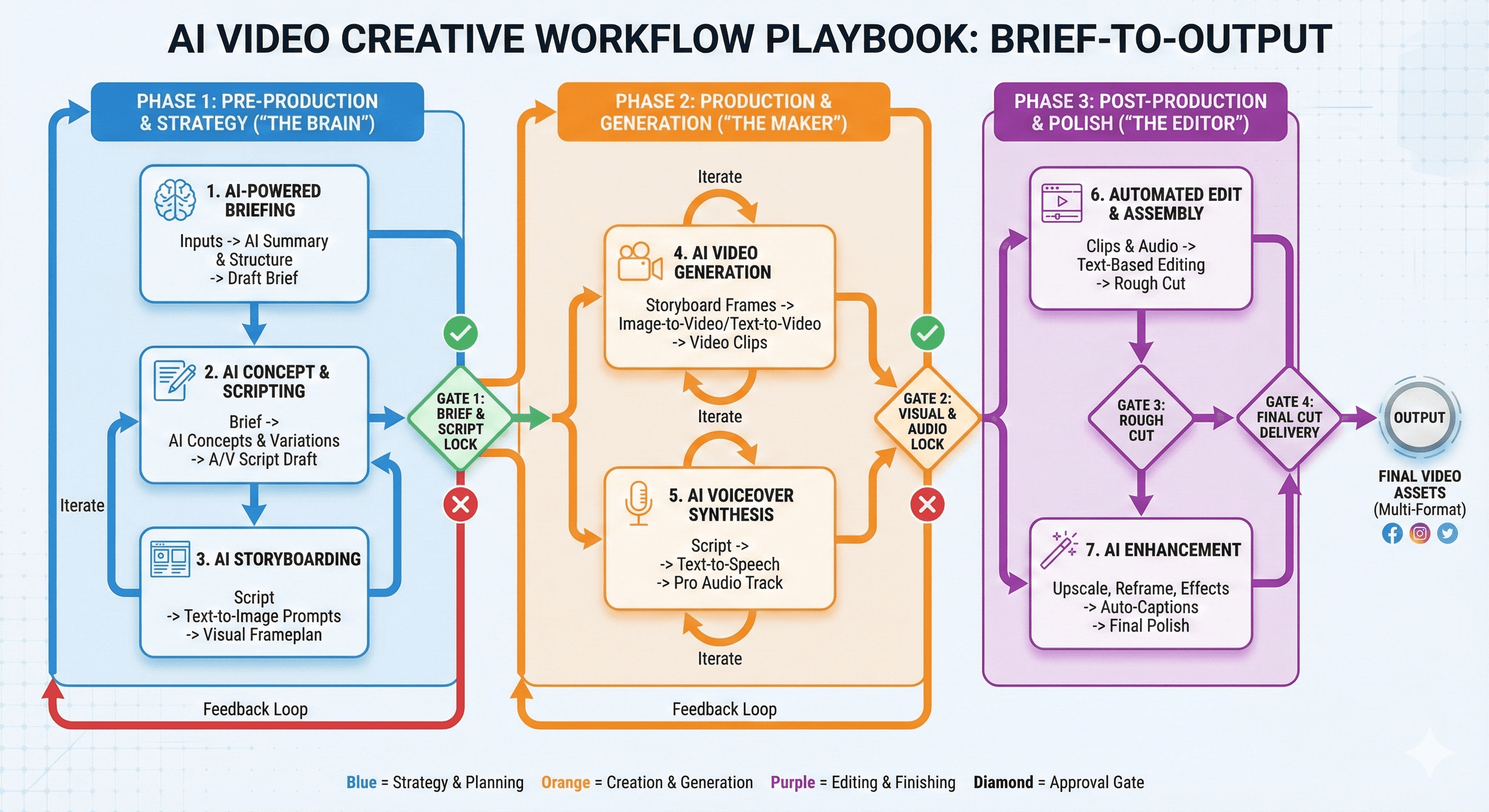

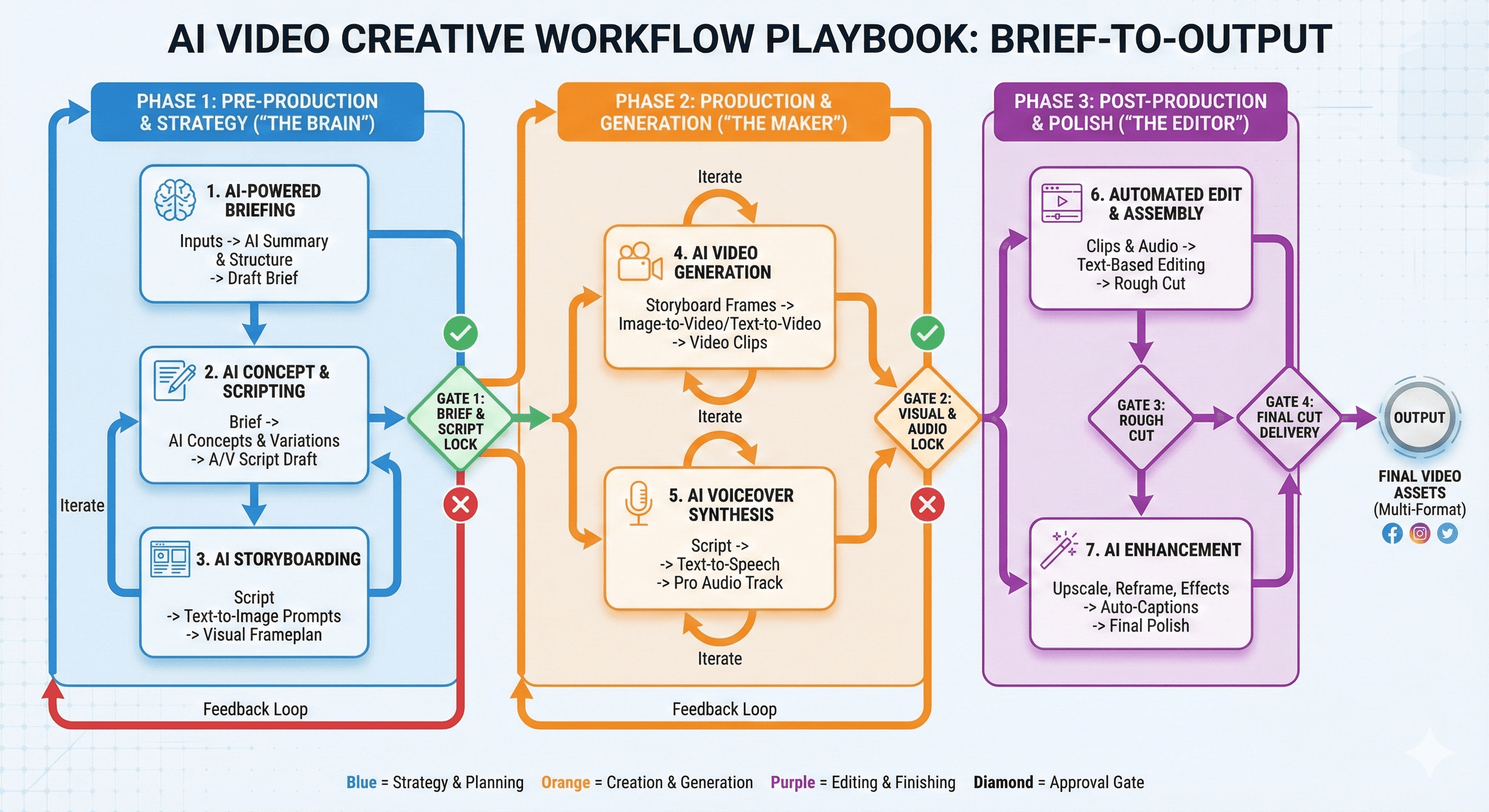

A “Creative workflow with AI video” playbook (brief-to-output process, approvals, templates)

A “Family and education safety” page (if you’re targeting schools/parents)

Generation

Digital

UK Office

Generation Digital Ltd

33 Queen St,

London

EC4R 1AP

United Kingdom

Canada Office

Generation Digital Americas Inc

181 Bay St., Suite 1800

Toronto, ON, M5J 2T9

Canada

USA Office

Generation Digital Americas Inc

77 Sands St,

Brooklyn, NY 11201,

United States

EU Office

Generation Digital Software

Elgee Building

Dundalk

A91 X2R3

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company No: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
