Trust in AI - How to Scale Safely, from Stack Overflow’s CEO

AI

Feb 2, 2026

Not sure what to do next with AI?

Assess readiness, risk, and priorities in under an hour.

➔ Download Our Free AI Readiness Pack

Trust in AI is the degree of confidence people and organisations have that AI systems are reliable, safe, and aligned with policy and ethics. To scale AI, leaders must govern data quality, make outcomes auditable, and embed human-validated knowledge into daily workflows.

AI adoption is racing ahead, but trust often lags behind. In a new McKinsey interview, Stack Overflow’s CEO, Prashanth Chandrasekar, explains why enterprises stall after pilots and how better knowledge quality, governance, and developer experience unlock scale. Here’s what leaders should act on now.

Why trust—not tooling—decides AI at scale

Many organisations can ship a demo. Fewer can deploy dependable systems across teams. The difference is trust: consistent, explainable outputs; transparent data lineage; and clear accountability from model to decision. McKinsey’s interview centres on these organisational levers rather than just models or infrastructure.

Make knowledge quality your foundation

Enterprises need a single source of truth for code, policies, and domain know-how. Chandrasekar emphasises human-validated knowledge—curated answers, coding patterns, architecture decisions—as the substrate that makes AI reliable in daily development. Systems built on vetted knowledge are easier to audit and improve.

Practical moves

Stand up a “knowledge as a service” layer: codified standards, design docs, and decision records that AI can retrieve.

Use retrieval-augmented generation (RAG) to ground AI outputs in that corpus; require citations in answers.

Track answer acceptance and downstream usage to measure trust.
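To make “ground and cite” concrete, here is a minimal sketch assuming a small in-memory corpus of curated records and a naive keyword-overlap retriever standing in for real vector or BM25 search. The `Record` type, the record IDs, the `min_overlap` threshold and the function names are all hypothetical. A production assistant would pass the retrieved passages to a model instead of returning them directly, but the key behaviours carry over: answers cite their sources, and the assistant declines when nothing curated supports an answer.

```python
from dataclasses import dataclass

PUNCT = ".,;:!?()\"'"


@dataclass(frozen=True)
class Record:
    """A human-reviewed knowledge item (standard, design doc, or decision record)."""
    record_id: str
    text: str


def tokenize(text: str) -> set[str]:
    # Lower-cased word set; a stand-in for embeddings or BM25 scoring.
    words = (w.strip(PUNCT).lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, corpus: list[Record], k: int = 2, min_overlap: int = 2) -> list[Record]:
    """Return up to k records sharing at least min_overlap terms with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(r.text)), r) for r in corpus]
    scored = [(s, r) for s, r in scored if s >= min_overlap]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:k]]


def grounded_answer(query: str, corpus: list[Record]) -> dict:
    """Answer only from retrieved records and always attach their IDs as citations."""
    sources = retrieve(query, corpus)
    if not sources:
        # Decline rather than guess when no curated record supports an answer.
        return {"answer": None, "citations": [], "grounded": False}
    context = " ".join(r.text for r in sources)
    return {"answer": context, "citations": [r.record_id for r in sources], "grounded": True}
```

The `grounded` flag and the citation list are what a team would log to track answer acceptance and, later, the grounded-answer rate.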

Recent interviews echo the same pattern: developers use AI heavily but trust it selectively—so grounding responses in reviewed knowledge is critical.

Treat developers as the trust engine

Developers are both the earliest adopters and the first line of defence. The interview points to evolving sentiment on AI assistance: teams embrace speed gains but insist on code review, tests, and provenance. Embed trust practices where developers work—IDEs, repos, CI/CD—rather than in separate dashboards.

What to implement

Policy-as-code guardrails in pipelines (licensing, secrets, dependency risks).

Mandatory unit and property-based tests for AI-generated code.

Pull-request templates that require sources when AI contributed.
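The pull-request rules above can be expressed as a small policy-as-code check that CI runs on every PR. This sketch assumes a hypothetical PR-description convention (an `AI-assisted: yes` line and a `Sources:` section with bullet items) and uses two illustrative secret patterns. It is not a replacement for a dedicated secret scanner or for licence and dependency-risk tooling.

```python
import re

# Illustrative patterns only; dedicated scanners cover far more cases.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),  # shape of an AWS access key ID
    re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
]

AI_FLAG = re.compile(r"^AI-assisted:\s*yes\s*$", re.IGNORECASE | re.MULTILINE)
# A "Sources:" line followed by at least one non-empty "- item" bullet.
SOURCES_SECTION = re.compile(r"^Sources:[ \t]*\n(?:[ \t]*-[ \t]*\S.*(?:\n|$))+", re.MULTILINE)


def check_pull_request(description: str, diff: str) -> list[str]:
    """Return policy violations; an empty list means the PR may proceed."""
    violations = []
    if AI_FLAG.search(description) and not SOURCES_SECTION.search(description):
        violations.append("AI-assisted PR must list sources under 'Sources:'")
    added_lines = [line for line in diff.splitlines() if line.startswith("+")]
    for pattern in SECRET_PATTERNS:
        # Only lines added by the PR are inspected; one report per pattern.
        if any(pattern.search(line) for line in added_lines):
            violations.append(f"possible secret matching {pattern.pattern!r}")
    return violations
```

A CI job would fail the build whenever the returned list is non-empty and print each violation for the author.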

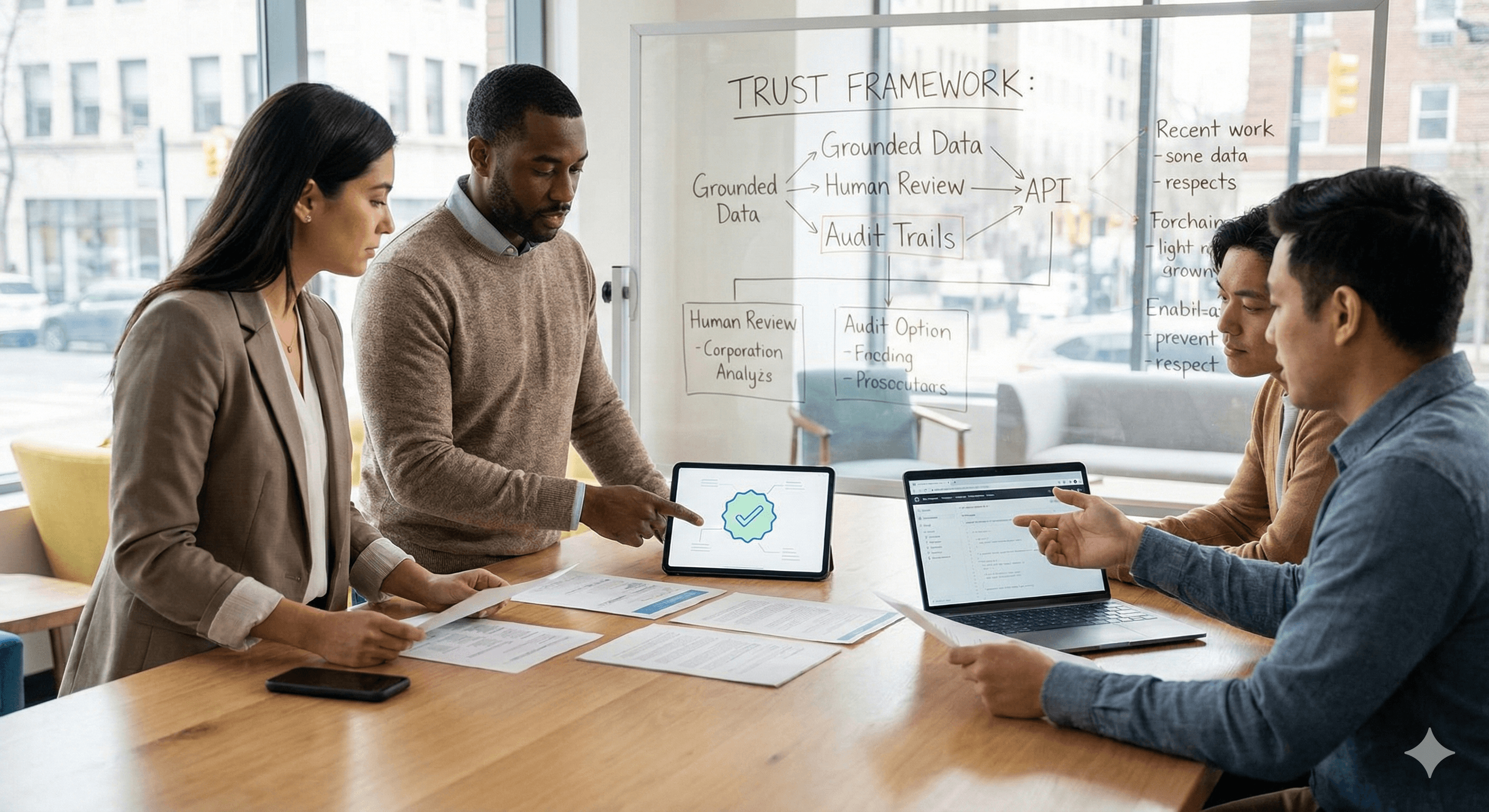

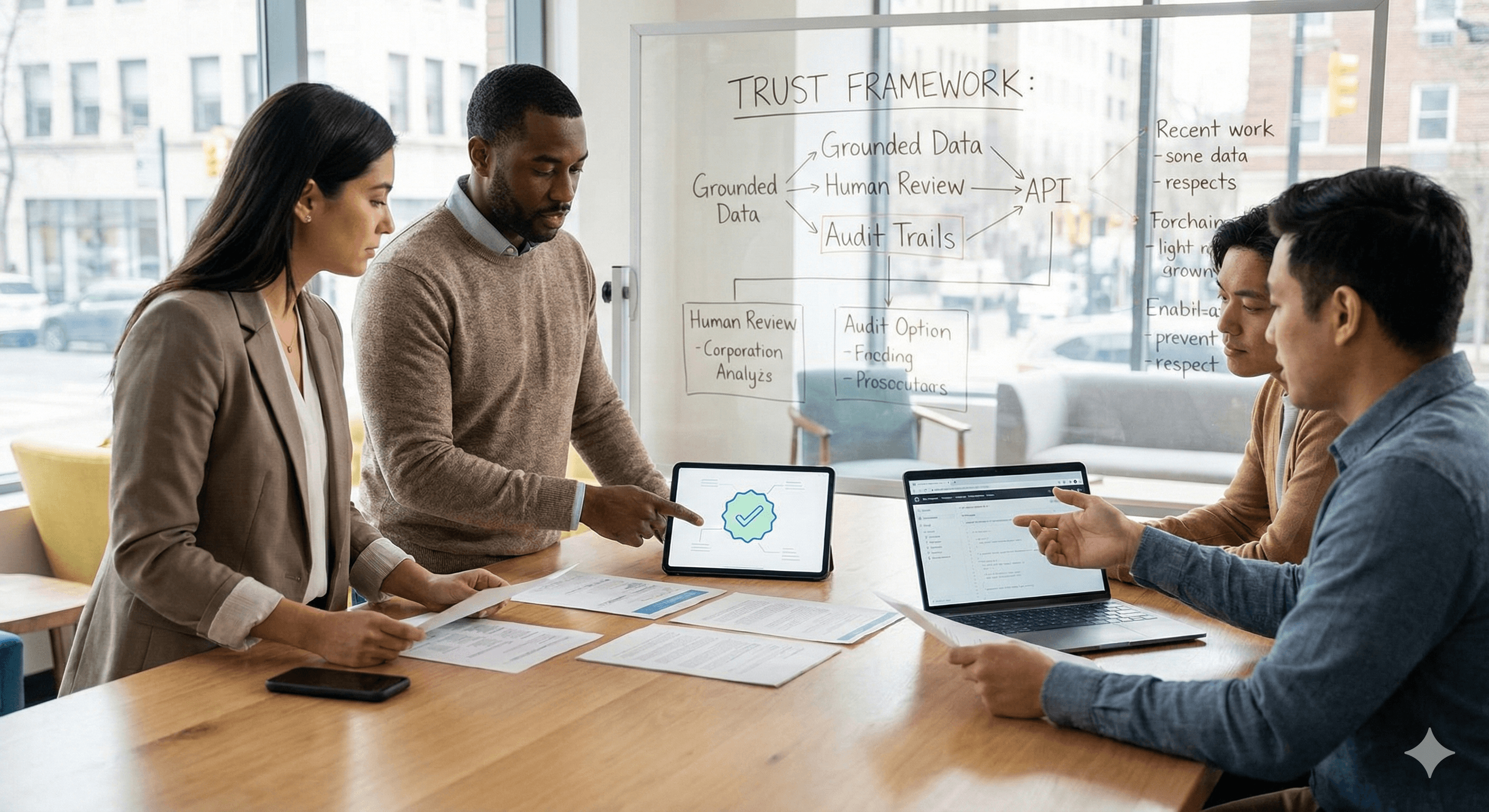

Govern for scale: clear roles, auditable outcomes

You can’t rely on “best efforts” as usage grows. Define who approves models, who owns prompts, and how you roll back failures. Keep an audit trail from prompt to output to production decision. Leaders interviewed by McKinsey & Company consistently link trust to operational discipline, not slogans.
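An audit trail “from prompt to output to production decision” can be made tamper-evident with a simple hash chain, where each entry stores the SHA-256 digest of the previous one. The sketch below is an assumption-laden illustration: the `AuditTrail` class, its field names and the in-memory list are hypothetical, and a real deployment would write to durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only log in which each entry commits to the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, prompt: str, output: str, sources: list[str], decision: str, approver: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prompt": prompt,
            "output": output,
            "sources": sources,
            "decision": decision,  # e.g. "merged", "rejected", "rolled_back"
            "approver": approver,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash a canonical JSON form of every field except the hash itself.
        payload = {k: v for k, v in body.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

Because each hash covers the previous one, quietly rewriting an old output or approval invalidates every later entry, which is what makes the trail useful to auditors.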

A simple operating model

Product owns use-case value and acceptance criteria.

Engineering owns integration, resilience, and benchmarks.

Data/ML owns model selection, evaluation, and drift.

Risk/Legal owns policy, IP, and regulatory alignment.

Internal Comms owns transparency with employees.
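One way to make this operating model enforceable is to encode it as configuration that deployment tooling checks before a change ships. In this sketch, the change-type names, the mapping to owners (including who signs off prompt changes) and the rule that every listed owner must approve are all hypothetical choices for illustration.

```python
# Hypothetical mapping of change types to the roles that must approve them.
REQUIRED_APPROVERS = {
    "new_use_case": {"Product", "Risk/Legal"},
    "model_change": {"Data/ML", "Engineering", "Risk/Legal"},
    "prompt_change": {"Product", "Data/ML"},
    "employee_facing_launch": {"Product", "Risk/Legal", "Internal Comms"},
}


def missing_approvals(change_type: str, approvals: set[str]) -> set[str]:
    """Return the roles that still need to sign off; raise if no owner is defined."""
    try:
        required = REQUIRED_APPROVERS[change_type]
    except KeyError:
        # An unmapped change type is itself a governance gap, so fail loudly.
        raise ValueError(f"no owner defined for change type {change_type!r}") from None
    return required - approvals
```

A release gate would block deployment until `missing_approvals` returns an empty set.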

Measure trust the way you measure reliability

Adopt SLO-style metrics for AI: grounded-answer rate, citation-coverage rate, harmful-output rate, and time-to-rollback. Publish them as you would reliability statistics. Several 2025 interviews with industry leaders reinforce the point that organisations will demand verifiable ROI and quality, not experimentation for its own sake.
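As a rough illustration, the four metrics can be computed from logged interactions and rollback incidents and then compared with targets, much as an SLO report would be. The `Interaction` fields, the metric definitions (for example, citation coverage as cited claims over all claims) and the target values below are assumptions, not published benchmarks.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Interaction:
    grounded: bool     # answer was built from retrieved, curated sources
    claims: int        # factual statements in the answer
    cited_claims: int  # statements carrying a citation
    harmful: bool      # flagged by a reviewer or safety filter


# Illustrative targets; each organisation should set its own.
TARGETS = {
    "grounded_answer_rate": (">=", 0.95),
    "citation_coverage_rate": (">=", 0.90),
    "harmful_output_rate": ("<=", 0.001),
    "median_minutes_to_rollback": ("<=", 30.0),
}


def trust_report(interactions: list[Interaction], rollback_minutes: list[float]) -> dict:
    if not interactions or not rollback_minutes:
        raise ValueError("need at least one interaction and one rollback sample")
    n = len(interactions)
    total_claims = sum(i.claims for i in interactions)
    metrics = {
        "grounded_answer_rate": sum(i.grounded for i in interactions) / n,
        # With no claims at all, nothing is left uncited.
        "citation_coverage_rate": (
            sum(i.cited_claims for i in interactions) / total_claims if total_claims else 1.0
        ),
        "harmful_output_rate": sum(i.harmful for i in interactions) / n,
        "median_minutes_to_rollback": median(rollback_minutes),
    }
    report = {}
    for name, value in metrics.items():
        op, target = TARGETS[name]
        met = value >= target if op == ">=" else value <= target
        report[name] = {"value": value, "target": target, "met": met}
    return report
```

Publishing the `met` flags weekly, next to uptime and latency SLOs, keeps AI quality visible in the same reviews.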

Build with communities, not just for them

Stack Overflow’s vantage point shows that community norms—review, voting, curation—are a scalable mechanism for quality. Borrow that “human-in-the-loop” pattern inside your enterprise: expert review boards for prompts and patterns; contributor recognition in performance frameworks; clear routes to escalate errors.
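Community-style curation can be approximated inside an enterprise with a simple promotion rule: a prompt or coding pattern becomes “approved” only after enough expert reviews and a net-positive vote, and any open escalation blocks it. The `Pattern` class, its thresholds and status names are hypothetical; Stack Overflow’s own reputation and voting rules are more involved and are not reproduced here.

```python
from dataclasses import dataclass, field


@dataclass
class Pattern:
    """A prompt or coding pattern submitted to an internal review board."""
    name: str
    expert_approvals: set[str] = field(default_factory=set)
    upvotes: int = 0
    downvotes: int = 0
    open_escalations: list[str] = field(default_factory=list)

    def status(self, min_experts: int = 2) -> str:
        if self.open_escalations:
            # Any unresolved error report blocks use until a reviewer closes it.
            return "blocked"
        if len(self.expert_approvals) >= min_experts and self.upvotes > self.downvotes:
            return "approved"
        return "in_review"
```

Only patterns in the `approved` state would be indexed into the retrieval corpus that production assistants draw on.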

Five actions to take this quarter

Inventory knowledge: catalogue the docs, patterns, and policies your AI should trust; fix ROT (redundant, obsolete, trivial) content.

Ground everything: require RAG with human-curated sources for production assistants.

Test like you mean it: add adversarial and property-based tests to PR checks for AI-touched code.

Instrument trust: log prompts, sources, citations, and reviewer sign-off; report grounded-answer rate weekly.

Upskill teams: train developers and product leads on evaluation, bias, and provenance; make it part of engineering excellence.
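For the “test like you mean it” action, property-based tests check invariants over many generated inputs instead of a few hand-picked examples, and a short list of adversarial cases covers known edge conditions. This sketch uses only the standard library’s `random` module (libraries such as Hypothesis add input shrinking and smarter generation). `dedupe_preserving_order` stands in for a hypothetical AI-generated helper under test.

```python
import random


def dedupe_preserving_order(items: list[int]) -> list[int]:
    """Hypothetical AI-generated helper: drop duplicates, keep first occurrences."""
    seen: set[int] = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


def check_properties(items: list[int]) -> None:
    out = dedupe_preserving_order(items)
    assert len(out) == len(set(out)), "output contains duplicates"
    assert set(out) == set(items), "output lost or invented elements"
    assert dedupe_preserving_order(out) == out, "not idempotent"
    # Kept elements must appear in the order of their first occurrence.
    assert out == sorted(set(items), key=items.index), "order not preserved"


def run_property_tests(trials: int = 500, seed: int = 1234) -> int:
    rng = random.Random(seed)  # fixed seed so CI failures are reproducible
    adversarial = [[], [0], [7] * 50, list(range(100)), list(range(50, 0, -1)) * 2]
    for case in adversarial:
        check_properties(case)
    for _ in range(trials):
        size = rng.randint(0, 30)
        check_properties([rng.randint(-5, 5) for _ in range(size)])
    return len(adversarial) + trials
```

Running `run_property_tests()` as a PR check means any AI-touched change to the helper must keep all four properties intact.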

What this means for UK organisations

UK boards are asking for evidence that AI is safe, lawful, and materially valuable. A trust-first approach—knowledge quality, auditable pipelines, developer-centred guardrails—reduces risk while accelerating delivery. That is how you move from “promising pilot” to enterprise-wide impact. Recent coverage underscores that adoption outpaces trust; the winners turn trust into a competency.

Further reading from McKinsey on AI in software development covers “knowledge as a service” and developer-centred scaling, and provides useful context alongside the interview.

FAQ

Q1: What is “trust in AI” in an enterprise context?

It’s measurable confidence that AI outputs are accurate, safe, and policy-compliant—supported by governance, audits, and human-validated knowledge.

Q2: How do we increase developer trust in AI assistants?

Ground responses in reviewed knowledge, require citations, enforce tests on AI-generated code, and keep humans in review loops.

Q3: What metrics prove AI is trustworthy at scale?

Grounded-answer rate, citation coverage, harmful-output rate, evaluation scores on internal benchmarks, and time-to-rollback.

Q4: Why do AI pilots succeed but scaling fails?

Pilots bypass governance and rely on experts; at scale you need codified knowledge, roles, and auditable processes.

Generation Digital

UK Office

Generation Digital Ltd

33 Queen St,

London

EC4R 1AP

United Kingdom

Canada Office

Generation Digital Americas Inc

181 Bay St., Suite 1800

Toronto, ON, M5J 2T9

Canada

USA Office

Generation Digital Americas Inc

77 Sands St,

Brooklyn, NY 11201,

United States

EU Office

Generation Digital Software

Elgee Building

Dundalk

A91 X2R3

Ireland

Middle East Office

6994 Alsharq 3890,

An Narjis,

Riyadh 13343,

Saudi Arabia

Company No: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
