Build with Claude: Practical Guidance and Best Practices for Canadian Success

Claude

Dec 9, 2025

Not sure what to do next with AI?

Assess readiness, risk, and priorities in under an hour.

To build with Claude, choose the right model, ground it in your data, and return structured outputs. Use tools (and MCP) for actions, prompt caching to cut latency and cost, and computer use for desktop tasks. Wrap everything in evaluations, guardrails, and a rollout plan so that pilots turn into valuable production systems.

Why this matters now

Claude’s latest models (Opus/Sonnet 4.5) bring improved coding, agent workflows, and long-context reasoning. By combining structured outputs, prompt caching, tool use, computer use, and the Model Context Protocol (MCP), you can move from interesting demos to reliable systems that deliver value.

Model selection

Opus 4.5: excellent for intricate coding, agents, research, and multi-step plans. Choose it when quality matters more than cost.

Sonnet 4.5: balanced performance and price; a sensible default for most applications and APIs.

Haiku (if available in your region): the fastest and most affordable option for straightforward classification, extraction, or routing.

Context window: up to 200k tokens with the current 4.5 family. Even so, avoid “just pasting everything”; retrieve and include only the relevant data.

Golden rules (to save time and effort)

Return structured data rather than prose. Request structured outputs (e.g., JSON Schema) so that your code can process results deterministically.

Keep prompts modular. Use a short, stable system prompt, and supply task instructions and examples separately.

Cache large prefixes. Use prompt caching for policies, examples, and long background context to reduce latency and cost.

Connect tools consistently. Use tool use for function calls and MCP to standardize connections to external systems.

Create control points. Keep a human in the loop for high-risk tasks; automate low-risk ones.

Measure and improve. Monitor accuracy, latency, cost, and business outcomes (time savings, quality, risk, revenue).

Quickstart (10–15 minutes)

1) Pick a model and draft the system prompt

Define the role, target audience, constraints, and response behavior (e.g., “say I don’t know when uncertain”). Keep it concise.

2) Specify the output schema

Design a JSON Schema that captures exactly what you need (types, enums, required fields). Avoid advanced schema features at first.

3) Add tools (optional at first, essential later)

Start with simple tools like “search” or “getCustomer.” Move to MCP when you are ready to standardize connections.

4) Enable prompt caching

Cache consistent prefixes (like policies and examples) to minimize latency and cost for each request.

5) Build a small evaluation

Write 10–20 cases drawn from real inputs. Score them on exact-match fields and business rules.

6) Launch with a pilot group

Set up logging and dashboards, then use the data to decide whether to scale, iterate, or stop.

Example: TypeScript Messages API call with structured JSON output

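```typescript
import Anthropic from "@anthropic-ai/sdk";

const client = new Anthropic({ apiKey: process.env.ANTHROPIC_API_KEY });

// Structured outputs accept only a subset of JSON Schema, so numeric ranges and
// string lengths are described in text here and re-checked in application code.
const schema = {
  type: "object",
  additionalProperties: false,
  properties: {
    intent: { type: "string", enum: ["support", "sales", "other"] },
    priority: { type: "integer", description: "1 (lowest) to 5 (highest)" },
    summary: { type: "string", description: "At most 240 characters" },
  },
  required: ["intent", "priority", "summary"],
};

// Structured outputs are in beta at the time of writing, hence the beta client and flag.
const msg = await client.beta.messages.create({
  model: "claude-sonnet-4-5",
  max_tokens: 800,
  betas: ["structured-outputs-2025-11-13"],
  system: "You are a helpful triage assistant. If uncertain, say you are uncertain.",
  messages: [{ role: "user", content: "My checkout fails at payment step" }],
  output_format: { type: "json_schema", schema },
});

// content[0] is a union of block types; narrow to a text block before reading it.
const block = msg.content[0];
if (block.type !== "text") throw new Error(`Unexpected block type: ${block.type}`);
const result = JSON.parse(block.text);
```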
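Even with a schema, it is worth re-checking the parsed result in application code before acting on it, especially limits such as the 1–5 priority range and the 240-character summary. The sketch below assumes the triage fields from the example above; `parseTriage` and its error messages are hypothetical helpers, not part of the SDK.

```typescript
// Hypothetical post-check for the triage JSON returned by the model.
type Intent = "support" | "sales" | "other";

interface Triage {
  intent: Intent;
  priority: number;
  summary: string;
}

type ParseResult = { ok: true; value: Triage } | { ok: false; error: string };

const INTENTS: readonly string[] = ["support", "sales", "other"];

function parseTriage(text: string): ParseResult {
  let data: unknown;
  try {
    data = JSON.parse(text);
  } catch {
    return { ok: false, error: "Response is not valid JSON" };
  }
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    return { ok: false, error: "Expected a JSON object" };
  }
  const { intent, priority, summary } = data as Record<string, unknown>;
  // Enum check: only the three known intents are accepted.
  if (typeof intent !== "string" || !INTENTS.includes(intent)) {
    return { ok: false, error: `Invalid intent: ${String(intent)}` };
  }
  // Range check that the schema only describes in text.
  if (typeof priority !== "number" || !Number.isInteger(priority) || priority < 1 || priority > 5) {
    return { ok: false, error: "Priority must be an integer from 1 to 5" };
  }
  // Length check for the summary.
  if (typeof summary !== "string" || summary.length > 240) {
    return { ok: false, error: "Summary must be a string of at most 240 characters" };
  }
  return { ok: true, value: { intent: intent as Intent, priority, summary } };
}
```

Returning a result object instead of throwing makes it easy to count validation failures during evaluation runs.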

Prompt caching (TypeScript)

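```typescript
// Reuses the `client` from the previous example.
// `system` must be a string or an array of text blocks; cache_control goes on a block.
// Prefixes shorter than the model's minimum cacheable length (1,024 tokens for Sonnet models) are not cached.
const msg = await client.messages.create({
  model: "claude-sonnet-4-5",
  max_tokens: 800,
  system: [
    {
      type: "text",
      text: "Global policies and examples...",
      cache_control: { type: "ephemeral" }, // cache this large, stable prefix
    },
  ],
  messages: [{ role: "user", content: "New request text..." }],
});
```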
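When the stable prefix has several parts (for example, policies followed by examples), one option is to build the system prompt as a list of text blocks and put the cache breakpoint on the last stable block, so any per-request text placed after it does not invalidate the cache. A minimal sketch; `buildSystemBlocks` is a hypothetical helper, and `SystemTextBlock` is a local type that only mirrors the API's text-block shape.

```typescript
// Local type mirroring a Messages API text block with optional cache_control.
interface SystemTextBlock {
  type: "text";
  text: string;
  cache_control?: { type: "ephemeral" };
}

// Hypothetical helper: stable parts first, breakpoint on the last stable part,
// then optional per-request text that should not be cached.
function buildSystemBlocks(stableParts: string[], volatile?: string): SystemTextBlock[] {
  const blocks = stableParts.map((text): SystemTextBlock => ({ type: "text", text }));
  if (blocks.length > 0) {
    // Everything up to and including this block forms the cached prefix.
    blocks[blocks.length - 1].cache_control = { type: "ephemeral" };
  }
  if (volatile) {
    // Content after the breakpoint can change per request without breaking the cache.
    blocks.push({ type: "text", text: volatile });
  }
  return blocks;
}
```

The returned array can be passed as the `system` parameter of `messages.create`.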

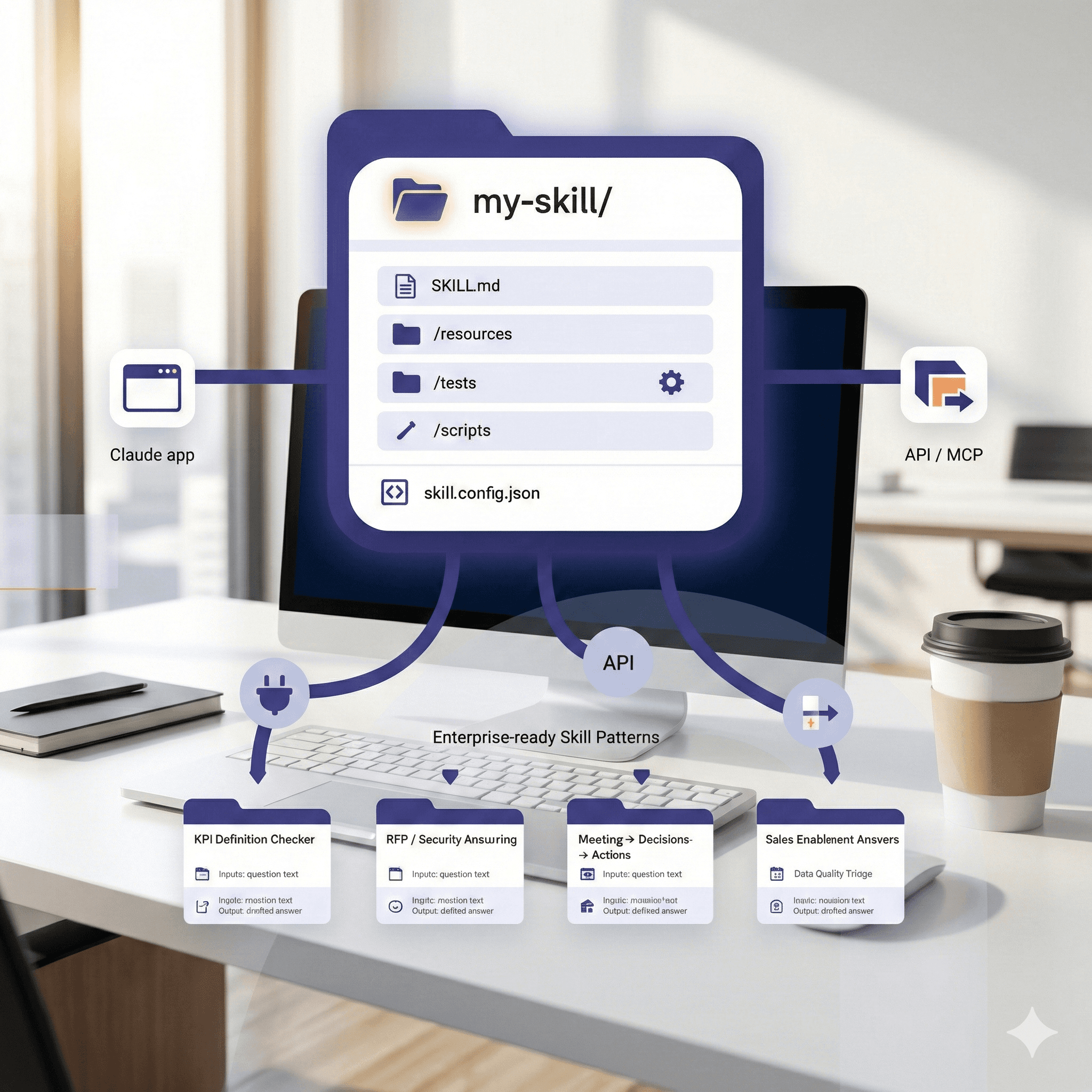

Tools, MCP, and computer use (when you are ready)

Tool use lets Claude request calls to functions you define (each with JSON Schema parameters); your code runs the function and sends the result back. It is ideal for database lookups, searches, sending emails, and posting to CRMs.
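The loop works like this: you send tool definitions with the request, Claude replies with `tool_use` blocks, your code runs the matching function, and you return `tool_result` blocks in the next user message. A minimal sketch of the execution side, assuming a hypothetical `getCustomer` tool with a stubbed handler; the block interfaces are local types that mirror the API's shapes rather than SDK imports.

```typescript
// Local types mirroring the API's tool_use and tool_result blocks.
interface ToolUseBlock {
  type: "tool_use";
  id: string;
  name: string;
  input: Record<string, unknown>;
}

interface ToolResultBlock {
  type: "tool_result";
  tool_use_id: string;
  content: string;
  is_error?: boolean;
}

// Tool definition in the Messages API shape (name, description, input_schema).
const tools = [
  {
    name: "getCustomer",
    description: "Look up a customer record by customer ID.",
    input_schema: {
      type: "object",
      properties: { customerId: { type: "string" } },
      required: ["customerId"],
    },
  },
];

type Handler = (input: Record<string, unknown>) => Promise<unknown>;

const handlers: Record<string, Handler> = {
  // Hypothetical stub; replace with a real database or CRM call.
  getCustomer: async (input) => ({ id: input.customerId, tier: "gold" }),
};

// Claude only requests the call; this function runs it and builds the reply block.
async function runToolCall(block: ToolUseBlock): Promise<ToolResultBlock> {
  const handler = handlers[block.name];
  if (!handler) {
    return { type: "tool_result", tool_use_id: block.id, content: `Unknown tool: ${block.name}`, is_error: true };
  }
  try {
    const output = await handler(block.input);
    return { type: "tool_result", tool_use_id: block.id, content: JSON.stringify(output) };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { type: "tool_result", tool_use_id: block.id, content: `Tool failed: ${message}`, is_error: true };
  }
}
```

Errors go back to Claude with `is_error: true` instead of crashing the loop, which gives the model a chance to recover or ask for help.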

MCP standardizes how models connect to tools and data; think of it as USB‑C for AI. Use it to avoid writing custom adapters and to take advantage of a growing ecosystem of prebuilt servers.

Computer use (beta) lets Claude operate a desktop through screenshots, mouse, and keyboard actions under your control, for tasks that can only be done in GUI applications.

Pattern

Begin with 1–2 tools (e.g., knowledge search, entity retrieval).

Introduce programmatic tool calling and a tool runner that retries with clear error messaging.

Log all calls (inputs/outputs) for traceability.
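A tool runner with retries and logging can be quite small. This sketch assumes transient failures surface as thrown errors; `withRetry`, the exponential backoff values, and the in-memory `callLog` are illustrative choices (in production the log would go to your observability stack).

```typescript
// One log entry per attempt, for traceability.
interface ToolCallLog {
  tool: string;
  input: unknown;
  attempt: number;
  ok: boolean;
  output?: unknown;
  error?: string;
}

const callLog: ToolCallLog[] = [];

// Hypothetical runner: retries fn, logs every attempt, and returns a clear error at the end.
async function withRetry<T>(
  tool: string,
  input: unknown,
  fn: () => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 200,
): Promise<{ ok: true; value: T } | { ok: false; error: string }> {
  let lastError = "";
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      const value = await fn();
      callLog.push({ tool, input, attempt, ok: true, output: value });
      return { ok: true, value };
    } catch (err) {
      lastError = err instanceof Error ? err.message : String(err);
      callLog.push({ tool, input, attempt, ok: false, error: lastError });
      if (attempt < maxAttempts) {
        // Exponential backoff: baseDelayMs, 2x, 4x, ...
        await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** (attempt - 1)));
      }
    }
  }
  return { ok: false, error: `${tool} failed after ${maxAttempts} attempts: ${lastError}` };
}
```

The final error string is what you would pass back to Claude as a `tool_result` with `is_error: true`.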

Prompt patterns that consistently help

Role + constraints: “You are a compliance reviewer. If uncertain, request access to the policy page.”

Examples (few-shot): one solid example can be more effective than numerous vague rules.

Output contract: state that invalid JSON means the task has failed.

Let it say “I don’t know”: this reduces hallucinations and builds trust.

Break down tasks: request a plan before proceeding with actions in high-stakes workflows.
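A sketch of how these patterns can fit together: a short system prompt carrying the role, the output contract, and permission to admit uncertainty, plus few-shot examples passed as earlier user/assistant turns. The prompt wording, the `Example` type, and `buildMessages` are assumptions for illustration.

```typescript
interface Message {
  role: "user" | "assistant";
  content: string;
}

interface Example {
  input: string;
  output: string; // the exact JSON you expect back
}

// Role + constraints, output contract, and an explicit "I don't know" rule.
const SYSTEM_PROMPT = [
  "You are a compliance reviewer.",
  "Respond only with JSON that matches the provided schema; invalid JSON means the task failed.",
  "If you are uncertain or the policy text is missing, say so instead of guessing.",
].join("\n");

// Few-shot examples become alternating user/assistant turns before the real input.
function buildMessages(examples: Example[], input: string): Message[] {
  const shots = examples.flatMap((ex): Message[] => [
    { role: "user", content: ex.input },
    { role: "assistant", content: ex.output },
  ]);
  return [...shots, { role: "user", content: input }];
}
```

Keeping the system prompt constant and varying only the examples and input also makes the prefix a good candidate for prompt caching.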

Evaluations & quality

Set success criteria early (exact-match fields, pass@k, precision/recall, latency). Build a compact evaluation setup:

10–20 real test cases with expected outputs.

Automated scoring for structural validity and business rules.

Weekly trend chart (accuracy, cost, latency, cache hit rate).
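A compact scorer for exact-match fields takes only a few lines. It assumes you have already collected one parsed output per test case, keyed by case ID; the field names and test data are hypothetical.

```typescript
interface EvalCase {
  id: string;
  input: string;
  expected: Record<string, string | number>; // fields that must match exactly
}

interface EvalReport {
  passed: number;
  total: number;
  accuracy: number;
  failures: { id: string; field: string; expected: unknown; actual: unknown }[];
}

// A case passes only if every expected field matches the model output exactly.
function scoreRun(cases: EvalCase[], outputs: Map<string, Record<string, unknown>>): EvalReport {
  const failures: EvalReport["failures"] = [];
  let passed = 0;
  for (const c of cases) {
    const actual: Record<string, unknown> = outputs.get(c.id) ?? {};
    let casePassed = true;
    for (const [field, expected] of Object.entries(c.expected)) {
      if (actual[field] !== expected) {
        casePassed = false;
        failures.push({ id: c.id, field, expected, actual: actual[field] });
      }
    }
    if (casePassed) passed++;
  }
  return { passed, total: cases.length, accuracy: cases.length ? passed / cases.length : 0, failures };
}
```

Recording `accuracy` for each run, alongside latency and cost, gives you the data points for the weekly trend chart.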

Red flags: silently changing output formats, rising latency, tool-call failures, and overconfident answers.

Cost & performance checklist

Prompt caching for static prefixes and examples.

Streaming responses so the UI feels faster.

Concise messages: place long context behind a retrieval step rather than inline.

Appropriate model sizing: Haiku for extraction/routing; Sonnet/Opus for intensive reasoning.

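Batching for non-interactive jobs: run them through the Message Batches API, which processes requests asynchronously at a lower price than real-time calls.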
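To check whether prompt caching is paying off, you can aggregate the `usage` object returned with each response. The field names below (`input_tokens`, `output_tokens`, `cache_creation_input_tokens`, `cache_read_input_tokens`) follow the Messages API; the hit-rate definition (cached reads as a share of all input tokens) is one reasonable choice, not an official metric.

```typescript
// Mirrors the usage fields on a Messages API response.
interface Usage {
  input_tokens: number; // uncached input tokens
  output_tokens: number;
  cache_creation_input_tokens?: number | null; // tokens written to the cache
  cache_read_input_tokens?: number | null; // tokens served from the cache
}

function cacheStats(usages: Usage[]) {
  let uncached = 0, written = 0, read = 0, output = 0;
  for (const u of usages) {
    uncached += u.input_tokens;
    written += u.cache_creation_input_tokens ?? 0;
    read += u.cache_read_input_tokens ?? 0;
    output += u.output_tokens;
  }
  const totalInput = uncached + written + read;
  return {
    totalInput,
    output,
    // Share of all input tokens that were read from the cache.
    hitRate: totalInput > 0 ? read / totalInput : 0,
  };
}
```

A low hit rate usually means the "stable" prefix is changing between requests or falls below the minimum cacheable length.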

Security, safety & governance

Data scopes: use least-privilege keys for tools and MCP servers; avoid broad access.

Handling PII: mask it on input; unmask only when authorized.

Human control points: require approvals for significant actions (e.g., payments, customer communications).

Audit & observability: log prompts, tool requests, and outputs with request IDs, and retain the logs for compliance.

Policy prompts: encode “must/never” rules in a concise system layer and keep it under version control.
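Masking on input and unmasking only when authorized can be done with a simple token vault. This sketch assumes email addresses and phone numbers are the PII in scope; the regular expressions are deliberately simple (they miss some formats and can also catch other digit runs such as dates), and `PiiVault` is a hypothetical class, not a library.

```typescript
// Deliberately simple patterns; tune or replace them for your data.
const EMAIL = /[\w.+-]+@[\w-]+(\.[\w-]+)+/g;
const PHONE = /\+?\d[\d\s().-]{7,}\d/g;

class PiiVault {
  private store = new Map<string, string>();
  private counter = 0;

  // Replace each match with a placeholder token and remember the original value.
  mask(text: string): string {
    const replace = (kind: string) => (match: string) => {
      const token = `[${kind}_${++this.counter}]`;
      this.store.set(token, match);
      return token;
    };
    return text.replace(EMAIL, replace("EMAIL")).replace(PHONE, replace("PHONE"));
  }

  // Restore originals only for authorized callers; otherwise return the masked text.
  unmask(text: string, authorized: boolean): string {
    if (!authorized) return text;
    return text.replace(/\[(EMAIL|PHONE)_\d+\]/g, (token) => this.store.get(token) ?? token);
  }
}
```

Only the masked text is sent to Claude; the vault stays inside your own system.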

Practical strategies

1) Knowledge answering with citations

Retrieve the top 5 passages using your search/KB tool.

Ask Claude to answer with inline citations and a confidence label.

Return JSON: {answer, citations: [...], confidence: enum}.
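One way to express this contract and catch invented citations, assuming each retrieved passage carries a stable ID and that confidence uses the labels high/medium/low (the enum values are an assumption). `answerSchema` could serve as the structured-output schema; `checkCitations` is a hypothetical post-check.

```typescript
type Confidence = "high" | "medium" | "low";

interface CitedAnswer {
  answer: string;
  citations: string[]; // IDs of retrieved passages
  confidence: Confidence;
}

// JSON Schema for {answer, citations: [...], confidence: enum}.
const answerSchema = {
  type: "object",
  additionalProperties: false,
  properties: {
    answer: { type: "string" },
    citations: { type: "array", items: { type: "string" } },
    confidence: { type: "string", enum: ["high", "medium", "low"] },
  },
  required: ["answer", "citations", "confidence"],
};

// Flags citations to passages that were never retrieved, and uncited confident answers.
function checkCitations(result: CitedAnswer, retrievedIds: string[]): string[] {
  const known = new Set(retrievedIds);
  const problems: string[] = [];
  if (result.citations.length === 0 && result.confidence !== "low") {
    problems.push("Uncited answer must be labeled low confidence");
  }
  for (const id of result.citations) {
    if (!known.has(id)) problems.push(`Cites unknown passage: ${id}`);
  }
  return problems;
}
```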

2) Support triage

Classify intent and priority, summarize the issue, and suggest next steps.

If the case is high-risk or data is missing, route it to a human.

Record the reasoning in an audit field that is not shown to the customer.
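The routing rule can live in plain code rather than in the prompt, which keeps it testable. In this sketch the high-risk threshold (priority 4 or above), the required fields, and the `auditNote` and `nextSteps` field names are assumptions.

```typescript
interface TriageResult {
  intent: "support" | "sales" | "other";
  priority: number; // 1 (low) to 5 (urgent)
  summary: string;
  nextSteps: string[];
  auditNote: string; // reasoning kept for the audit log, never shown to the customer
}

type Route = { queue: "human"; reason: string } | { queue: "auto" };

// Missing core fields or high priority send the case to a person.
function route(t: Partial<TriageResult>, highRiskPriority = 4): Route {
  const missing = (["intent", "priority", "summary"] as const).filter((k) => t[k] === undefined);
  if (missing.length > 0) return { queue: "human", reason: `Missing: ${missing.join(", ")}` };
  if ((t.priority ?? 0) >= highRiskPriority) return { queue: "human", reason: "High priority" };
  return { queue: "auto" };
}
```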

3) Sales research assistant

Tool actions: company lookup → CRM enrichment → draft email.

Produce JSON plus a separate draft email for human review.

4) Agent for back-office operations (computer use)

Read screen → navigate legacy GUI → export data → upload to the system of record.

Guard it with timeouts, allowlists, and manual approvals.
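Guardrails for a computer-use agent can wrap each proposed action before it runs. The action kinds, the allowlist contents, and the `execute` and `approve` callbacks below are hypothetical; the sketch only shows the order of checks: allowlist, then human approval, then execution under a timeout.

```typescript
type Action =
  | { kind: "open_app"; app: string }
  | { kind: "export"; app: string }
  | { kind: "upload"; target: string };

const ALLOWED_APPS = new Set(["LegacyERP"]); // hypothetical allowlist
const NEEDS_APPROVAL = new Set<Action["kind"]>(["upload"]); // actions a human must approve

// Rejects if p does not settle within ms; the timer is always cleared.
async function withTimeout<T>(p: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  try {
    return await Promise.race([p, timeout]);
  } finally {
    clearTimeout(timer);
  }
}

async function guardedStep(
  action: Action,
  execute: (a: Action) => Promise<void>,
  approve: (a: Action) => Promise<boolean>,
  timeoutMs = 30_000,
): Promise<string> {
  if ("app" in action && !ALLOWED_APPS.has(action.app)) return `Blocked: ${action.app} is not on the allowlist`;
  if (NEEDS_APPROVAL.has(action.kind) && !(await approve(action))) return "Rejected by reviewer";
  await withTimeout(execute(action), timeoutMs);
  return "Done";
}
```

The timeout stops waiting but does not cancel the underlying action; a real agent would also need a way to abort it.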

Common challenges (and simple fixes)

Free-text outputs → switch to structured outputs.

Massive prompts → use retrieval plus prompt caching.

Excessive automation → keep humans in the loop for edge cases at first.

One large tool → split it into smaller, focused tools with clear schemas.

No evaluations → even 20 test cases beat guesswork.

FAQ

Is Claude suitable for beginners?

Yes. Begin with the Messages API and structured outputs; integrate tools later.

How do I reduce hallucinations?

Ask Claude to state when it is uncertain, ground it in retrieved context, and require citations or structured outputs.

How large a context window should I expect?

Up to ~200k tokens with long-context models, but retrieve only what’s necessary.

Can Claude work inside my systems?

Yes, through tool use and MCP; for desktop applications, consider computer use (beta) with protective measures.

How do I evaluate ROI?

Track accuracy, time saved, error reduction, cost per task, and, where applicable, revenue gains.

Book a Claude Build Workshop: we’ll help you choose the right model, connect tools via MCP, enable structured outputs and caching, and set up an evaluation harness so you can launch with confidence.

Generation Digital

Business Number: 256 9431 77 | Copyright 2026 | Terms and Conditions | Privacy Policy
